Addressing Complex Software Systems with Microservices and CI/CD

A monolithic application, delivered as a single cohesive unit of code, may take just 2–3 years to become an outdated “black box” that is hard to support and maintain. Over time, a major update may require redeploying the entire system, while a single failure may turn into a disaster and, eventually, downtime. A technology stack, once chosen, becomes a rigid structure that can hardly accommodate new components.

This article describes a more reasonable approach to building a software architecture that solves this problem, based on microservices, continuous integration, and related practices. It is not meant to be a deep technical dive; instead, it provides a high-level overview to help your CEO/CIO stop doing things the outdated way.

What’s important?

Requirements may vary depending on the product or industry; however, most enterprises focus on the essentials described below when building complex large-scale systems.

Because innovating ahead of competitors is a matter of survival for businesses, they need to be able to deliver apps fast and update them often. It should also be easy for a company to maintain a system once it is in production while introducing new features as the software evolves.

High availability is of major importance, too, comprising fault tolerance, automated disaster recovery, backups, etc. Scalability should guarantee a system’s ability to serve a growing number of users and requests and a growing volume of data.

Transparency across the technology stack and processes should make it possible to detect and eliminate problems as soon as they occur by monitoring essential technical and business metrics. Most enterprises are strict about security, which covers protection of sensitive data (or devices), prevention of unauthorized access, etc. This requirement becomes even more important as the system grows in size and complexity.

The list above is hardly complete, since requirements vary depending on a specific industry and type of business. For a healthcare project, for instance, regulatory compliance will also matter, and regulations may differ from region to region. The reality is that every large business, even a traditional one such as a clothing retailer or an 80-year-old insurance company, is now a software business, managing and delivering apps to stay ahead of the curve.

What’s wrong with monoliths?

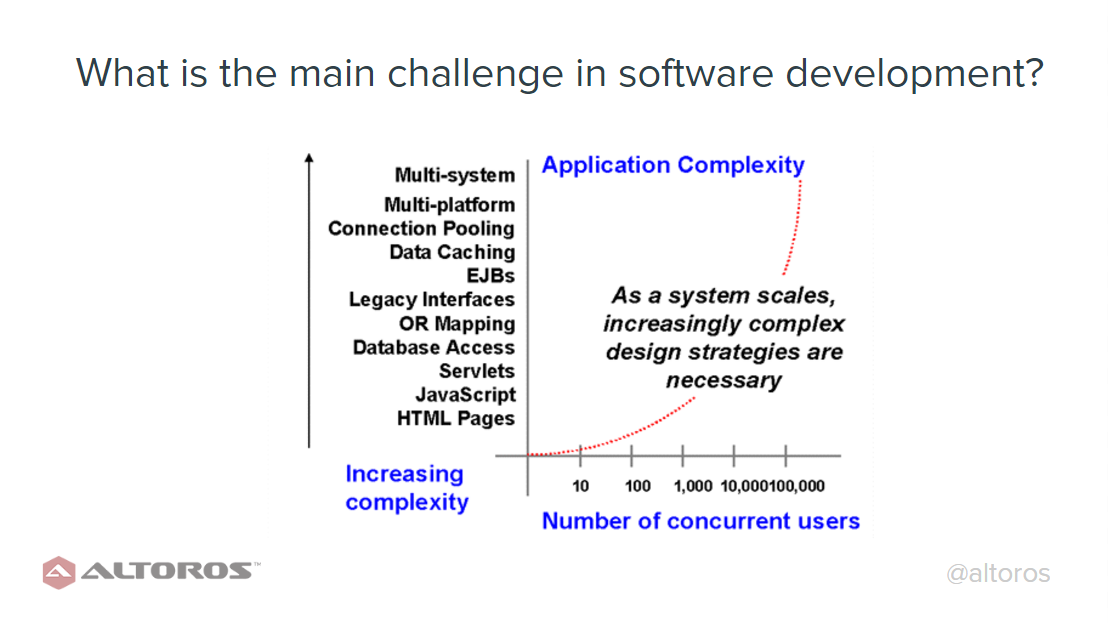

Although a monolithic architecture may meet most of the essential requirements when just launched, it eventually ends up facing some (or all) of the following challenges as the system grows:

- Long release cycles. Over time, the system’s code becomes more and more complex, and introducing new features gets harder and takes longer. As a result, even a minor update may take weeks or months of work and still require engineers to rebuild and redeploy the entire monolith. Frequent changes become too costly to handle, since developers have to thoroughly review the existing code to avoid mistakes and system instability.

- A monolithic architecture is hard to maintain. As time passes, technologies tend to become obsolete. In a monolithic architecture, however, adopting a new framework or language is often nearly impossible. In many cases, developers have to stick to the chosen technology stack, as migrating to another one may require rewriting the entire application.

- Limited scalability. To scale horizontally, companies have to build sophisticated load-balancing models; otherwise, the only option is to scale vertically by buying more hardware. Both approaches are not only time- and resource-consuming but also have physical limitations.

- Low resilience. A fault in a monolithic architecture can turn into a total crash that takes the entire system down. Lacking proper scaling due to its rigid architecture, a monolith also runs a high risk of failure during unexpected load (or traffic) spikes. On top of that, new functionality may introduce new bottlenecks into the code base, making the system even more unstable.

- Insufficient monitoring and prediction. Lack of transparency in a monolithic architecture makes it difficult to discover the actual cause of a fault or find an efficient remedy for it. Very often, monolithic architectures provide only a general insight into the inner workings of a system, without the ability to measure performance or status of a particular feature. Therefore, recovery from a fault may become a time-consuming process associated with significant financial losses while the system is not operating as it should.

For a more detailed comparison of monolithic and microservices-based architectures, as well as the pros and cons of both, read our technical guide on the topic.

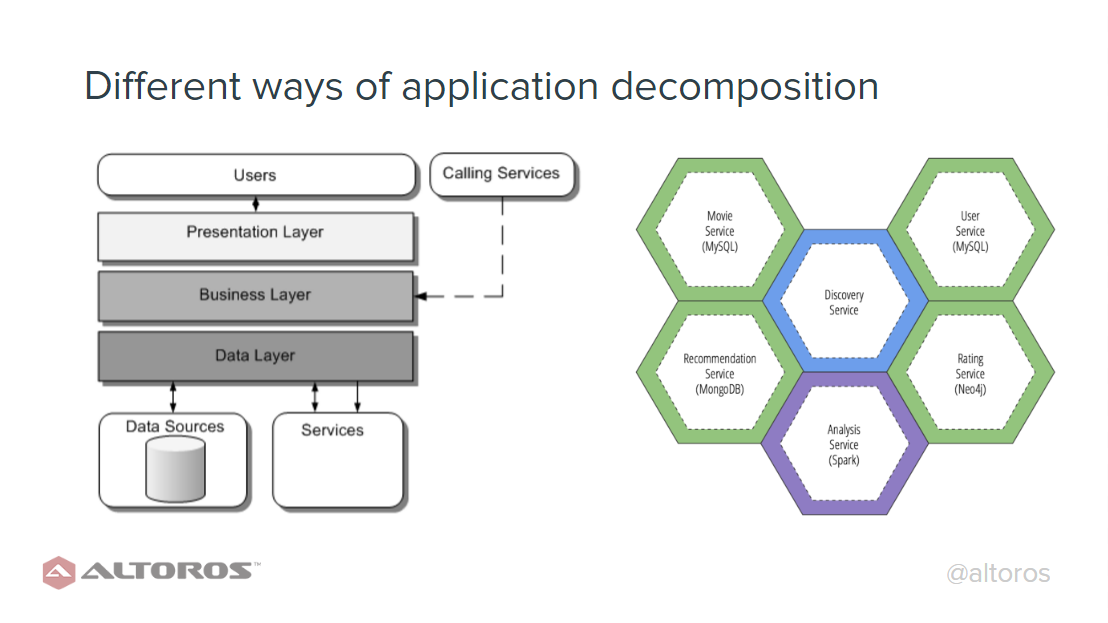

Changing the architectural approach to innovate faster

One efficient way to address the challenges described earlier is to adopt a microservices-based approach, decomposing a monolithic architecture into a number of smaller, loosely coupled parts. Each part is delivered in accordance with domain-driven design and the Twelve-Factor App methodology. Some of the results this approach brings:

- Independence of services. Each microservice is a small, separately deployed delivery unit with its own simple business logic. So, developers in a team can add new features independently of other functional teams and update their services without affecting the entire system.

- Isolated execution. Microservices run as isolated single-purpose applications with explicit dependencies. Due to this, it is possible to employ containerization technologies (e.g., Docker, Warden, or Rocket) to use computational resources more efficiently.

- Flexible scaling options. When components in a system are implemented as microservices, scaling does not have to cover all of them; one can scale only the specific services that need it.

- Autonomous teams. As a rule, the system is divided into multiple components corresponding to functional teams that focus on different business units or features, such as finance, customer service, and delivery. Teams can work rather independently, each on its own part of the functionality and services, using their preferred technologies. For distributed teams, this is a critical advantage, as time zone differences and the lack of co-location matter far less.

- Choice of technology. A microservices architecture gives teams the freedom to use multiple data storages (MySQL, CouchDB, Redis, etc.), languages (Java, Ruby, Python, Go, etc.), and frameworks (Spring Boot, Java EE, etc.). This facilitates migrating from one technology stack to another and combining various technologies within one stack to build different system components.

- Fault tolerance. Although a system based on microservices comprises multiple small units that can fail, the units are loosely coupled, so a failure in one of them is less likely to bring down the rest. It also takes less time and effort to identify the exact component responsible for a failure and find a way to fix it.
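The flexible-scaling point can be illustrated with a minimal Python sketch in which each service’s replica count is computed on its own. It borrows the proportional rule of the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × observed / target)) as a stand-in; the service names, utilization figures, and min/max bounds are hypothetical, and a production autoscaler would typically add cooldowns and smoothing.

```python
from dataclasses import dataclass


@dataclass
class ServiceStatus:
    """Hypothetical load snapshot for one microservice."""
    name: str
    replicas: int        # instances currently running
    cpu_percent: int     # observed average CPU utilization, in percent
    target_percent: int  # utilization each instance should ideally run at


def desired_replicas(s: ServiceStatus, min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Proportional rule ceil(replicas * observed / target), clamped to bounds."""
    numerator = s.replicas * s.cpu_percent
    raw = (numerator + s.target_percent - 1) // s.target_percent  # integer ceiling division
    return max(min_replicas, min(max_replicas, raw))


def plan_scaling(services: list[ServiceStatus]) -> dict[str, int]:
    """Each service is sized independently; no other service is touched."""
    return {s.name: desired_replicas(s) for s in services}


fleet = [
    ServiceStatus("checkout", replicas=4, cpu_percent=90, target_percent=60),
    ServiceStatus("catalog", replicas=2, cpu_percent=30, target_percent=60),
    ServiceStatus("billing", replicas=3, cpu_percent=60, target_percent=60),
]
print(plan_scaling(fleet))  # {'checkout': 6, 'catalog': 1, 'billing': 3}
```

Only checkout grows (from 4 to 6 instances) and catalog shrinks, while billing stays as it is. In a monolith, the whole application would have to be replicated to give checkout more capacity.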

Microservices communicate with each other via message brokers (such as RabbitMQ) and APIs. Note that there is a variety of frameworks enabling developers to speed up and automate API implementation.
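To show what broker-based communication looks like from a service’s point of view, here is a minimal Python sketch. Python’s standard `queue.Queue` stands in for a broker queue (a real deployment would use RabbitMQ through a client library), and the service names and message fields are hypothetical. The producer publishes and moves on, and the consumer processes messages at its own pace, so neither service calls the other directly.

```python
import json
import queue
import threading

# In-process stand-in for a broker queue such as one hosted by RabbitMQ.
order_events = queue.Queue()


def order_service(order_id: int, total: float) -> None:
    """Producer: publishes an 'order placed' message and returns immediately."""
    message = {"type": "order_placed", "order_id": order_id, "total": total}
    order_events.put(json.dumps(message))  # only serialized data crosses the boundary


def notification_service(processed: list, stop: threading.Event) -> None:
    """Consumer: handles messages until told to stop and the queue is drained."""
    while not (stop.is_set() and order_events.empty()):
        try:
            raw = order_events.get(timeout=0.1)
        except queue.Empty:
            continue
        event = json.loads(raw)
        processed.append(f"email sent for order {event['order_id']}")
        order_events.task_done()


def run_demo(order_ids: list) -> list:
    processed: list = []
    stop = threading.Event()
    consumer = threading.Thread(target=notification_service, args=(processed, stop))
    consumer.start()
    for order_id in order_ids:
        order_service(order_id, total=10.0 * order_id)
    order_events.join()  # block until every published message has been handled
    stop.set()
    consumer.join()
    return processed


print(run_demo([1, 2, 3]))
```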

A special type of microservices-based solution is an event-driven architecture, in which services are triggered by notable events. Although such architectures are more difficult to implement, they enable efficient management of computational resources (e.g., with OpenWhisk) and save money thanks to flexible, usage-based billing.
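The event-driven model boils down to a simple idea: code is registered against event types and runs only when a matching event arrives. The Python sketch below shows that routing logic in-process. It is not the OpenWhisk API (where triggers, rules, and actions play these roles), and the event names and handlers are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], str]


class EventRouter:
    """Maps event types to the handlers that should run when they occur."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def on(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def emit(self, event_type: str, payload: dict) -> List[str]:
        # Only handlers registered for this event type run; other events trigger nothing.
        return [handler(payload) for handler in self._handlers.get(event_type, [])]


def make_thumbnail(payload: dict) -> str:
    return f"thumbnail created for {payload['file']}"


def scan_for_viruses(payload: dict) -> str:
    return f"virus scan finished for {payload['file']}"


router = EventRouter()
router.on("file_uploaded", make_thumbnail)
router.on("file_uploaded", scan_for_viruses)
print(router.emit("file_uploaded", {"file": "cat.png"}))
print(router.emit("user_deleted", {"user": 42}))  # no handlers registered, so []
```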

An integrated deployment workflow

Because a microservices-based architecture contains numerous components, the integration and deployment processes become more challenging to manage. This is where continuous integration and continuous delivery step in.

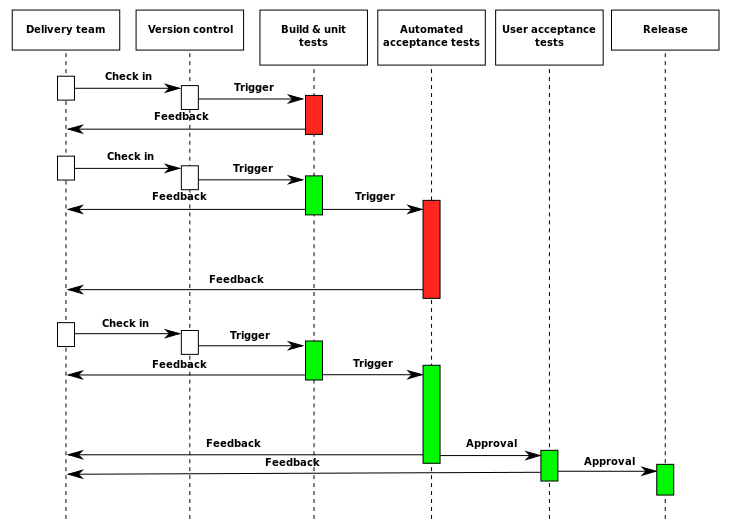

Continuous integration (CI) is a practice that requires developers to merge their code into a shared repository at least daily. The practice implies maintaining a single code base in a revision control system (e.g., Git, Mercurial, Subversion) and committing changes at a reasonable frequency to avoid integration conflicts. Frequent integrations make it faster and easier to locate and eliminate errors in the source code, so developers can focus more on development than on bug fixing. The CI practice also involves automated integration tests that a CI server runs as soon as it detects a new commit.
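The CI loop described above (detect a new commit, then test it) can be sketched in a few lines of Python. This is a hypothetical model rather than how any particular CI server is built: commit IDs are plain strings, and `run_tests` is a stub for the real integration test suite.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class BuildResult:
    commit: str
    passed: bool


@dataclass
class CIServer:
    """Hypothetical CI server that tests each new commit on the shared mainline."""
    run_tests: Callable[[str], bool]
    last_built: Optional[str] = None
    history: List[BuildResult] = field(default_factory=list)

    def on_poll(self, head_commit: str) -> Optional[BuildResult]:
        """Called periodically (or by a webhook) with the repository's latest commit."""
        if head_commit == self.last_built:
            return None  # nothing new has been merged since the last build
        result = BuildResult(head_commit, self.run_tests(head_commit))
        self.last_built = head_commit
        self.history.append(result)
        return result


# Stub test suite: pretend any commit whose ID starts with "bad" breaks integration.
ci = CIServer(run_tests=lambda commit: not commit.startswith("bad"))
print(ci.on_poll("a1f3"))   # BuildResult(commit='a1f3', passed=True)
print(ci.on_poll("a1f3"))   # None: no new commit
print(ci.on_poll("bad42"))  # BuildResult(commit='bad42', passed=False)
```

Because every merge is tested on its own, a failing build points at a small, recent change, which is why frequent integration makes errors easier to locate.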

Continuous delivery (CD) is an engineering approach—continuous integration being part of it—that provides the ability to build and release software safely and quickly in a sustainable way. The approach requires implementing a deployment pipeline and involves the following:

- Automating delivery of builds, possibly making it a matter of just a couple of clicks. Such tools as Capistrano, Maven, and Gradle can assist in achieving the goal.

- Ensuring traceability of builds.

- Enabling automatic testing of builds and rapid feedback on code issues.

- Keeping software deployable throughout a development life cycle.

- Automating deployment and release of software versions.
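A deployment pipeline with these properties can be sketched as an ordered list of stages that every build passes through. In this hypothetical Python model, each stage is a stub that returns success or failure, the run stops at the first failing stage so a broken build is never released, and every log line carries the commit ID for traceability. Stage names and the failing commit are made up.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[str], bool]]


def run_pipeline(commit: str, stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure."""
    log = []
    for name, step in stages:
        ok = step(commit)
        log.append(f"{commit}:{name}:{'ok' if ok else 'FAILED'}")  # traceable per commit
        if not ok:
            break  # later stages (e.g., release) never see a failing build
    return log


# Stub stages; in practice these would call build, test, and deployment tools.
STAGES: List[Stage] = [
    ("build", lambda commit: True),
    ("unit-tests", lambda commit: True),
    ("acceptance-tests", lambda commit: commit != "c3"),  # pretend c3 breaks a scenario
    ("deploy-staging", lambda commit: True),
    ("release", lambda commit: True),
]

for commit in ["c2", "c3"]:
    print(run_pipeline(commit, STAGES))
```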

Test automation is key for continuous integration and continuous delivery to work properly, as it substantially reduces the duration of a development life cycle. The following types of tests are used in a CI/CD pipeline:

- unit tests (with JUnit, NUnit, or TestNG)

- acceptance tests (using Cucumber)

- user interface tests (e.g., Ranorex, TestComplete)

- end-to-end tests (with WebDriver)
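For illustration, here is what a unit test looks like in Python’s built-in `unittest`, which follows the same xUnit style as JUnit and NUnit. The `apply_discount` function is a hypothetical piece of a pricing microservice; each test checks one small behavior in isolation and runs quickly, which is what makes it practical to run the suite on every commit.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical pricing-service function: price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100  # integer cents, rounded down


class ApplyDiscountTest(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(2000, 25), 1500)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(999, 0), 999)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(999, 100), 0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)
```

Saved as a module, the tests run with `python -m unittest <module_name>`; a CI server would run the same command on each new commit.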

Continuous delivery in action (Image credit)

A CI/CD pipeline can be implemented using a standardized tool (e.g., Jenkins, Travis CI, or Concourse). In addition, it often requires creating a DevOps culture within an organization, one that fosters communication and collaboration between developers, operations engineers, and management. Read the blog post to learn about the related tips and challenges.

Easier on a Platform-as-a-Service

Although microservices and Platform-as-a-Service (PaaS) do not necessarily require each other, they work well together. A PaaS like Cloud Foundry (including all of its distributions, such as IBM Bluemix, Pivotal CF, GE Predix, Stackato, etc.) takes away much of the headache associated with managing microservices. It addresses such issues as implicit interfaces, operational overhead, and operational complexity.

A PaaS ensures high-level automation, simplifying deployment and management of microservices:

- Developers do not have to worry about infrastructure, but can focus on the code instead.

- Application runtimes are automatically deployed to isolated containers, which, above all, makes it possible to achieve loose coupling of microservices.

- With a build-automation solution deployed to a PaaS as a regular application, delivery of microservices becomes continuous and far less time-consuming.

- Service binding via a service broker makes it easier to integrate an application with an external service.
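In Cloud Foundry, the service broker provisions an instance and, when the instance is bound to an app, the platform passes its credentials to the app in the `VCAP_SERVICES` environment variable, a JSON object keyed by service label. A minimal Python sketch of reading it follows; the service name, label, plan, and credential fields in the sample are made up, since each broker defines its own credential keys.

```python
import json
import os
from typing import Mapping, Optional


def find_credentials(service_name: str, env: Optional[Mapping[str, str]] = None) -> Optional[dict]:
    """Return the credentials of the bound service instance named `service_name`, if any."""
    env = os.environ if env is None else env
    services = json.loads(env.get("VCAP_SERVICES", "{}"))
    for instances in services.values():  # top-level keys are service labels
        for instance in instances:
            if instance.get("name") == service_name:
                return instance.get("credentials", {})
    return None


# Made-up sample of what the platform might inject for one bound service.
sample_env = {
    "VCAP_SERVICES": json.dumps({
        "rabbitmq": [{
            "name": "orders-queue",
            "label": "rabbitmq",
            "plan": "standard",
            "credentials": {"uri": "amqp://user:secret@broker.example.com:5672/orders"},
        }]
    })
}

creds = find_credentials("orders-queue", env=sample_env)
print(creds["uri"])
```

Because the app looks up its dependencies by name at runtime instead of hard-coding connection strings, the same build can be promoted unchanged from one environment to another.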

In addition to backup and disaster recovery, a PaaS like Pivotal CF may offer self-healing and deployment capabilities that help achieve high availability by:

- restoring failed application instances

- restarting failed platform processes

- recreating failed virtual machines

- deploying app instances and components to different availability zones
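The first and last items can be pictured as a reconciliation step: compare the desired number of instances with the healthy ones, then start replacements spread across availability zones. The Python sketch below is a simplified, hypothetical model of that idea, not Cloud Foundry’s actual scheduler; zone names and counts are made up.

```python
from collections import Counter
from typing import List


def plan_replacements(desired: int, running_zones: List[str], zones: List[str]) -> List[str]:
    """Return the zone for each instance that must be started to restore `desired`.

    `running_zones` holds the zone of every healthy instance; failed instances are
    simply missing. Each replacement goes to the zone with the fewest instances,
    so that the instances stay spread across zones.
    """
    per_zone = Counter({zone: 0 for zone in zones})
    per_zone.update(running_zones)
    placements = []
    for _ in range(max(0, desired - len(running_zones))):
        zone = min(zones, key=lambda z: per_zone[z])  # ties go to the first listed zone
        per_zone[zone] += 1
        placements.append(zone)
    return placements


# Four instances are desired; both instances in az2 have just crashed.
print(plan_replacements(4, ["az1", "az1"], ["az1", "az2"]))  # ['az2', 'az2']
# A fresh app with three instances is spread over three zones.
print(plan_replacements(3, [], ["az1", "az2", "az3"]))  # ['az1', 'az2', 'az3']
```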

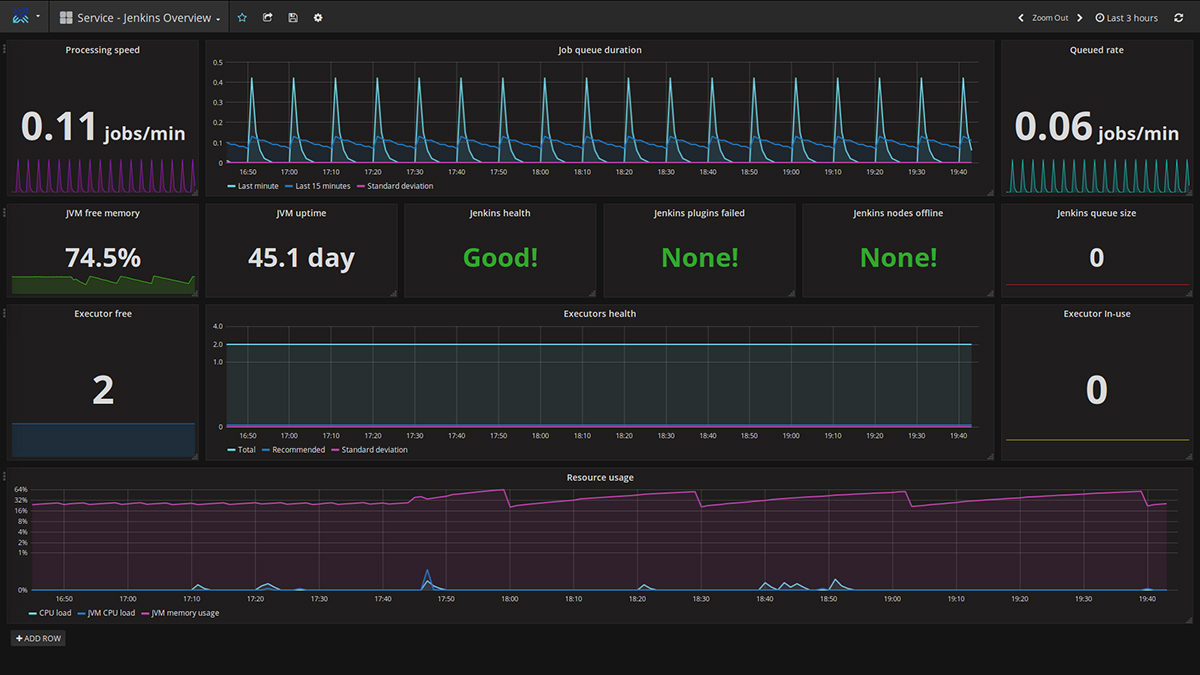

A PaaS may also include tools that simplify monitoring performance of microservices. Altoros has recently introduced a tool for PaaS monitoring—Heartbeat. Featuring logging, alerting, and metrics visualization capabilities, the solution helps to track the status of an IaaS, PaaS components, third-party services, containers, and apps.

Metrics visualization with Heartbeat

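The alerting part of such a tool can be sketched as rules evaluated against recent metric samples. The Python below is a generic, hypothetical example, not Heartbeat’s API; metric names and thresholds are made up. Requiring several consecutive breaches before firing is one common way to avoid alerting on a single short spike.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AlertRule:
    """Fire when `metric` exceeds `threshold` in each of the last `consecutive` samples."""
    metric: str
    threshold: float
    consecutive: int

    def __post_init__(self) -> None:
        if self.consecutive < 1:
            raise ValueError("consecutive must be at least 1")


def evaluate(rules: List[AlertRule], samples: Dict[str, List[float]]) -> List[str]:
    alerts = []
    for rule in rules:
        recent = samples.get(rule.metric, [])[-rule.consecutive:]
        if len(recent) == rule.consecutive and all(v > rule.threshold for v in recent):
            alerts.append(f"ALERT {rule.metric} > {rule.threshold} for {rule.consecutive} samples")
    return alerts


samples = {
    "checkout.latency_ms": [120, 480, 510, 530],  # sustained slowdown
    "catalog.error_rate": [0.01, 0.20, 0.01],     # one brief spike
}
rules = [
    AlertRule("checkout.latency_ms", threshold=400, consecutive=3),
    AlertRule("catalog.error_rate", threshold=0.05, consecutive=2),
]
print(evaluate(rules, samples))  # ['ALERT checkout.latency_ms > 400 for 3 samples']
```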

Real-life implementations at large organizations

So far, introducing microservices and a CI/CD pipeline has helped numerous organizations to optimize their business processes, manufacturing workflows, and product delivery.

GAP—a global fashion business—adopted a microservices-based approach as part of its strategy aimed at optimization of pricing in local stores around the globe. The new approach has brought a major change to the corporate development workflow, speeding up and automating deployment via a federated continuous integration pipeline.

Using microservices on top of a PaaS, BNY Mellon and Allstate, major players in banking and insurance respectively, made application delivery several times faster and easier while meeting the strict requirements of these highly regulated, traditional industries.

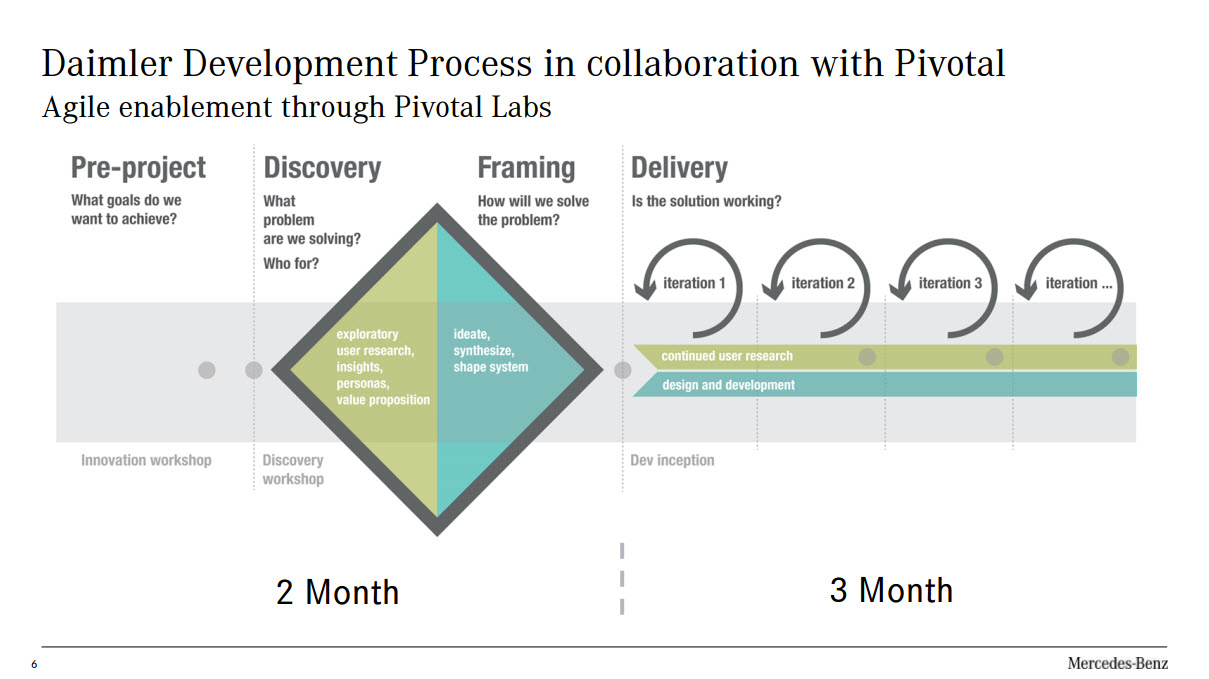

Relying on the Cloud Foundry platform, microservices, and continuous delivery, Daimler was able to iterate more frequently and integrate early customer feedback into the development process. As a result, a new release of its smart Mercedes me app was pushed into production in just six months.

Daimler making iterations more frequently

Microservices also helped to accelerate delivery of services to multiple organizations from the public sector: US government administration, the Dutch government, San Francisco University, etc.

Based on microservices, GE built an IoT dashboard to improve the legacy processes at its manufacturing premises, while Cisco delivered the Collaboration Cloud—a virtual conference platform that was used to create the “Wall of America.”

Having built CI/CD pipelines, Comcast and DigitalGlobe managed to substantially increase development speed.

Unigma is one of the most recent projects where Altoros—partnering with a managed infrastructure services provider—delivered a multi-tenant cloud platform. Using the Bluemix platform and microservices, the company was able to release a full-fledged system in just 6 months.

Where to start?

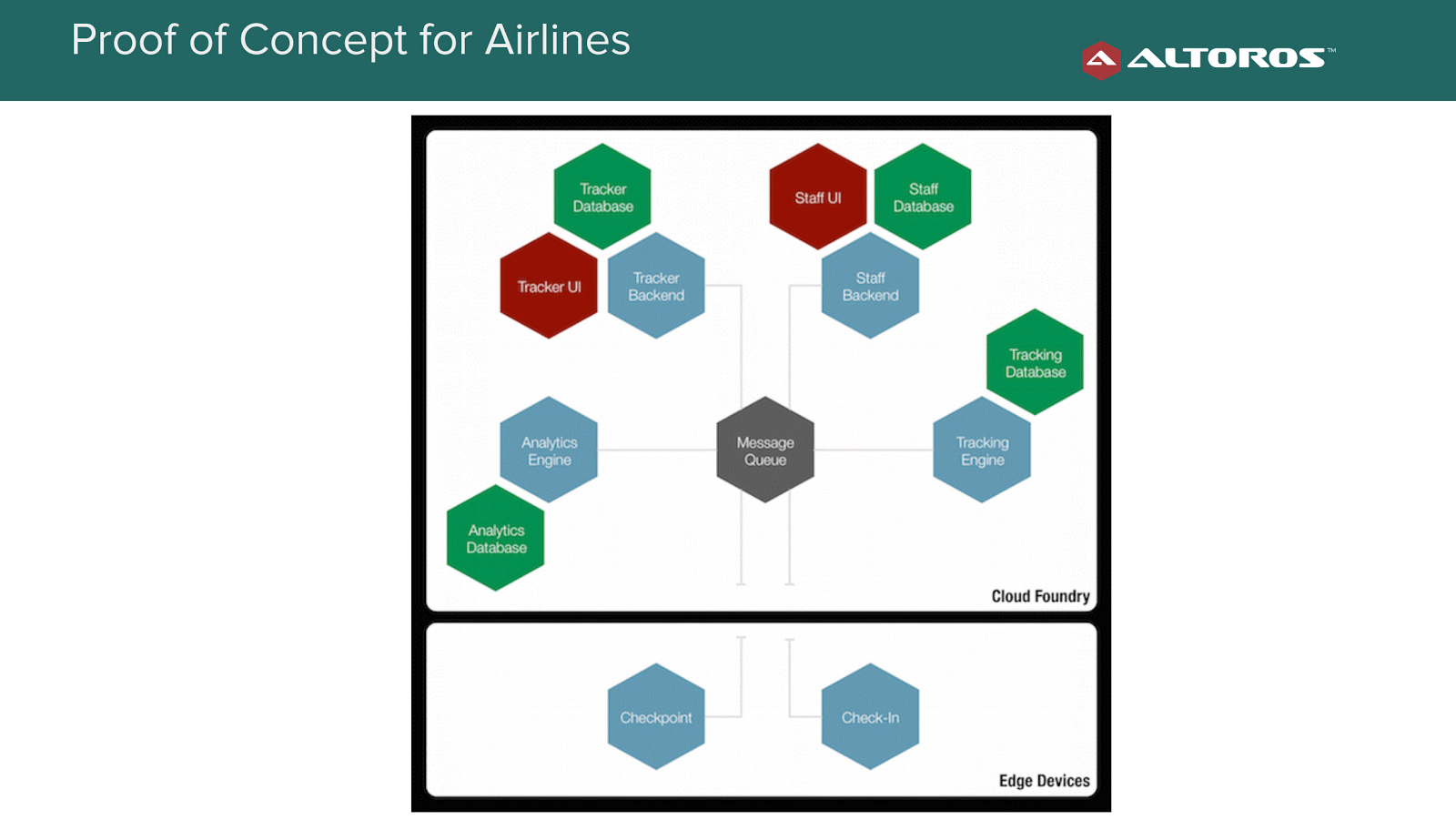

Building a proof of concept is one of the best ways to see microservices and a CI/CD pipeline in action. Prototyping makes it possible to try out a technology stack against the existing infrastructure and see how a system will perform without having to build it in full. This saves a lot of trouble by identifying possible bottlenecks and risks early.

For instance, Altoros—together with GE, Oracle, and M2Mi—is participating in the Smart Airline Baggage Management Testbed. The initiative aims at building a prototype of an IoT system to reduce baggage mishandling at airports, using microservices on top of GE’s Predix platform. The prototype enables airlines to evaluate how RFID-based baggage tracking can ensure compliance with Resolution 753 of the International Air Transport Association, which should be achieved by June 2018.

Another example of rapid prototyping is an automation system for greenhouse farming. In this case, using GE’s Predix as a PaaS helped Altoros to create a microservices-based prototype in just 48 hours.

Today, enterprises face disruption from smaller, innovative startups, and only a few of them may still be in business by the end of the decade. So, the ability to transform faster becomes key to survival. Microservices, a CI/CD pipeline, and a PaaS can help companies outperform their competitors in the long run or, at least, stay in business.

If you need to identify bottlenecks in your complex infrastructure or improve the performance of a monolith, contact us to start the change.

References

- “The Twelve-Factor App” (Adam Wiggins)

- “Migrating to Cloud-Native Application Architectures” (Matt Stine)

- “Domain-Driven Design: Tackling Complexity in the Heart of Software” (Eric Evans)

- “Microservices vs. Monolithic Architectures: the Pros and Cons and Cloud Foundry Examples” (Sergey Sverchkov)

- “Continuous Integration” (Martin Fowler)

- “Continuous Delivery: Reliable Software Releases Through Build, Test, and Deployment Automation” (Jez Humble, David Farley)