2016–2017 Trends: Neo-AI and Neo-Luddism


Trend #8: Neo-AI

The past year witnessed a dramatic spike in artificial intelligence (AI) development. IBM has centered its entire strategy on Watson, its AI platform, and other vendors are making similar commitments.

Anyone familiar with the field knows that this is the third big AI cycle. The first two are well documented, and both ultimately failed because they lacked an effective approach to the problem and were limited by the hardware of their day.

Today, we seem to have enough hardware firepower to make the current AI wave something that will continue and not simply crash against the shore again. However, do we have an effective approach, even after all these years?

Don’t believe everything you read

I met AI pioneer Marvin Minsky, along with a few of the people who followed him around, at a Microsoft CD-ROM conference (yes, there was such a thing) in Seattle in the late 1980s. He had recently published his book, The Society of Mind, which envisions the human mind as a collection of agents, each doing a small job; together, these jobs add up to human cognition and intelligence.

I still find it unconvincing, pretty weak broth compared with the philosophical work on the mind and how it works by Kant and his successors since the 18th century, and (of course) with the work of Freud and all who have followed him. However, Marvin won a Turing Award and many other honors, so what do I know?

Early efforts were arrogant

Marvin Minsky was part of that first AI wave, which was launched at a conference at Dartmouth College in 1956. The proposal for that conference made the pronouncement that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

This appears comically arrogant on at least two grounds, and should have appeared so in 1956.

The first ground is that intelligence (or human intelligence, which is what is meant here) is a complex convergence of stimuli that defies being broken into simple “aspects” that could then be simulated and reassembled into anything resembling a human mind.

The second ground is the question of why anyone would consider human intelligence worth replicating in the first place. The machines of the Industrial Revolution, and even the simple tools humans built a quarter million years ago, were made to multiply (often by orders of magnitude) the physical abilities of humans.

Calculators and the data-processing machines of the past 60 years do the same for simple mental functions. Why, then, would we want AI simply to replicate our own well-known cognitive limitations? And following that thought, how dangerous would it be to try to exceed our cognition by orders of magnitude?

A more useful approach today

Fortunately, it looks as if today’s AI and its siblings, machine learning (ML) and deep learning (DL), are focused on specific elements of what is often called “cognition.” IBM, whose Deep Blue program has since metamorphosed into Watson, is the prime example.

I can say with confidence that I was never impressed by Deep Blue finally beating Garry Kasparov at chess, or by Watson winning Jeopardy! I regard those as cheap circus tricks, accomplished through a combination of brute force and smoke and mirrors. I’m not impressed by Watson talking to Bob Dylan either. I would be impressed if Watson wrote a song equal to “Blowin’ in the Wind.”

The good news is that the real work of Watson is less stagey and more useful. IBM acquired the Weather Company’s massive data resources so that Watson can glean meteorological insights directly, insights that could benefit energy, transportation, and agriculture, and even manufacturing, retail, and other major economic sectors. Watson is also being put to work on the full range of medical imaging and the diagnoses that follow from it.

TensorFlow enters the picture

Watson is not the only AI game in town. The open-source TensorFlow library, for example, was released by its developer, Google, in late 2015. TensorFlow can be used to build simple neural networks and, beyond those, more complex recurrent and convolutional networks. At a recent TensorFlow webinar, Dipendra Jha of Northwestern University provided numerous code examples of the technology in action.

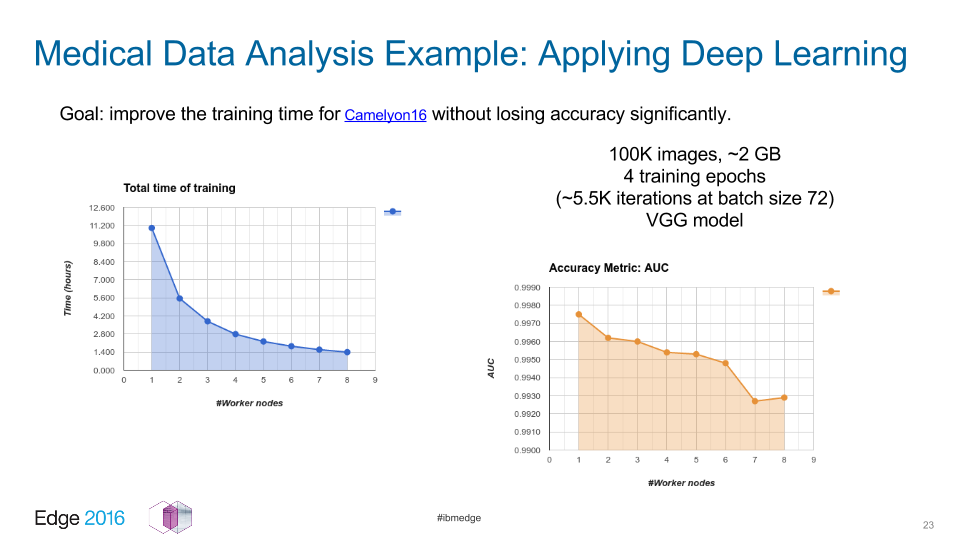

Another TensorFlow use case was presented in 2016 at the IBM Edge conference in Las Vegas by IBM engineer Indrajit Poddar and Altoros CTO and co-founder Andrei Yurkevich. This prototype project applied diagnostic analysis to 100,000 high-resolution medical images.

These specific use cases offer hope that AI and its siblings can indeed multiply the most useful aspects of human cognition, without the vainglorious idea of creating artificial humans. However, the topic still causes fear and loathing for many people, especially when business leaders tout AI’s ability to replace humans. This leads to the next big topic on this list…

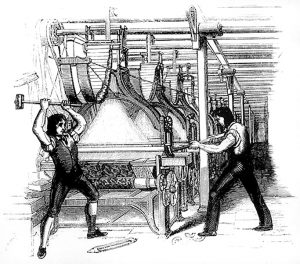

Trend #7: Neo-Luddism

Source: learnhistory.org.uk

The Luddites have been misunderstood. A phenomenon of the Industrial Revolution in 19th-century England, the Luddites were textile workers who weren’t against technology per se, but against the specific way industrialists were using it to change and eliminate jobs.

By this definition, Bernie Sanders would be considered a Luddite, as would Donald Trump, Hillary Clinton, and Barack Obama. All blame technological progress for the declining value of industrialized manual labor, and all have at one time or another promised to restore the glory days of the great industrial heartland of the United States.

Donald Trump’s recent victory in the 2016 US presidential election rested on slim majorities of modern-day Luddites in this heartland. Similar sentiments drove the Brexit movement in the UK.

Industrial wasteland

I grew up in and now live in Northern Illinois, an area that started showing disturbing signs of decline as far back as the 1970s and has lost 40% of its manufacturing jobs since 2000. The particular region in which I grew up gave Obama a slim majority in 2008 and 2012, but went almost 2 to 1 for Trump in 2016.

It’s a helpless feeling trying to effect change in an area like this. All of the economic development studies, workshops and seminars, community panels and photo ops with politicians almost invariably come to nothing.

A local steel mill once provided 3,000 jobs; it was replaced by a newer-technology mill that employs 10% of that number. A military proving ground that provided 4,000 jobs vanished in the base closings of the Clinton Administration, and nothing has replaced it in more than 20 years. Several small automotive parts manufacturers have been whittled down to a couple.

Meanwhile, agriculture in the county has shifted from approximately 1,600 family farms of 160 acres each (quarter-sections) to a few dozen partnerships and corporations owning thousands of acres apiece.

The population continues to decline. School districts have been consolidated, only to see student counts in the combined districts keep shrinking. And the routine discovery of meth labs in towns of 500 people illustrates a harsh despair in the land that simply was not there when I grew up, or even when my grandparents and parents lived through the Depression there.

The problem is not just rural

Continued urbanization may be a foregone conclusion, and there may be no saving the smaller towns. Still, the Trump vote extended well into the big cities. This is not apparent in Illinois (Hillary’s original home state), where Cook County (home to Chicago) dominated the statewide vote in typical fashion and went for Hillary.

Look a few miles north of where I live, to Wisconsin, and things come into focus. Outside the five urban counties containing Milwaukee and the Madison area, Obama carried 27 counties in 2012, compared with only 7 for Hillary in 2016. In Milwaukee County, Hillary’s margin of victory was 15,000 votes smaller than Obama’s had been in 2012. Trump took the state by 22,748 votes.

In other words, the shrinking Democratic margin in Milwaukee County alone equals about two-thirds of Trump’s statewide margin of victory. Trump received 29,000 fewer votes in the county than Romney had in 2012, but Hillary received a catastrophic 44,000 fewer votes there than Obama. Similar patterns are found in Michigan and Pennsylvania, the two other industrial states that swung the election.
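The arithmetic behind the two-thirds figure can be laid out explicitly. This sketch uses only the rounded Milwaukee County numbers quoted above (so the result is approximate) and writes O, R, C, and T for the county vote totals of Obama and Romney in 2012 and of Clinton and Trump in 2016; those symbols are introduced here purely for illustration.

```latex
\begin{aligned}
% Change in the Democratic margin from 2012 to 2016 in Milwaukee County
\Delta &= (O - R) - (C - T) = (O - C) - (R - T) \\
       &\approx 44{,}000 - 29{,}000 = 15{,}000 \\[4pt]
% Share of Trump's statewide margin (22,748 votes) that this change represents
\frac{\Delta}{22{,}748} &\approx \frac{15{,}000}{22{,}748} \approx 0.66
\end{aligned}
```

Regrouping the two margins shows why the 44,000 and 29,000 shortfalls net out to the 15,000-vote drop in the Democratic margin, and 0.66 is the "about two-thirds" share of the statewide result.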

So, what’s the answer?

Perhaps manufacturing under the rubrics of the Industrial Internet of Things and Industry 4.0 can rejuvenate the region. Two things are needed:

- A consistent commitment to equipping young people for the challenges of neo-manufacturing. This means strong math skills, extending to statistics and graphs, and strong language skills, to handle the complexity and nuance required to communicate with these new machines.

- A strong work ethic, grounded in the understanding that there is no job or career success without a long, hard pull.

The flip side of this notion is the need for honest answers from employers. Is there really an intention to provide well-paid jobs for large numbers of people? It’s understood that much labor can be accomplished outside of North America at a fraction of local costs. Is there a limit to that thinking; that is, at what point do job skills merit high pay? Or are we really in an unending race to the bottom across all job skills and positions?

Next up: Blockchain Proliferation and the ZettaStructure.