Machine Learning Constitutes 65% of Kubernetes Workloads

How containers changed the software life cycle

Prior to containerization, software code was traditionally developed in specific computing environments. This meant that transferring code to a new environment was challenging and often resulted in bugs and errors. Containerization removed this problem by putting software code along with its configuration files, libraries, and dependencies into a single package referred to as a container. The container is then abstracted, making it portable and able to run on any platform.

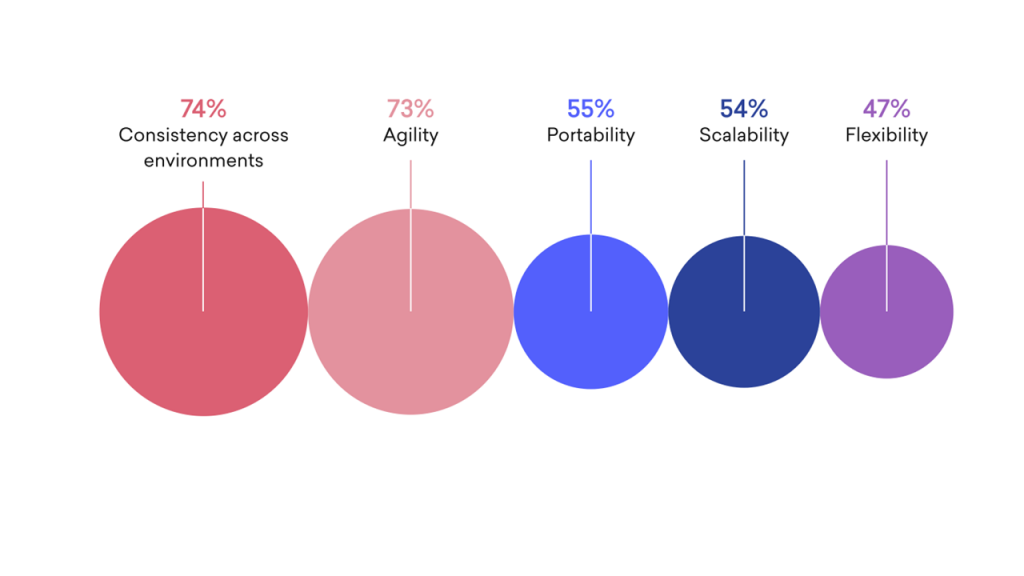

Besides portability, many organizations these days use containers for a variety of reasons, including:

- Agility. A container can be deployed quickly, improving the rate at which teams can move code from development to production.

- Resilience. By breaking up applications into fine-grained components, developers can deploy new functions or modify existing ones without affecting other functions.

- Scalability. Unlike virtual machines, which require starting an operating system before any work can be processed, containers are started and stopped within a running operating system, meaning they can be deployed or removed in seconds.

- Consistency. Regardless of what is in a container, the method for building, deploying, and upgrading containers is exactly the same.

Why organizations deploy workloads on containers (Source: Red Hat)

As deployments grow, managing an increasing number of containers becomes more complex, especially as companies adopt multicloud strategies. To streamline deployment and management at scale, container orchestration solutions like Kubernetes have become a necessity. (Recently, we’ve covered how Airbnb deploys 125,000 times per year with Kubernetes and how Lyft orchestrates 300,000 containers.)

Container orchestration solutions provide several benefits that help with management and deployment, including:

- automated updates and upgrades

- reduced errors with development and life cycle management

- faster and simplified app deployment

- reduced reliance on large teams

Containers have drastically changed the life cycle of software development. In this blog post, we analyze the reports from industry leaders to gain insights into the state of containerization and Kubernetes in the cloud-native space.

Adoption grew by 67% in 2021

According to a recent report from the Cloud Native Computing Foundation (CNCF), the cloud-native developer population grew to an estimated 6.8 million in 2021. However, the number of back-end developers involved in the cloud-native space decreased by 3%.

Despite the slight decrease, the adoption rate of containers among back-end developers remains largely the same. This could mean that containerization has reached a plateau. Kubernetes, on the other hand, experienced continued growth: in 2021, 5.6 million developers used Kubernetes, demonstrating a 67% increase from the 3.9 million developers in 2020.

This growth in Kubernetes adoption is echoed by other sources. A report on the state of Kubernetes security by Red Hat found that 88% of respondents used Kubernetes for container orchestration. A report by D2IQ on Kubernetes adoption by enterprises found that 75% of organizations already use the technology.

Kubernetes adoption has room for growth (Source: CNCF)

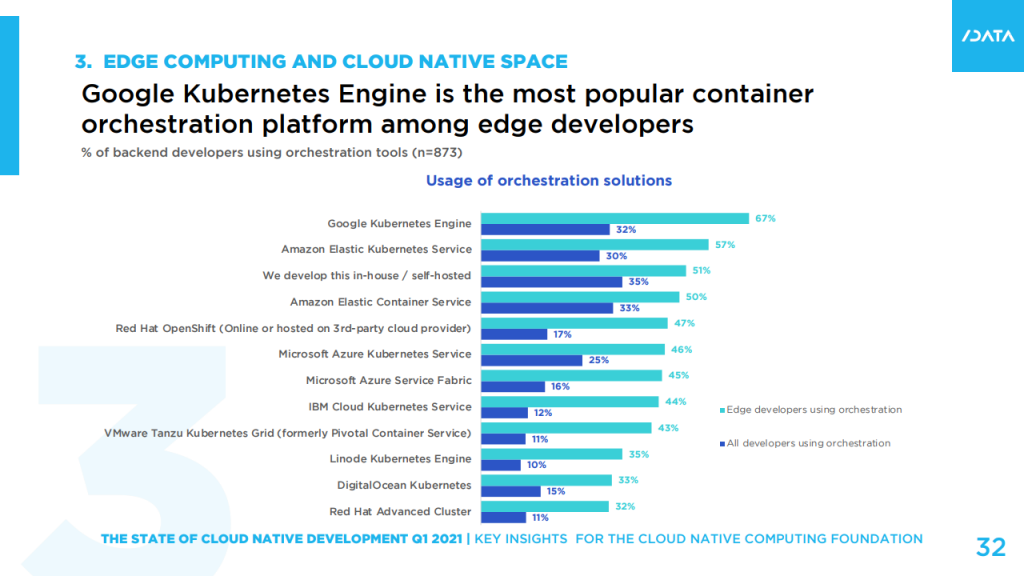

Looking at container orchestration, the most adopted platforms were:

- self-hosted solutions (35%)

- Amazon Elastic Container Service (33%)

- Google Kubernetes Engine (32%)

- Amazon Elastic Kubernetes Service (30%)

Usage across container orchestration solutions (Source: CNCF)

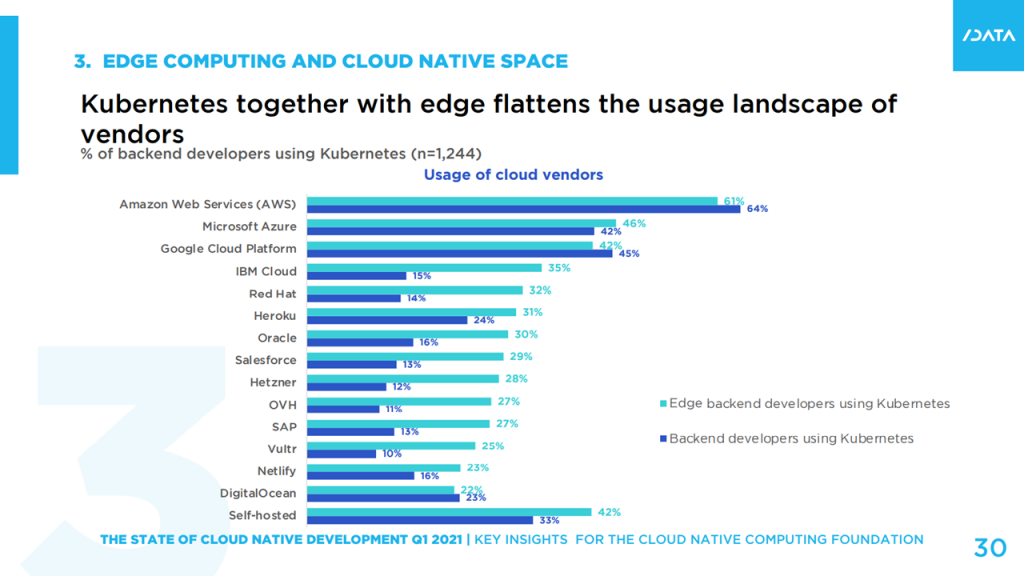

The CNCF report also highlighted the cloud vendors that Kubernetes users preferred. The top three cloud service providers among back-end developers were:

- Amazon Web Services (64%)

- Google Cloud Platform (45%)

- Microsoft Azure (42%)

Usage across vendors (Source: CNCF)


Most common use cases

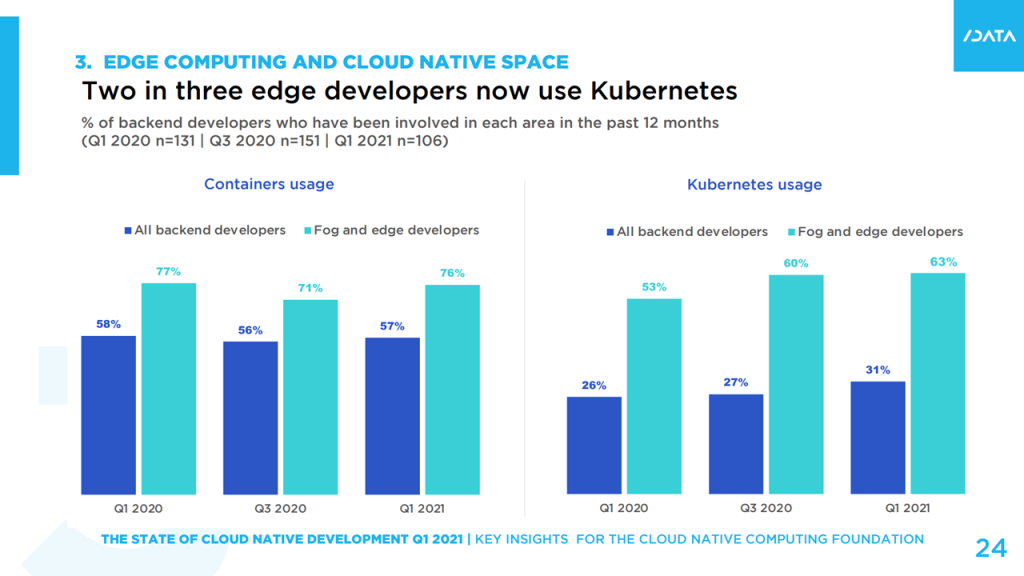

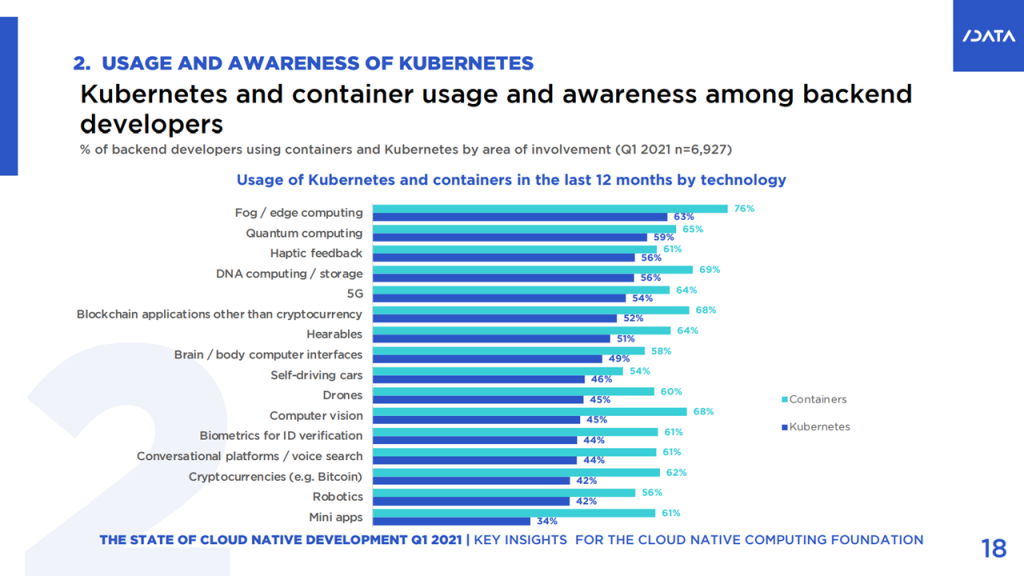

With widespread implementation, Kubernetes is being used across various sectors. In particular, edge computing had the highest usage rates at 63%. (For instance, you can read about how Osaka University utilizes Kubernetes and machine learning to reduce power consumption in edge computing.)

Kubernetes and containers usage by domain (Source: CNCF)

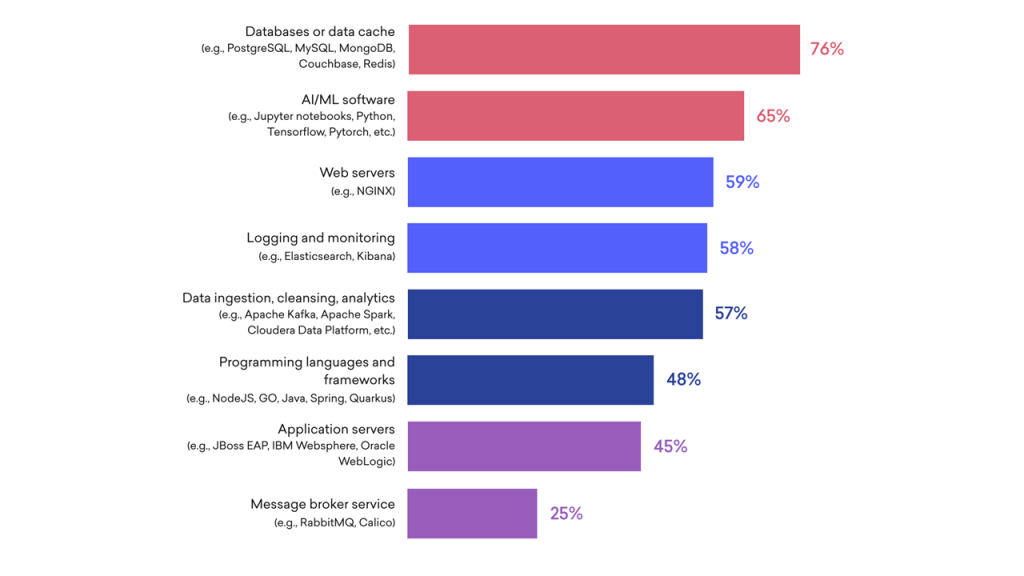

Apart from edge computing, Kubernetes is gaining popularity across other workloads. A report on the state of container workloads by Red Hat found that 76% of the respondents deployed database or data cache systems on Kubernetes and 65% used the technology for artificial intelligence and machine learning workloads. Those were closely followed by web servers at 59%, logging and monitoring solutions at 58%, and data ingestion/cleansing/analytics at 56%.

Major types of workloads and systems containerized with Kubernetes (Source: Red Hat)

A report from D2IQ had similar findings: many organizations were using Kubernetes to run machine learning workloads. It seems reasonable to assume that Kubernetes is gradually becoming the platform of choice for artificial intelligence and machine learning workloads. (In our blog post, we detail how Shell builds 10,000 machine learning models daily using Kubernetes.) The top workloads in the D2IQ report were:

- machine learning (43%)

- application build structures (40%)

- distributed data services (33%)

Production use of Kubernetes is also on an upward trend. The D2IQ report found that, on average, an organization runs 53% of all its projects in production on Kubernetes, an increase from 42% in 2020.

Additionally, the time it takes to move Kubernetes platforms to production environments is getting shorter. Of the organizations surveyed, 77% reported that it took six months or less to do so, and the average was four and a half months. As to where Kubernetes is being run, the largest share of organizations (47%) are opting for hybrid environments.

Most organizations run containers on multicloud (Source: Red Hat)


Challenges during adoption and in production

While more and more organizations are adopting Kubernetes, 100% of D2IQ’s survey respondents said they had experienced challenges along the way. The top three were:

- the lack of IT resources (36%)

- effective scaling (34%)

- keeping up with the rapid advancement of underlying technologies (33%)

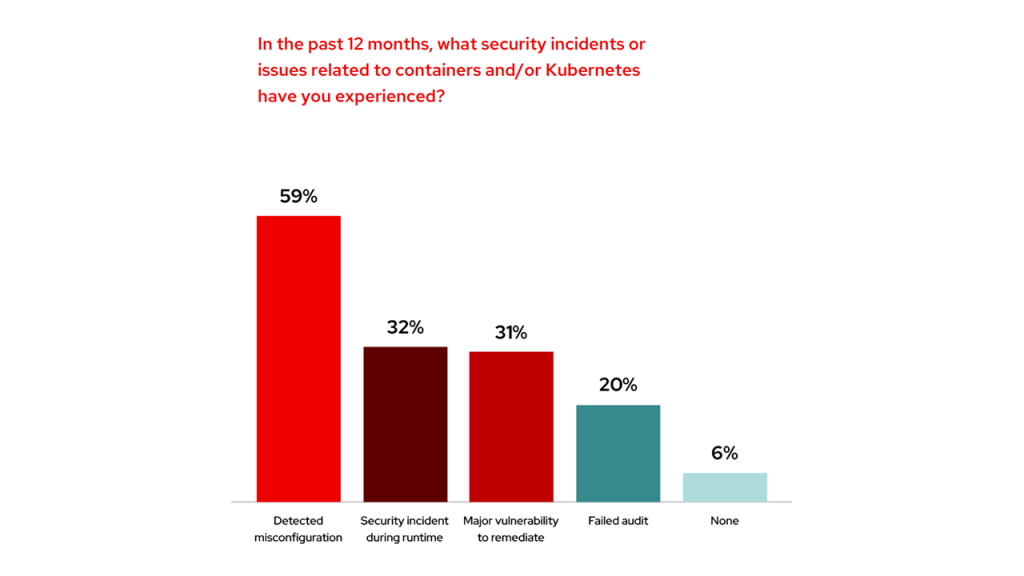

When moving Kubernetes workloads to production environments, the three major challenges become security, production environment reliability, and difficulties in troubleshooting. The Red Hat report further emphasizes security as a top challenge, with 94% of survey respondents having experienced a security incident in their Kubernetes and container environments in the past 12 months.

Security incidents in the last 12 months (Source: Red Hat)

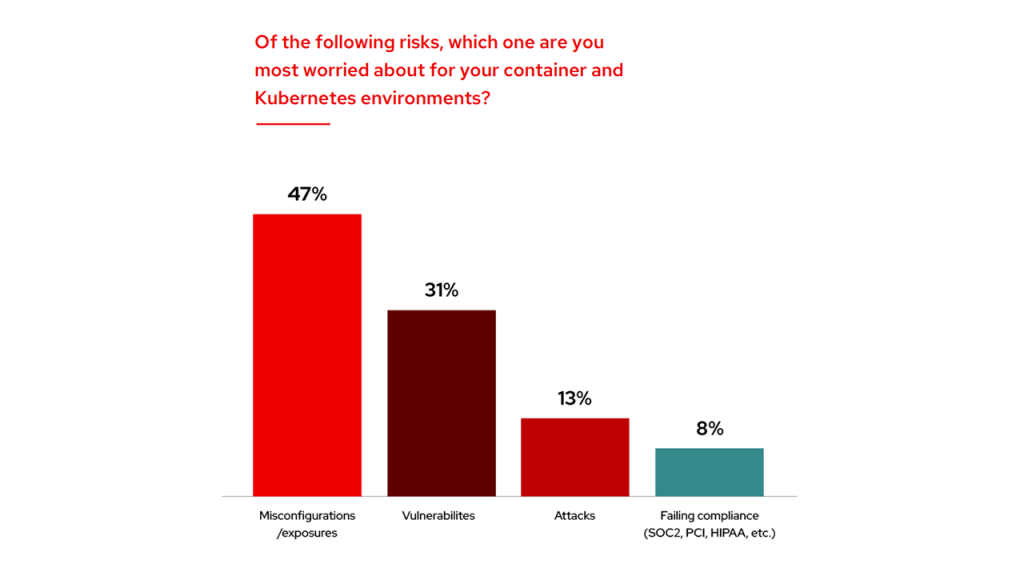

Looking at specific security concerns, the largest share of respondents (47%) are worried about exposures due to misconfigurations in their Kubernetes environments. This is followed by vulnerabilities at 31% and attacks at 13%.

Most common security concerns (Source: Red Hat)


Kubernetes adoption recommendations

The challenges associated with implementing Kubernetes into existing ecosystems will vary from case to case. Still, there are a few general recommendations that can help any organization considering the technology.

Roll out in increments. Start adoption with a single process and a single team. Incrementally roll out Kubernetes to the rest of the organization, learning and iterating along the way. This minimizes the risk of downtime and other errors, while enabling early-adopter teams to spread best practices throughout the organization. This very approach worked well for GitLab, for example.

Learn from other adopters. Use proven techniques and best practices from early adopters that have successfully integrated Kubernetes into their business processes and are now reaping the benefits. Once an organization becomes more familiar with container orchestration, adjusting and reconfiguring it becomes more manageable.

Follow security best practices. Out-of-the-box Kubernetes is optimized to make things work, but not necessarily to work in a secure manner. Similar to any other software, there are a number of guidelines that can help to secure Kubernetes deployments. (In this blog post, we share best practices and compliance checks essential to preventing misconfigurations and securing clusters.)
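To make the idea of a misconfiguration check more concrete, here is a minimal sketch in Python. It is an illustration only, not a method taken from any of the reports: the function name `find_misconfigurations` and the choice of checks are our own assumptions. It inspects a pod spec supplied as a plain dictionary and looks at a few standard Kubernetes fields (`hostNetwork`, `securityContext.privileged`, `securityContext.runAsNonRoot`, and `resources.limits`).

```python
from typing import Any, Dict, List


def find_misconfigurations(pod_spec: Dict[str, Any]) -> List[str]:
    """Return findings for a pod spec given as a plain dict.

    Hypothetical helper for illustration; the checks are a small,
    non-exhaustive sample of commonly recommended settings.
    """
    findings: List[str] = []
    pod_security = pod_spec.get("securityContext") or {}

    if pod_spec.get("hostNetwork"):
        findings.append("pod: uses the host network namespace")

    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        security = container.get("securityContext") or {}
        resources = container.get("resources") or {}

        if security.get("privileged"):
            findings.append(f"{name}: runs in privileged mode")

        # A container-level runAsNonRoot overrides the pod-level value.
        run_as_non_root = security.get(
            "runAsNonRoot", pod_security.get("runAsNonRoot")
        )
        if not run_as_non_root:
            findings.append(f"{name}: runAsNonRoot is not enabled")

        if not resources.get("limits"):
            findings.append(f"{name}: no resource limits set")

    return findings


# Example pod spec with made-up container names and images.
example_spec = {
    "containers": [
        {
            "name": "web",
            "image": "nginx",
            "securityContext": {"privileged": True},
        },
        {
            "name": "api",
            "image": "example/api",
            "securityContext": {"runAsNonRoot": True},
            "resources": {"limits": {"memory": "256Mi"}},
        },
    ]
}

for finding in find_misconfigurations(example_spec):
    print(finding)
```

In practice, checks like these are usually enforced by dedicated policy and scanning tools rather than hand-written scripts; the sketch only shows the kind of settings such tools examine.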

Consider using production-ready solutions. Given the complexity involved in building the platform from scratch, using managed solutions from industry-leading providers (Microsoft, Amazon, or Google) enables organizations to get started quickly without having to deal with the underlying technology. Ideally, the solution should strike a balance between out-of-the-box features and customizability.

Monitor your deployments. Among other technical must-haves, it is crucial to stay aware of the health of your deployments. Identify which metrics to gather so that you can catch failures early, facilitate auditing, and improve performance analysis.
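As one example of a health metric, the replica counts that Kubernetes reports for a Deployment can be reduced to a simple status. The sketch below is a hedged illustration under our own assumptions: the function name `deployment_health` and the four status labels are hypothetical, and the Deployment is passed in as a plain dictionary using the standard `spec.replicas`, `status.availableReplicas`, and `status.updatedReplicas` fields.

```python
from typing import Any, Dict


def deployment_health(deployment: Dict[str, Any]) -> str:
    """Classify a Deployment (as a plain dict) by its replica counts.

    Hypothetical helper for illustration; the labels are our own.
    """
    # Kubernetes defaults spec.replicas to 1 when it is omitted.
    desired = deployment.get("spec", {}).get("replicas", 1)
    status = deployment.get("status", {})
    available = status.get("availableReplicas", 0)
    updated = status.get("updatedReplicas", 0)

    if desired > 0 and available == 0:
        return "down"
    if available < desired:
        return "degraded"
    if updated < desired:
        return "rolling out"
    return "healthy"


# Made-up sample: three replicas requested, only one available.
sample = {
    "spec": {"replicas": 3},
    "status": {"availableReplicas": 1, "updatedReplicas": 3},
}
print(deployment_health(sample))
```

A real setup would typically collect such figures continuously through a monitoring stack and alert on them; this sketch only shows how raw replica counts can be turned into a signal.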

In the previous year, Kubernetes played a vital role in the digital transformation of multiple organizations. As the container orchestrator matures, we can expect a similar trend for 2022, especially for enterprises focusing on edge computing and machine learning.

Further reading

- Shell Builds 10,000 AI Models on Kubernetes in Less than a Day

- Osaka University Cuts Power Consumption by 13% with Kubernetes and AI

- GitLab Autoscales with Kubernetes and Monitors 2.8M Samples per Second

With assistance from Yaroslav Goortovoi.