K8s Meets PCF: Pivotal Container Service from Different Perspectives

PKS version 1.3

A year ago, Pivotal released Pivotal Container Service (PKS), a tool enabling operators to deploy, run, and provision enterprise-grade Kubernetes clusters. At the time, we provided an overview of PKS v1.0 based on hands-on experience with the service.

Pivotal Container Service v1.3 was released recently, adding the latest stable version of Kubernetes, support for Microsoft Azure and NSX-T, and some other features. Versions 1.0 and 1.1 are no longer supported and have already been removed from the download options. Version 1.2.6 is still fully supported and can be used.

PKS offers different value to different project roles, so people in these roles ask a variety of questions about the service. Therefore, we’ve decided to approach PKS from different perspectives in an attempt to answer the questions that may concern project managers, cloud admins, platform operators, DevOps engineers, and business logic developers.

High-level

From a project manager perspective, PKS is Pivotal’s way to deploy and run Kubernetes clusters. The service provides rapid and simple provisioning of Kubernetes clusters, which serve as a platform for containerized workloads, both stateful and stateless. PKS can be employed anywhere Kubernetes is useful: as a first step toward microservices, as a way to run containerized applications without additional effort, as a home for stateful services, etc. Pivotal Container Service is the company’s production-grade offering built around three key “S” values for an enterprise: security, simplicity, and support.

PKS from a project manager’s perspective

Configuration and administration

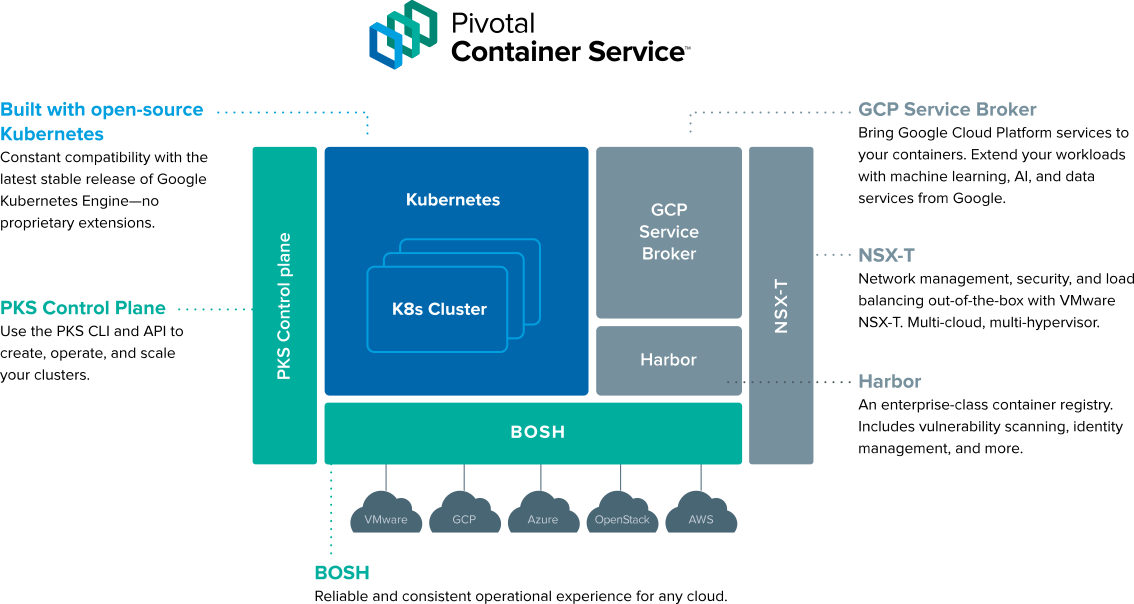

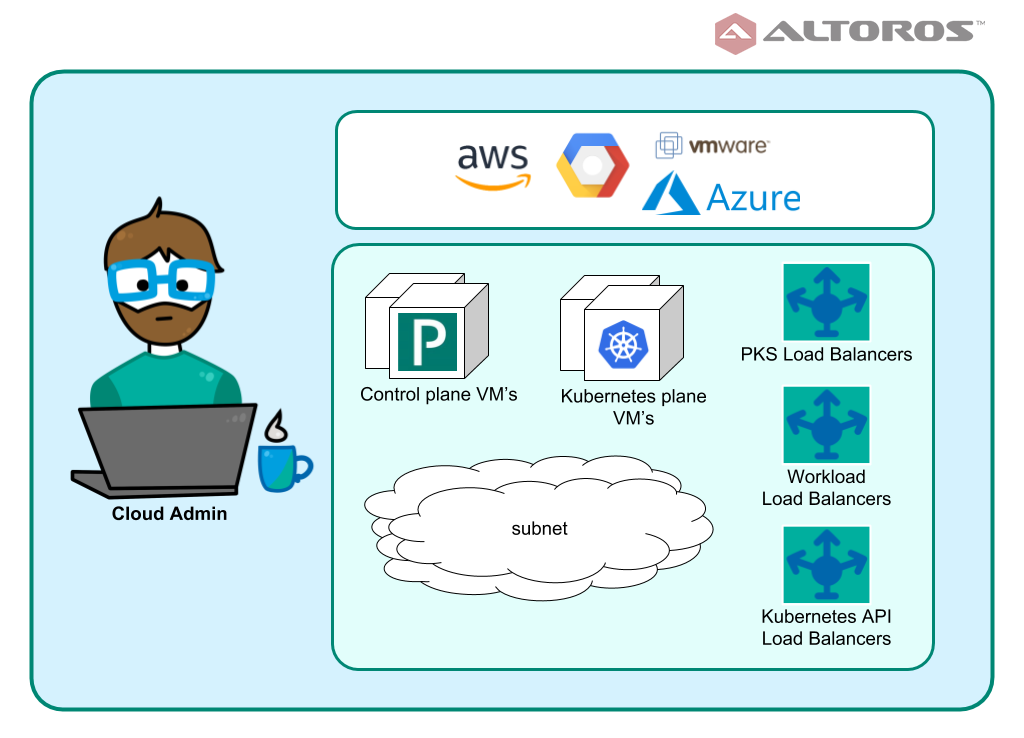

From a cloud administrator perspective, like any other system or project, PKS is a set of network and compute resources. It is available on the major public clouds, namely Google Cloud Platform, AWS (supported from v1.2), and Microsoft Azure (supported from v1.3), as well as on VMware vSphere (either with NSX-T or without it). Network setup and routing schema are flexible and vary depending on deployment needs.

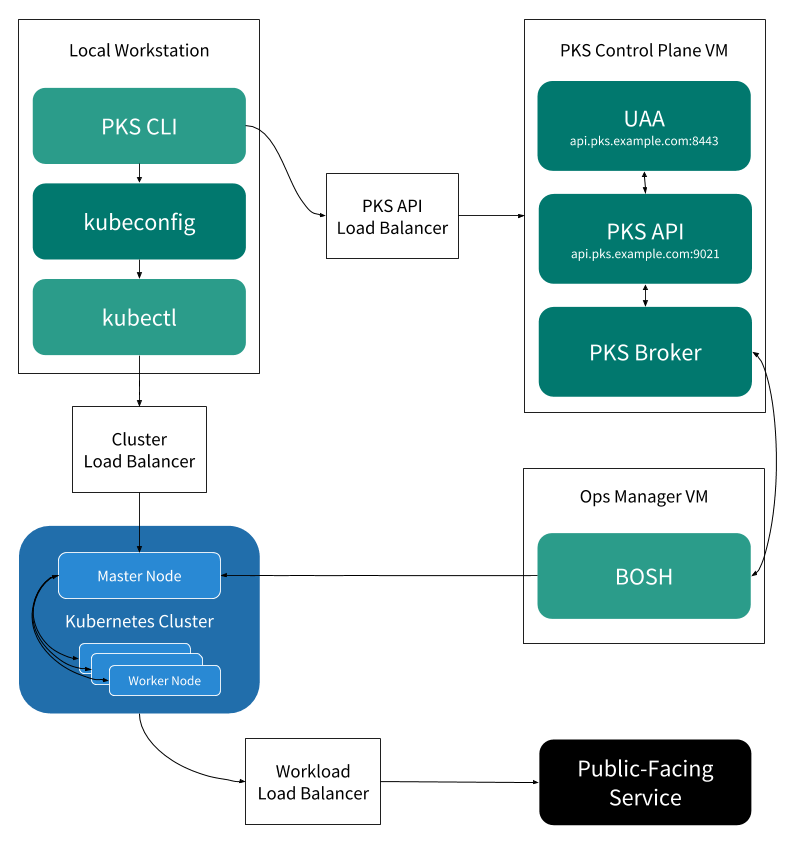

The architecture of PKS (Image credit)

To create a PKS instance on Google Cloud Platform, AWS, or Microsoft Azure, a cloud admin manually sets up and configures networking and load balancing. A typical PKS installation will need three networks (pks_subnet, public_subnet, and services_subnet) with a multi-AZ setup to enable high availability.
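To make the three-network layout more concrete, here is a minimal Python sketch that carves non-overlapping address ranges for pks_subnet, public_subnet, and services_subnet across availability zones. The CIDR block, the AZ names, the /24 size, and the one-subnet-per-AZ layout are illustrative assumptions, not values prescribed by PKS; actual requirements depend on the chosen cloud.

```python
import ipaddress

# Hypothetical values: the address block and AZ names are placeholders.
VPC_CIDR = "10.0.0.0/16"
AZS = ["az-1", "az-2", "az-3"]
SUBNET_ROLES = ["pks_subnet", "public_subnet", "services_subnet"]


def plan_subnets(vpc_cidr, azs, roles, new_prefix=24):
    """Assign one non-overlapping subnet to every (role, AZ) pair."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = {}
    for role in roles:
        for az in azs:
            # Each call to next() yields the next free block of the parent range.
            plan[(role, az)] = next(blocks)
    return plan


if __name__ == "__main__":
    for (role, az), net in plan_subnets(VPC_CIDR, AZS, SUBNET_ROLES).items():
        print(f"{role:16} {az:5} {net}")
```

A plan like this can be reviewed for overlaps before any cloud resources are created.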

There are three types of load balancers in a PKS deployment:

- PKS API Load Balancer is needed to connect to the PKS API responsible for deploying Kubernetes clusters.

- Cluster Load Balancer establishes traffic routing to a Kubernetes cluster. In the case of VMware vSphere, the configuration of load balancing is automated through the integrated NSX-T solution, which manages traffic routing via network address translation (NAT).

- Workload Load Balancer helps to access containers that run on a Kubernetes cluster. On GCP, AWS, or VMware vSphere integrated with NSX-T, a Kubernetes master can create these load balancers automatically.

An example of a PKS networking schema (Image credit)

By default, PKS offers three templates for service plan configuration. However, these templates are not pre-sized, so a platform operator has to manually assign resources for Kubernetes clusters. In comparable services, such templates usually come predefined based on the average amount of resources needed for “small,” “medium,” or “large” deployments.

With manual resource assignment, an operator relies solely on experience and intuition, so clusters may end up either under- or over-provisioned. The resources will be created and managed by BOSH, with no additional action needed from a cloud administrator, but quotas, budget, and the amount of compute resources should be considered.
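As a rough aid for this kind of sizing, the sketch below estimates the compute footprint of a plan and checks it against a cloud quota. The plan names, node counts, VM sizes, and quota figures are all hypothetical, and the model assumes masters and workers use the same VM size, which a real plan does not require.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """A hypothetical service plan; all numbers are illustrative."""
    name: str
    masters: int
    workers: int
    vcpus_per_node: int
    ram_gb_per_node: int

    def total_vcpus(self) -> int:
        return (self.masters + self.workers) * self.vcpus_per_node

    def total_ram_gb(self) -> int:
        return (self.masters + self.workers) * self.ram_gb_per_node


def fits_quota(plan: Plan, clusters: int, vcpu_quota: int, ram_gb_quota: int) -> bool:
    """Return True if `clusters` clusters of this plan stay within both quotas."""
    return (plan.total_vcpus() * clusters <= vcpu_quota
            and plan.total_ram_gb() * clusters <= ram_gb_quota)


if __name__ == "__main__":
    small = Plan("small", masters=1, workers=3, vcpus_per_node=2, ram_gb_per_node=8)
    medium = Plan("medium", masters=3, workers=5, vcpus_per_node=4, ram_gb_per_node=16)
    for plan in (small, medium):
        ok = fits_quota(plan, clusters=4, vcpu_quota=48, ram_gb_quota=128)
        print(plan.name, plan.total_vcpus(), plan.total_ram_gb(), ok)
```

Running such a check before defining plans gives the operator a number to compare against quotas and budget instead of relying on intuition alone.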

Pivotal provides some automation for managing PKS-related cloud resources. For AWS, Google Cloud Platform, and Microsoft Azure, Terraform templates are available through the Pivotal Network to help with networking configuration and BOSH provisioning.

PKS from a cloud administrator’s perspective

Operations

From a platform operator perspective, PKS is a Pivotal tile that can be downloaded, configured, and deployed via Ops Manager. Operators prefer secure and easy-to-use systems, so several sub-perspectives can be investigated in this regard.

Networking. Using NSX-T, PKS allows for managing software-defined virtual networks and ensures the security of a Kubernetes network. Because NSX-T is tied to vSphere, it is not available on Google Cloud Platform; Flannel can be used on GCP, AWS, or Azure instead.

Security. For Pivotal CF, it is recommended to install PKS on a separate instance of Ops Manager to ensure better security. However, this means that operators will have to manage a number of separate environments, which increases operational expenses. Both minor and major versions of PKS ship with updated stemcells that include the latest security patches and updated libraries.

The PKS tile has an integrated UAA server that can be employed to manage user access. Access can be granted to internal UAA users if the PKS infrastructure is not integrated into a corporate ecosystem. Alternatively, UAA can be integrated with LDAP to grant access to a specified group of users.

PKS defines two UAA scopes for cluster access:

- pks.clusters.admin: users with this scope have full access to all the clusters.

- pks.clusters.manage: users with this scope can only access clusters that they’ve created.
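The difference between the two scopes can be illustrated with a small Python model. This is only a sketch of the access rule described above, not the actual PKS or UAA implementation; the function name and the cluster-to-creator mapping are hypothetical.

```python
ADMIN_SCOPE = "pks.clusters.admin"
MANAGE_SCOPE = "pks.clusters.manage"


def visible_clusters(user, scopes, clusters):
    """Return the cluster names a user may access.

    `clusters` maps a cluster name to the user who created it
    (a hypothetical structure used only for this illustration).
    """
    if ADMIN_SCOPE in scopes:
        # Admins see every cluster regardless of who created it.
        return sorted(clusters)
    if MANAGE_SCOPE in scopes:
        # Managers see only the clusters they created themselves.
        return sorted(name for name, owner in clusters.items() if owner == user)
    return []


if __name__ == "__main__":
    clusters = {"prod": "alice", "dev": "bob"}
    print(visible_clusters("bob", {MANAGE_SCOPE}, clusters))
    print(visible_clusters("carol", {ADMIN_SCOPE}, clusters))
```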

You can check out a demo on creating and managing users in PKS UAA via the UAA CLI in our previous PKS evaluation.

PKS is also integrated with Harbor, an enterprise-grade container registry that enables vulnerability scanning, identity management, activity auditing, etc.

Automation. Installation and upgrades of the PKS tile can be automated via the Ops Manager API or CLI tooling, in addition to the web UI. CI/CD tools such as Concourse can be used to keep a deployment up to date and to minimize effort if an environment has to be cloned or recreated.

From version 1.2, PKS has a multi-master setup, so Kubernetes clusters provided by PKS can be highly available.
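To see why the number of masters matters for availability, consider the majority rule used by etcd, the key-value store behind the Kubernetes control plane. The sketch below assumes that each master node hosts one etcd member, which is a common layout but should be verified for a particular deployment.

```python
def quorum(masters: int) -> int:
    """Smallest majority of a cluster with `masters` members."""
    return masters // 2 + 1


def tolerated_failures(masters: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return masters - quorum(masters)


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(f"masters={n} quorum={quorum(n)} tolerated_failures={tolerated_failures(n)}")
```

Under this rule, a single master tolerates no failures, three masters tolerate one, and five tolerate two, which is why odd master counts are the usual choice.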

Integrated products and services. Apart from NSX-T and Harbor, PKS is also integrated with vRealize Operations Manager, vRealize Log Insight, and Wavefront to enable a fully fledged on-premises deployment for VMware vSphere.

PKS from an operator’s perspective

Development

From a DevOps engineer or platform developer perspective, PKS is an API server closely integrated with UAA and an on-demand service broker, which can be accessed via the PKS CLI. This service broker will provide an on-demand Kubernetes cluster, the capacity of which is dictated by a selected service plan.

This cluster can be accessed and manipulated like any other Kubernetes cluster via the kubectl CLI, if appropriate permissions are set up by an operator. PKS makes use of a stable Kubernetes version, and all the features of this version can be transparently engaged. The PKS CLI can be used to provision, deprovision, and scale clusters according to daily needs. Check out our demo on how to use the PKS CLI to get cluster credentials.
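The cluster lifecycle described above can be scripted. The following Python sketch only builds the PKS CLI command lines and prints them; it does not execute anything. The subcommands and flags follow the PKS 1.x CLI as we understand it and should be checked against `pks --help` for the installed version. The cluster name, hostname, and plan name are placeholders.

```python
import shlex


def create_cluster_cmd(name, external_hostname, plan, num_nodes=None):
    """Command line for provisioning a cluster from a service plan."""
    cmd = ["pks", "create-cluster", name,
           "--external-hostname", external_hostname,
           "--plan", plan]
    if num_nodes is not None:
        cmd += ["--num-nodes", str(num_nodes)]
    return cmd


def resize_cmd(name, num_nodes):
    """Command line for scaling the worker nodes of a cluster."""
    return ["pks", "resize", name, "--num-nodes", str(num_nodes)]


def get_credentials_cmd(name):
    """Command line that fetches credentials and sets the kubectl context."""
    return ["pks", "get-credentials", name]


def delete_cluster_cmd(name):
    """Command line for deprovisioning a cluster."""
    return ["pks", "delete-cluster", name]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    steps = [
        create_cluster_cmd("demo", "demo.example.com", "small", num_nodes=3),
        get_credentials_cmd("demo"),
        resize_cmd("demo", 5),
        delete_cluster_cmd("demo"),
    ]
    for step in steps:
        print(shlex.join(step))
```

To actually run a step, the argument list can be passed to `subprocess.run(step, check=True)` after logging in with the PKS CLI.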

PKS supports CredHub to manage credential generation, storage, and access in a secure manner. It is also possible to use Helm, a package manager, for Kubernetes apps running on Pivotal Container Service.

PKS from a platform developer’s perspective

From the perspective of a traditional business logic developer, PKS is transparent. Developers are able to create services ready for containerization with no need to worry about an underlying platform. If the services developed are containerized, scalable, and compliant with the requirements of a container orchestrator, they can be easily run on PKS.

PKS from the perspective of a business logic developer

Conclusions

Here, we try to summarize what perks an installation of PKS provides for a Kubernetes cluster.

Reliability. PKS relies on BOSH and Ops Manager for deploying Kubernetes clusters. BOSH provides self-healing, canary deployments, and some HA features by default.

Cloud independence. PKS provides a uniform experience on any supported cloud, so workloads and operational procedures can be migrated between clouds in a similar manner.

Automation. PKS is deployed as a tile to Ops Manager, which features an API for automating the deployment and upgrade cycle. Pivotal provides Terraform templates to help cloud administrators with resource automation.

Simplicity. Platform operators use such well-known tools as Ops Manager to deploy, configure, and update the PKS tile. Ops Manager provides an API and CLI tooling for automation, and a single PKS CLI command is enough to configure the kubectl context for accessing a cluster. Under the hood, PKS runs standard Kubernetes, so the kubectl CLI can be used freely.

Flexibility. Kubernetes clusters provided by PKS can be reconfigured. The number of master and worker nodes can be selected, and custom workloads are now supported as well. BOSH-deployed clusters enable OS-level access to Kubernetes VMs in case deep debugging is needed.

Support. Pivotal provides sufficient support for its services.

Security. PKS supports the current version of Kubernetes: once Kubernetes is upgraded, PKS follows with the changes, too. BOSH stemcells (used as a deployment base) have security patches in place and are frequently updated. PKS supports UAA, which can be integrated with an LDAP server to provide access to users with appropriate rights. NSX-T can help with granular ACL configuration for a vSphere deployment. Harbor integration enables vulnerability scanning and security review of container images.

Backup and recovery. Pivotal provides tools and processes to back up and restore a PKS installation. The BBR tool, which is widely used for Cloud Foundry backup and recovery, can be employed for PKS, as well.

Further reading

- Evaluating Pivotal Container Service for Kubernetes Clusters

- Enabling High Availability and Disaster Recovery in Kubernetes

- Kubernetes Cluster Ops: Options for Configuration Management

About the authors

Yauheni Kisialiou is a Cloud Foundry DevOps Engineer at Altoros. He specializes in enterprise cloud platform support and automation, using his 8 years of experience in cloud administration and building cloud systems. Yauheni is highly adept at creating BOSH releases and using BOSH for deploying Cloud Foundry and other services. Now, he is part of the team working on container orchestration enablement for a leading insurance company.

Andrei Krasnitski is a Cloud Foundry Engineer at Altoros. He has 5+ years of experience in cloud infrastructures and platforms automation, enterprise-level service integrations, and cloud environment troubleshooting. Andrei is building and supporting Cloud Foundry environments for Altoros’s enterprise customers.

with assistance from Sophia Turol and Alex Khizhniak.