Three Approaches to Testing and Validating TensorFlow Code

The rigors of testing

Validating TensorFlow code poses certain difficulties. First, you need to work through tensor values, which are multidimensional arrays of data. In TensorFlow, computations are represented as dataflow graphs, and these are not easy to reason about. Visualizing a graph with TensorBoard may help you understand how it is arranged. However, a single graph may have hundreds of nodes (units of computation) and edges (the data consumed or produced by a computation).

General-purpose debuggers may not be an option, because TensorFlow code has a nonlinear structure. In any case, a debugger needs a specification of what a given piece of code is supposed to do before it can distinguish right behavior from wrong.

At a recent TensorFlow meetup in London, Yi Wei of Prowler shared a set of techniques he uses to write and debug TensorFlow code without excessive effort.

Assertion techniques to consider

Generally, an assertion states that a predicate (a true-false expression) always holds at a given point in code execution. With assertions in the code, the system evaluates each predicate as it runs, and a false result signals a likely bug. This way, assertions help to check whether a system operates as intended.
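As a minimal illustration in plain Python (the `normalize` function and its inputs are hypothetical and are not taken from the talk), a predicate can guard both the input and the output of a computation:

```python
import math

def normalize(weights):
    """Scale non-negative weights so that they sum to 1."""
    total = sum(weights)
    # Precondition: the predicate must hold before we divide.
    assert total > 0, "weights must have a positive sum"
    probabilities = [w / total for w in weights]
    # Postcondition: a false predicate here would point to a bug above.
    assert math.isclose(sum(probabilities), 1.0), "probabilities must sum to 1"
    return probabilities

probabilities = normalize([2.0, 1.0, 1.0])
```

If either predicate evaluates to false, Python raises an `AssertionError` at the exact line where the assumption broke.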

The set of assertion techniques developed by Yi to validate the correctness of TensorFlow code includes:

- tensor shape assertions to validate data shapes

- tensor dependency assertions to validate graph structure

- tensor equation assertions to validate numerical calculations

Approach #1: shape assertions

To check the shape of a tensor, that is, the number of elements in each dimension, you can write an assertion. If the tensor's shape differs from the one you specified in the assertion, a violation is raised.

“It sounds like a stupid child, but once you start writing those things, oh boy, you’ll realize how often you’re wrong about the assumptions of the shapes. And the TensorFlow broadcasting mechanism is just going to hide all these problems and pretend the code is going to work well.” —Yi Wei, Prowler
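To see how broadcasting can hide a wrong shape assumption, consider the sketch below. It uses NumPy rather than TensorFlow, on the assumption that TensorFlow broadcasting follows the same rules. The arrays and the `checked_error` helper are hypothetical.

```python
import numpy as np

batch_size = 4

# Hypothetical values: predictions were left as a column, targets as a flat vector.
prediction = np.zeros((batch_size, 1))
target = np.ones(batch_size)

# Broadcasting silently expands (4, 1) - (4,) to (4, 4) instead of failing.
error = prediction - target
silent_shape = error.shape

def checked_error(prediction, target, batch_size):
    """Compute the element-wise error only if both shapes match the assumption."""
    assert prediction.shape == (batch_size,), prediction.shape
    assert target.shape == (batch_size,), target.shape
    return prediction - target

# With the assertions in place, the mismatch is reported right away.
try:
    checked_error(prediction, target, batch_size)
    caught = False
except AssertionError:
    caught = True

# Once the column is flattened, the shapes agree and the check passes.
fixed_error = checked_error(prediction.reshape(batch_size), target, batch_size)
```

Without the assertions, the subtraction succeeds and yields a matrix where a vector was expected, so any loss computed from it would be averaged over the wrong set of elements.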

You can make use of the assertion sample below.

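    import tensorflow as tf  # the sample uses the TensorFlow 1.x API

    # Fail early if a tensor's shape differs from the shape the algorithm assumes.
    prediction_tensor = q_function.output_tensor
    assert prediction_tensor.shape.as_list() == [batch_size, action_dimension]

    target_tensor = reward_tensor + discount * bootstrapped_tensor
    assert target_tensor.shape.as_list() == [batch_size, action_dimension]

    # The mean squared error reduces to a scalar, so the expected shape is [].
    loss_tensor = tf.losses.mean_squared_error(target_tensor, prediction_tensor)
    assert loss_tensor.shape.as_list() == []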

Approach #2: the TensorGroupDependency package

Visualizing a graph with TensorBoard is helpful, but it comes at a price: the graph may have hundreds of nodes and edges, and you may not even know where to start. So, how do you verify that tensor dependencies are exactly what they are supposed to be?

Yi and his team developed the TensorGroupDependency package, written in Python. The package enables users to group tensors into nodes, visualize only dependencies of the introduced tensors, and automatically generate graph structural assertions.

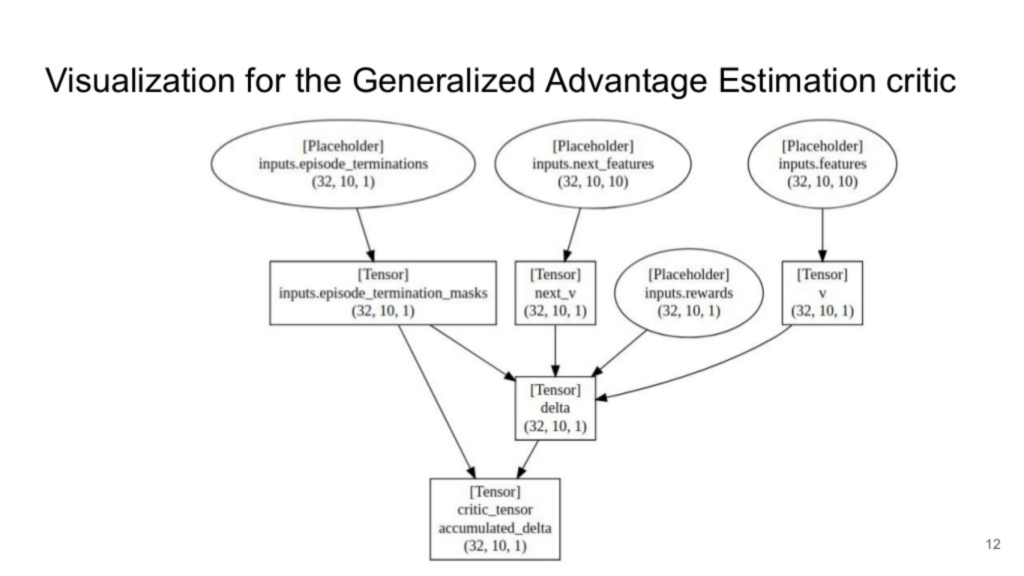

A visualization of the Generalized Advantage Estimation critic via TensorGroupDependency (Image credit)

With the package, the graph also shows the shape of a tensor. This way, you get an overview of all the shapes, as well as their flow and transformations. Dependencies are represented as edges, and you must know for sure why each edge exists. If you can’t explain why there is an edge, it usually means a bug.

“Every edge exists, because you wired up the tensor graph like this. Does this wiring match what you think it should do?” —Yi Wei, Prowler

As already mentioned, the package automatically generates assertions that describe the graph structure. By putting these assertions into your code, you have the structure checked automatically on every future execution.
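Since TensorGroupDependency is not publicly available, the sketch below does not use its API. It only illustrates the idea of a graph structural assertion in plain Python, assuming a hypothetical toy graph stored as a dictionary and a hypothetical helper named `visible_edges`.

```python
# Hypothetical toy graph: each tensor name maps to the tensors it is computed from.
graph = {
    "reward": [],
    "bootstrapped": [],
    "prediction": [],
    "scaled": ["bootstrapped"],
    "target": ["reward", "scaled"],
    "loss": ["target", "prediction"],
}

def visible_edges(graph, tracked):
    """Return (source, destination) edges between tracked tensors only."""
    edges = set()
    for destination in tracked:
        stack = list(graph[destination])
        visited = set()
        while stack:
            node = stack.pop()
            if node in visited:
                continue
            visited.add(node)
            if node in tracked:
                # Stop at the nearest tracked ancestor.
                edges.add((node, destination))
            else:
                # Look through tensors that are not tracked.
                stack.extend(graph[node])
    return edges

tracked = {"reward", "bootstrapped", "prediction", "target", "loss"}
edges = visible_edges(graph, tracked)

# The structural assertion: every edge must be one we can explain.
expected_edges = {
    ("reward", "target"),
    ("bootstrapped", "target"),
    ("target", "loss"),
    ("prediction", "loss"),
}
assert edges == expected_edges, edges ^ expected_edges
```

If a later change wires `reward` directly into `loss`, the set comparison fails and reports the unexplained edge.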

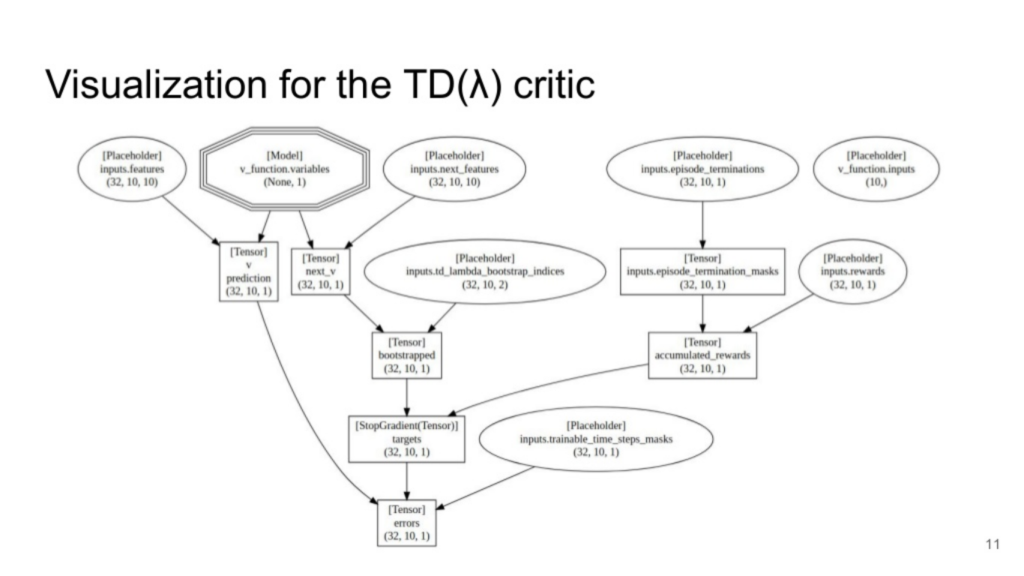

A visualization of the TD(λ) critic method via TensorGroupDependency (Image credit)

TensorGroupDependency is not generally available yet, but the team plans to open-source the package soon.

Approach #3: equation assertions

Once you have validated the tensor dependencies, you may want to verify that these dependencies perform the numerical calculations correctly. For this purpose, you evaluate tensor equations:

- for each equation in your algorithm, add the tensors involved to session.run in each optimization step

- write the same equation in NumPy with the tensor evaluations

- make sure the value TensorFlow computed matches the expected value from the NumPy version, which follows the algorithm definition
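The steps above can be sketched as follows. The arrays are hypothetical stand-ins for the values a session.run call would return, and the equation is the target computation from the shape assertion sample; `assert_target_equation` is a name chosen here for illustration.

```python
import numpy as np

def assert_target_equation(reward, bootstrapped, target, discount):
    """Recompute target = reward + discount * bootstrapped in NumPy and compare."""
    expected = reward + discount * bootstrapped
    np.testing.assert_allclose(target, expected, rtol=1e-6)

# Stand-ins for the arrays evaluated in one optimization step.
reward = np.array([1.0, 0.0, 2.0])
bootstrapped = np.array([10.0, 20.0, 30.0])
discount = 0.9
target = np.array([10.0, 18.0, 29.0])

# Passes silently when the evaluated target agrees with the equation.
assert_target_equation(reward, bootstrapped, target, discount)
```

A tolerance-based comparison is used because the two computations may differ slightly in floating-point precision.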

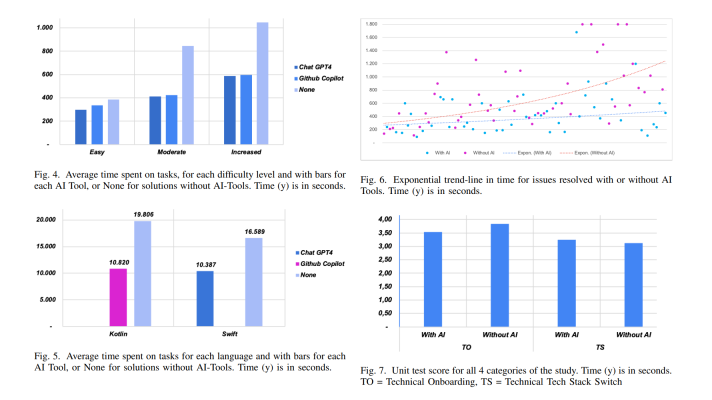

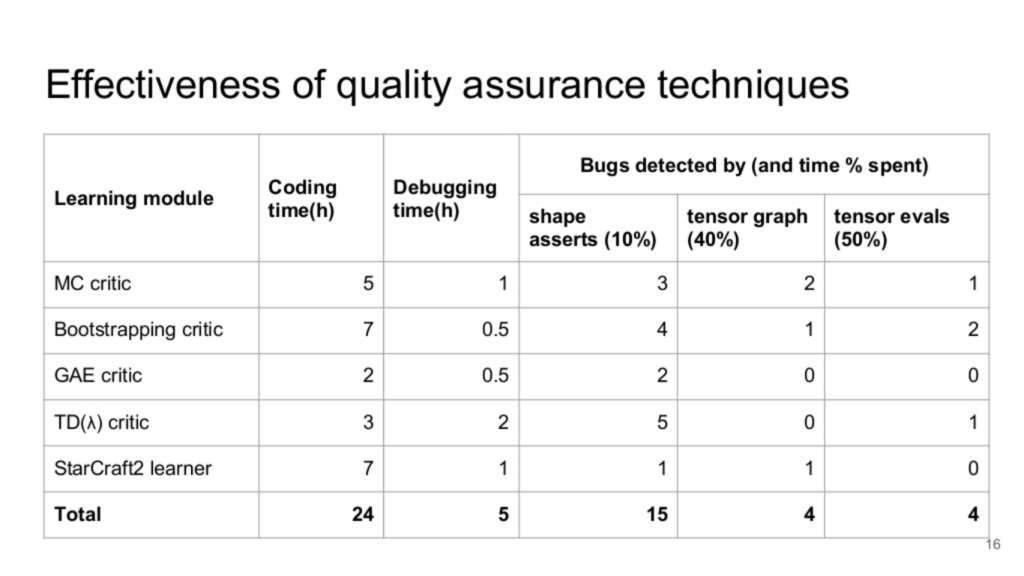

Yi shared some results of applying the assertion techniques to the TensorFlow-based learning modules his team was developing. The team spent 24 hours writing the code behind the modules. Then, in the course of five hours, the team detected 23 bugs in total.

A summary table of applying assertion techniques (Image credit)

Yi wrote a blog post in which he reveals more details about the results and the TensorGroupDependency package, and provides sample code for the assertion techniques.

The nonlinear structure of TensorFlow code makes it difficult to use general-purpose debuggers. In addition to the suggested assertion methods, you can employ the native debugger available through TensorFlow. However, because machine learning models evolve continuously, you may need to deliver customizations that complement the functionality of the TensorFlow debugger.

Further reading

- Visualizing TensorFlow Graphs with TensorBoard

- Logical Graphs: Native Control Flow Operations in TensorFlow