Spotify Runs 1,600+ Production Services on Kubernetes

Migrating to Kubernetes

Founded in 2006, Spotify has grown into the world’s largest music streaming service provider with more than 365 million active users per month. As an early adopter of microservices and Docker, the company had containerized microservices running across a fleet of virtual machines in 2014. To streamline container management, Spotify developed and open-sourced Helios, its own container orchestration system.

Over 2016–2017, the organization completed a migration from on-premises data centers to Google Cloud. During this time, the Spotify team realized that it needed the support of a much larger community to keep improving, as noted by Jai Chakrabarti, Director of Engineering at Spotify.

“Having a small team working on the Helios features was just not as efficient as adopting something that was supported by a much bigger community. We saw the amazing community that had grown up around Kubernetes, and we wanted to be part of that. We wanted to benefit from added velocity and reduced cost and also align with the rest of the industry on best practices and tools.”

—Jai Chakrabarti, Spotify

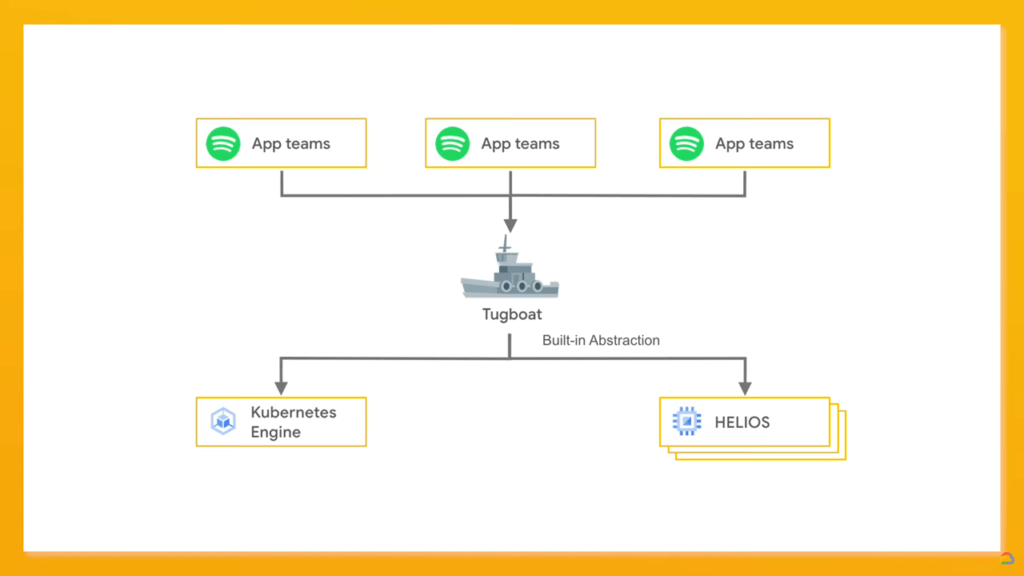

In 2018, the company addressed the core technology issues that had to be solved before migrating from Helios to Kubernetes. To resolve most of them, the Spotify team relied on Tugboat, a system built on top of Helios that provides an interface and APIs. According to Matt Brown, Staff Software Engineer at Spotify, almost every service was already connected to Tugboat, so the team only had to add Kubernetes as another deployment option.

Migrating to Kubernetes with Tugboat (Image credit)

“We already have almost every service at Spotify talking to this API. Basically, they can just tell Tugboat that I have a new version of my service, please go deploy it out to the world. We realized that if we just add Kubernetes as another option to the system, we will have everything already integrated with it.”

—Matt Brown, Spotify
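The idea of adding Kubernetes as one more option behind an API that services already call can be illustrated with a small sketch. This is not Tugboat's actual code or API, which the source does not describe. The class names `DeployApi`, `HeliosBackend`, and `KubernetesBackend` are hypothetical, and the backends only return a message instead of deploying anything.

```python
from typing import Dict, Protocol


class DeployBackend(Protocol):
    """Anything that can roll out a given version of a service."""

    def deploy(self, service: str, version: str) -> str: ...


class HeliosBackend:
    def deploy(self, service: str, version: str) -> str:
        # Placeholder: a real backend would talk to Helios here.
        return f"helios: deployed {service}@{version}"


class KubernetesBackend:
    def deploy(self, service: str, version: str) -> str:
        # Placeholder: a real backend would talk to the Kubernetes API here.
        return f"kubernetes: deployed {service}@{version}"


class DeployApi:
    """Hypothetical stand-in for a deployment front end that services call."""

    def __init__(self) -> None:
        self._backends: Dict[str, DeployBackend] = {}

    def register(self, name: str, backend: DeployBackend) -> None:
        self._backends[name] = backend

    def deploy(self, service: str, version: str, target: str = "helios") -> str:
        # Callers keep using the same method; only the target changes.
        return self._backends[target].deploy(service, version)


api = DeployApi()
api.register("helios", HeliosBackend())
api.register("kubernetes", KubernetesBackend())  # the only new wiring

print(api.deploy("playlist", "1.2.3", target="kubernetes"))
```

Because callers depend only on `DeployApi.deploy`, a service can switch targets without changing how it requests a deployment, which is the property the quote describes.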

By 2019, the migration to Kubernetes had accelerated, with over 150 services successfully transferred. One of the largest services running on Kubernetes receives more than 10 million requests per second and benefits greatly from autoscaling. However, the increase in scale led to a new challenge: managing multiple services was getting increasingly complicated.

Service management complexity at scale

According to a blog post by Matthew Clarke, Senior Infrastructure Engineer at Spotify, the widespread adoption of Kubernetes has changed how responsibilities are divided. In line with DevOps practice, developers now take on tasks previously performed by operations teams.

“When I first started using Kubernetes, cluster admins and service owners were one and the same: the people who built a cluster were usually the same people who owned the services that ran in the cluster,” explained Matthew. “That’s not how it is today. As Kubernetes has achieved widespread adoption, there has been a shift in Kubernetes usage, as well as a shift in how Kubernetes is managed at the organization level.”

The trend now is that companies have a separate infrastructure team that builds and maintains clusters for developers and service owners. While this is a better experience for developers, it comes at a cost as the organization grows.

“When your deployment environment reaches this kind of complexity and scale, the maintenance overhead for service owners increases. It forces them to use multiple kubectl contexts or multiple UIs just to get an overall view of their system. It’s a small overhead—but adds up over time—and multiplies as service owners build more services and deploy them to more regions. Just checking the status of a service first requires hunting for it across multiple clusters. This can reduce productivity (and patience) company-wide.” —Matthew Clarke, Spotify

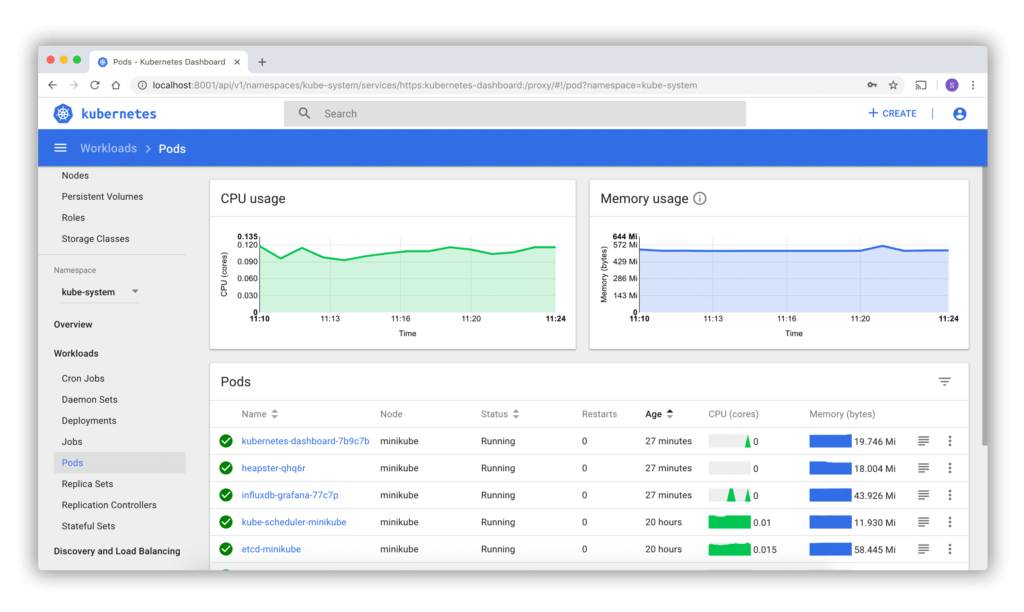

To streamline the management of multiple services, the Spotify team initially relied on developer tooling. However, they quickly realized that the available tools were not ideal due to the following factors:

- Cluster-wide permissions were required.

- Everything on a cluster was displayed, causing information bloat.

Too much information in the default dashboard (Image credit)

Unable to rely on existing tools, the Spotify team built a custom Kubernetes plug-in for Backstage, the company’s open-source platform for building developer portals.

Managing services across clusters

Backstage was developed by Spotify in 2016 and used internally to unify infrastructure tooling, services, and documentation under a single interface before being open-sourced in 2020. Backstage provided service owners with an overview showing pertinent details about a service, including what it does, its APIs, and its technical documentation.

Since adopting Backstage, the company has seen a 55% decrease in onboarding times for engineers, as noted by Stefan Ålund, Principal Product Manager at Spotify.

“Over 280 engineering teams inside Spotify are using Backstage to manage 2,000+ back-end services, 300+ websites, 4,000+ data pipelines, and 200+ mobile features.” —Stefan Ålund, Spotify

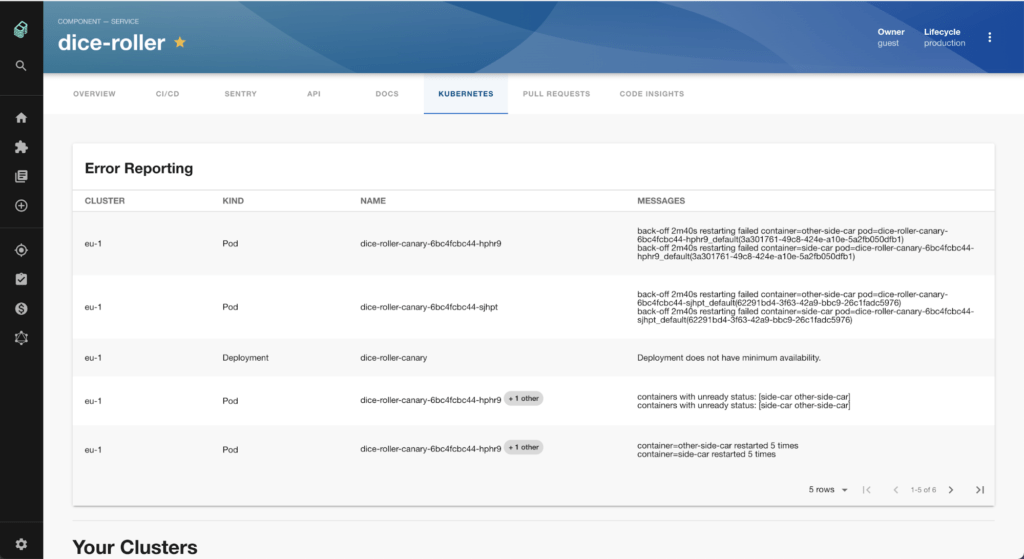

With Backstage extended by a Kubernetes monitoring plug-in, developers can check on their services regardless of how or where they are deployed. Backstage can be configured to search for a service across multiple clusters, and it then aggregates the results into a single interface.

Showing the service’s status regardless of how many clusters it is deployed to (Image credit)

“Instead of spending 20 minutes in a CLI trying to track down which clusters your service has been deployed to, you get all the information you need to know at a glance.” —Matthew Clarke, Spotify

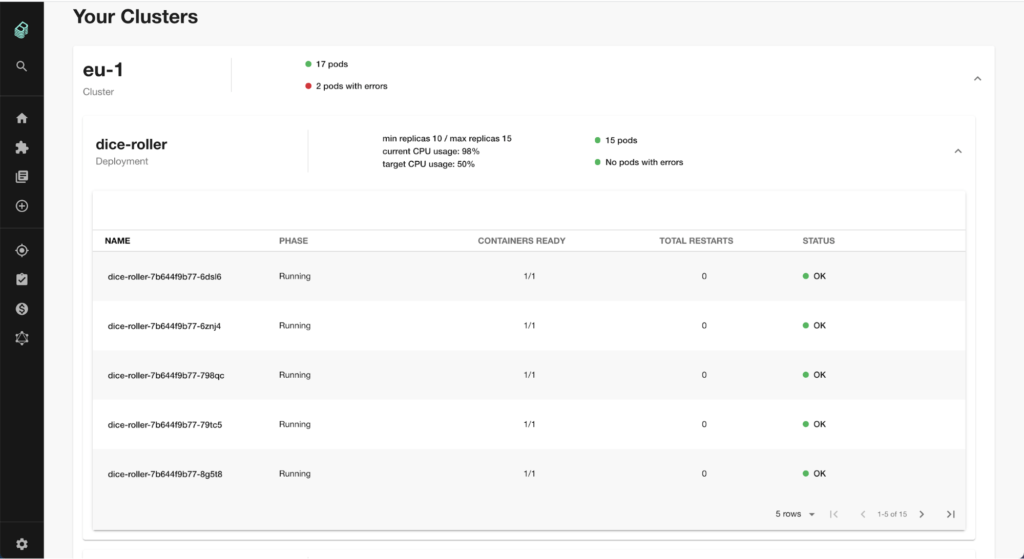
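The cross-cluster lookup described above can be sketched as a loop that queries each cluster and keeps only the workloads belonging to one service. This is an illustration of the aggregation idea, not the Backstage plug-in's implementation. The `Deployment` type, the `app` label key, and the cluster names are assumptions, and each cluster is represented by an injected function so the sketch runs without a real cluster.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass
class Deployment:
    name: str
    namespace: str
    labels: Dict[str, str]
    ready_replicas: int
    desired_replicas: int


# A fetcher returns every Deployment visible in one cluster. In practice it
# would wrap a Kubernetes API call; here it is injected as a plain function.
Fetcher = Callable[[], Iterable[Deployment]]


def find_service(service: str, clusters: Dict[str, Fetcher],
                 label_key: str = "app") -> Dict[str, List[Deployment]]:
    """Return the service's deployments grouped by cluster name."""
    found: Dict[str, List[Deployment]] = {}
    for cluster, fetch in clusters.items():
        matches = [d for d in fetch() if d.labels.get(label_key) == service]
        if matches:  # skip clusters the service is not deployed to
            found[cluster] = matches
    return found


def summarize(found: Dict[str, List[Deployment]]) -> List[str]:
    """Flatten the per-cluster results into one status line per deployment."""
    lines = []
    for cluster, deployments in sorted(found.items()):
        for d in deployments:
            lines.append(f"{cluster}/{d.namespace}/{d.name}: "
                         f"{d.ready_replicas}/{d.desired_replicas} ready")
    return lines


# Hypothetical clusters with made-up data.
clusters = {
    "europe-1": lambda: [
        Deployment("playlist", "music", {"app": "playlist"}, 3, 3),
        Deployment("search", "music", {"app": "search"}, 2, 2),
    ],
    "us-1": lambda: [Deployment("playlist", "music", {"app": "playlist"}, 4, 5)],
    "asia-1": lambda: [Deployment("search", "music", {"app": "search"}, 1, 1)],
}

for line in summarize(find_service("playlist", clusters)):
    print(line)
```

The service owner asks one question ("where is my service?") and gets a single list back, instead of switching kubectl contexts cluster by cluster.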

Backstage also automatically finds and highlights errors in Kubernetes resources affecting a service. This removes the need to run multiple kubectl commands to troubleshoot a problem.

Aggregated error reporting (Image credit)

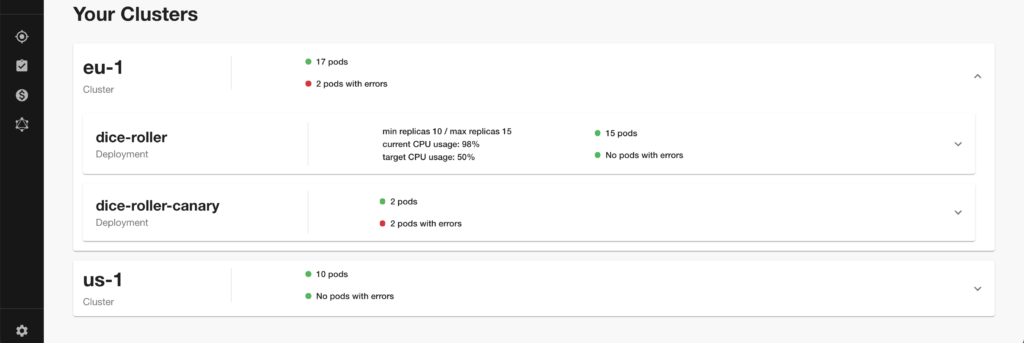
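One way to picture aggregated error reporting is a scan over pod status objects from every cluster that flags containers stuck in a known failure state. The sketch below is not how Backstage detects errors. It assumes pods are available as dictionaries shaped like the Kubernetes Pod JSON (`status.containerStatuses[].state.waiting.reason`), and the set of reasons and the sample data are illustrative.

```python
from typing import Any, Dict, List

# Waiting reasons that usually mean a container cannot start on its own.
ERROR_REASONS = {
    "CrashLoopBackOff",
    "ImagePullBackOff",
    "ErrImagePull",
    "CreateContainerConfigError",
}


def find_pod_errors(pods_by_cluster: Dict[str, List[Dict[str, Any]]]) -> List[Dict[str, Any]]:
    """Collect failing containers from all clusters into one flat list."""
    errors = []
    for cluster, pods in pods_by_cluster.items():
        for pod in pods:
            statuses = pod.get("status", {}).get("containerStatuses", [])
            for cs in statuses:
                waiting = cs.get("state", {}).get("waiting")
                if waiting and waiting.get("reason") in ERROR_REASONS:
                    errors.append({
                        "cluster": cluster,
                        "pod": pod["metadata"]["name"],
                        "container": cs["name"],
                        "reason": waiting["reason"],
                        "restarts": cs.get("restartCount", 0),
                    })
    return errors


# Made-up pod data for two hypothetical clusters.
pods_by_cluster = {
    "europe-1": [
        {"metadata": {"name": "playlist-abc"},
         "status": {"containerStatuses": [
             {"name": "app", "restartCount": 0, "state": {"running": {}}}]}},
    ],
    "us-1": [
        {"metadata": {"name": "playlist-xyz"},
         "status": {"containerStatuses": [
             {"name": "app", "restartCount": 7,
              "state": {"waiting": {"reason": "CrashLoopBackOff"}}}]}},
    ],
}

errors = find_pod_errors(pods_by_cluster)
for e in errors:
    print(f"{e['cluster']}/{e['pod']} [{e['container']}]: {e['reason']} "
          f"({e['restarts']} restarts)")
```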

Finally, Backstage shows the status of autoscaling limits, making it easier to see when a service is close to a limit, as well as how horizontal scaling is distributing the load across multiple clusters.

Autoscaling limits on display (Image credit)
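The autoscaling view can be approximated by comparing each cluster's current replica count with its configured maximum and computing each cluster's share of the total. This is a minimal sketch, not Backstage's logic. It assumes one HorizontalPodAutoscaler per cluster, given as a dictionary with the Kubernetes fields `spec.maxReplicas` and `status.currentReplicas`. The 80% warning threshold and the sample numbers are arbitrary assumptions.

```python
from typing import Any, Dict, List


def hpa_report(hpas_by_cluster: Dict[str, Dict[str, Any]],
               warn_ratio: float = 0.8) -> List[Dict[str, Any]]:
    """Summarize autoscaler headroom and load distribution per cluster."""
    total = sum(h["status"]["currentReplicas"] for h in hpas_by_cluster.values())
    report = []
    for cluster, hpa in sorted(hpas_by_cluster.items()):
        current = hpa["status"]["currentReplicas"]
        maximum = hpa["spec"]["maxReplicas"]
        report.append({
            "cluster": cluster,
            "current": current,
            "max": maximum,
            # Flag services that are close to their scaling limit.
            "near_limit": current / maximum >= warn_ratio,
            # Fraction of all replicas that run in this cluster.
            "share": current / total if total else 0.0,
        })
    return report


# Made-up autoscaler state for two hypothetical clusters.
hpas_by_cluster = {
    "europe-1": {"spec": {"minReplicas": 2, "maxReplicas": 10},
                 "status": {"currentReplicas": 9}},
    "us-1": {"spec": {"minReplicas": 2, "maxReplicas": 10},
             "status": {"currentReplicas": 3}},
}

report = hpa_report(hpas_by_cluster)
for row in report:
    flag = "NEAR LIMIT" if row["near_limit"] else "ok"
    print(f"{row['cluster']}: {row['current']}/{row['max']} replicas, "
          f"{row['share']:.0%} of load, {flag}")
```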

Backstage is cloud-agnostic and communicates directly with the Kubernetes API. Regardless of how or where an organization runs Kubernetes, the tool provides a similar view for all deployments.

As of early 2021, Spotify has 14 multitenant production clusters in three regions. The company has over 1,600 production services running on Kubernetes with around 100 microservices being added per month, all being monitored with Backstage.

Additionally, Spotify has seen a 231% increase in production deployment frequency on Kubernetes. Specifically, teams that run on Kubernetes perform 6.26 production deployments per week, compared to 1.89 deployments per week for teams not on the platform.
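The 231% figure follows from the two weekly deployment rates as a relative increase over the non-Kubernetes baseline. A short check, where the function name `percent_increase` is ours:

```python
def percent_increase(baseline: float, value: float) -> float:
    """Relative increase of value over baseline, in percent."""
    return (value - baseline) / baseline * 100


# Weekly production deployments: 1.89 off Kubernetes, 6.26 on Kubernetes.
increase = percent_increase(1.89, 6.26)
print(f"{increase:.0f}%")  # 231%
```

Put differently, teams on Kubernetes deploy roughly 3.3 times as often as teams that are not.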

About the experts

Jai Chakrabarti is Director of Engineering at Spotify. He is a technology leader who is passionate about building high-performance teams and solving complex problems. Jai has built systems that scale to hundreds of millions of users, as well as platforms where every microsecond matters. His core technical specialties include distributed systems, core infrastructure, and large-scale data processing and mining.

Matthew Clarke is Senior Infrastructure Engineer at Spotify. As a full-stack DevOps engineer, he specializes in open-source technologies, CI/CD, Kubernetes deployments, etc. Prior to Spotify, Matthew served as a developer for Financial Times, CyberSource, and KANA Software. He is a graduate of Queen’s University Belfast with first-class honors.

Stefan Ålund is Product Manager at Volvo Car Mobility. Previously, he was Principal Product Manager at Spotify, where he focused on infrastructure and developer experience. Stefan was involved in the management of Backstage and its internal deployment at Spotify. He also built tools that helped Spotify’s internal developers move even faster on product features.

Matt Brown is Staff Software Engineer at Spotify. He specializes in Java and the development of web applications. Prior to Spotify, Matt served as a software engineer and consultant for Citrix, Vapps, Nuance Communications, and Viecore.