Cloud Foundry Service Broker for GCP: What’s in It for Machine Learning?

With the development of a service broker for GCP, Cloud Foundry users can now work with BigQuery, Bigtable, Cloud SQL, Cloud Storage, machine learning APIs, Pub/Sub, Spanner, Stackdriver Debugger, and Stackdriver Trace. At Cloud Foundry Summit 2017, presenters from Google and Pivotal focused on the available machine learning APIs and on live debugging.

A plethora of machine learning APIs

Colleen Briant is part of a Google team that maintains open-source integrations with the company’s cloud platform. She started by introducing a number of machine learning APIs that are available through the service broker for Google Cloud Platform.

For instance, Translation API helps users dynamically translate text between thousands of available language pairs. This API can be a significant help if one needs to quickly translate a web page or a whole website.

“So, a possible use case for that [Translation API] would be just translating your own website. So, just upload your HTML pages, run them through the API, and out pops a globally accessible website.” —Colleen Briant, Google

Translation API’s documentation
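To make the website use case more concrete, here is a minimal Python sketch of the JSON body one might POST to the Translation API v2 `translate` method for a batch of HTML pages, plus a helper that reads the translated strings back. It assumes the v2 REST endpoint and its field names (`q`, `source`, `target`, `format`, `data.translations[].translatedText`); authentication and the HTTP call itself are left out, and the page content is made up.

```python
import json

# REST endpoint of the Translation API v2 "translate" method.
TRANSLATE_URL = "https://translation.googleapis.com/language/translate/v2"


def build_translate_request(html_pages, target, source="en"):
    """Build the JSON body for translating a batch of HTML pages.

    "format": "html" asks the API to keep the markup and translate
    only the text content.
    """
    return {
        "q": list(html_pages),  # one entry per page or fragment
        "source": source,
        "target": target,
        "format": "html",
    }


def extract_translations(response):
    """Return the translated strings from a v2 response, in request order."""
    return [t["translatedText"] for t in response["data"]["translations"]]


if __name__ == "__main__":
    body = build_translate_request(["<h1>Welcome</h1>", "<p>Contact us</p>"], target="es")
    # POST json.dumps(body) to TRANSLATE_URL with an API key or OAuth token.
    print(json.dumps(body, indent=2))
```

Sending every page in one `q` list keeps the number of round trips low; very large sites would still need to be split into several requests.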

Natural Language API, another member of the Google Cloud API family, analyzes the structure and meaning of text. With it, one can extract information about subjects of interest from text documents, news articles, or blog posts. The API is effective at understanding sentiment (e.g., feedback on a product posted on social media) and at parsing intent, say, from customer conversations with a call center or virtual assistant.

“So, something you could use this for and at your own company would be to process customer reviews, pick out the ones that are especially positive, and try to highlight those phrases on your next marketing campaign.” —Colleen Briant, Google

Natural Language API’s documentation
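For the customer-review idea, the sketch below builds a request body for the Natural Language API's `documents:analyzeSentiment` method and then keeps only the sentences scored as especially positive. Scores run from -1.0 (negative) to 1.0 (positive); the 0.8 cut-off and the sample response are illustrative assumptions, not values from the talk.

```python
# REST endpoint of the Natural Language API sentiment method.
SENTIMENT_URL = "https://language.googleapis.com/v1/documents:analyzeSentiment"


def build_sentiment_request(text):
    """Request body asking for document- and sentence-level sentiment."""
    return {
        "document": {"type": "PLAIN_TEXT", "content": text},
        "encodingType": "UTF8",
    }


def pick_positive_sentences(response, min_score=0.8):
    """Keep sentences whose score (-1.0 to 1.0) is at least min_score.

    Zero scores may be omitted from the JSON, so a missing score counts as 0.0.
    """
    return [
        s["text"]["content"]
        for s in response.get("sentences", [])
        if s.get("sentiment", {}).get("score", 0.0) >= min_score
    ]


if __name__ == "__main__":
    # Made-up response, shaped like an analyzeSentiment result.
    sample = {
        "documentSentiment": {"score": 0.4, "magnitude": 1.8},
        "sentences": [
            {"text": {"content": "The sofa arrived late."}, "sentiment": {"score": -0.5}},
            {"text": {"content": "But it is the comfiest thing I own!"}, "sentiment": {"score": 0.9}},
        ],
    }
    print(pick_positive_sentences(sample))
```

Filtering at the sentence level, rather than on the whole review, is what lets a single glowing phrase be lifted out of an otherwise mixed review.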

Cloud Speech API converts audio to text and recognizes over 80 languages. Common use cases include transcribing text dictated into an app’s microphone and voice command-and-control.

“Say, you have a phone system that you route to for customer support and you detect that your user is really struggling to use the system. Maybe the reason is they are trying to speak a language that your system is not in. So, you take a little audio sample, run it through the API, and try to route it to an agent that can assist them in their native tongue.” —Colleen Briant, Google

Cloud Speech API’s documentation
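One rough way to approach the call-routing scenario with the v1 `speech:recognize` method is to transcribe the same short sample under several candidate language codes and route the caller to the language whose top transcript comes back with the highest confidence. This is a simplistic heuristic assumed for illustration, not a built-in language-identification feature; the 8 kHz LINEAR16 phone-audio settings and the sample responses are also assumptions.

```python
import base64

# REST endpoint of the Speech API v1 synchronous recognition method.
RECOGNIZE_URL = "https://speech.googleapis.com/v1/speech:recognize"


def build_recognize_request(audio_bytes, language_code, sample_rate_hz=8000):
    """Request body for a short raw-PCM (LINEAR16) sample, e.g. phone audio."""
    return {
        "config": {
            "encoding": "LINEAR16",
            "sampleRateHertz": sample_rate_hz,
            "languageCode": language_code,
        },
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }


def best_confidence(response):
    """Highest confidence among top alternatives; 0.0 if nothing was recognized."""
    scores = [
        r["alternatives"][0].get("confidence", 0.0)
        for r in response.get("results", [])
        if r.get("alternatives")
    ]
    return max(scores, default=0.0)


def guess_caller_language(responses_by_language):
    """Heuristic: pick the language code whose attempt scored highest."""
    return max(
        responses_by_language,
        key=lambda code: best_confidence(responses_by_language[code]),
    )
```

In practice one request would be sent per candidate code (for example `en-US`, `es-ES`, `fr-FR`), and the resulting responses collected into the dictionary passed to `guess_caller_language`.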

The newest addition to the Google collection is Video Intelligence API, which extracts metadata from videos, enabling users to search every moment of a video file, find occurrences of interest, and identify their significance. The API can annotate videos stored in Google Cloud Storage and detect key noun entities along with the time they occur in the video. Furthermore, one can separate signal from noise by retrieving relevant information (e.g., per frame).

As Colleen put it, one can drive business insights by holding a competition in which users upload videos of themselves interacting with a product. Video Intelligence API can then pick out the moments where the product is showcased and collect those snippets for further business use.

Video Intelligence API’s documentation
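To sketch the product-competition idea, the code below builds a `videos:annotate` request for a video in Cloud Storage and then scans a label-detection result for the time ranges in which a given label (for example, a product category) shows up. The field names follow the v1 REST API as we understand it; the bucket path, label, confidence threshold, and sample result are hypothetical. Note that `videos:annotate` returns a long-running operation, so the annotation result has to be fetched once that operation completes.

```python
# REST endpoint of the Video Intelligence API v1 annotate method.
ANNOTATE_URL = "https://videointelligence.googleapis.com/v1/videos:annotate"


def build_annotate_request(gcs_uri):
    """Ask for label detection on a video stored in Cloud Storage."""
    return {"inputUri": gcs_uri, "features": ["LABEL_DETECTION"]}


def _seconds(duration):
    """JSON-encoded durations look like "12.5s"; a missing value means 0."""
    return float(duration.rstrip("s")) if duration else 0.0


def find_label_segments(annotation_result, label, min_confidence=0.5):
    """Return sorted (start, end) times, in seconds, where `label` was detected."""
    hits = []
    annotations = (annotation_result.get("segmentLabelAnnotations", [])
                   + annotation_result.get("shotLabelAnnotations", []))
    for ann in annotations:
        if ann.get("entity", {}).get("description", "").lower() != label.lower():
            continue
        for seg in ann.get("segments", []):
            if seg.get("confidence", 0.0) >= min_confidence:
                span = seg["segment"]
                hits.append((_seconds(span.get("startTimeOffset")),
                             _seconds(span.get("endTimeOffset"))))
    return sorted(hits)
```

With a finished operation, a call such as `find_label_segments(result["annotationResults"][0], "bicycle")` would list the moments worth clipping for the campaign.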

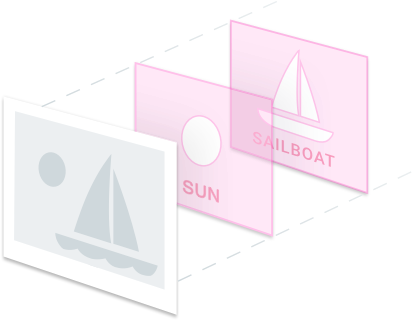

Cloud Vision API is designed to classify images into categories, as well as detect individual objects/faces and recognize printed words in an image. The perks of using this API include:

- building metadata on an image catalog

- moderating offensive/explicit content

- enabling new marketing scenarios via image sentiment analysis

For instance, a furniture retailer could encourage customers to upload pictures of products they’ve bought from other retailers, then apply palette detection to compare them against its own offer and make suggestions.

Cloud Vision API’s documentation
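The furniture example could look roughly like this: request `IMAGE_PROPERTIES` for a customer's photo, take the highest-scoring dominant color, and rank items of a (hypothetical) in-house catalog by their distance in RGB space. Plain Euclidean RGB distance is a deliberately crude similarity measure chosen for brevity; a real system would likely compare several colors in a perceptual color space.

```python
import math

# REST endpoint of the Cloud Vision API v1 annotate method.
VISION_URL = "https://vision.googleapis.com/v1/images:annotate"


def build_palette_request(image_uri):
    """Ask Cloud Vision for the dominant colors of one image."""
    return {"requests": [{
        "image": {"source": {"imageUri": image_uri}},
        "features": [{"type": "IMAGE_PROPERTIES"}],
    }]}


def dominant_rgb(annotate_response):
    """Highest-scoring dominant color of the first image, as an (r, g, b) tuple."""
    colors = (annotate_response["responses"][0]
              ["imagePropertiesAnnotation"]["dominantColors"]["colors"])
    top = max(colors, key=lambda c: c.get("score", 0.0))
    # Channels equal to zero are omitted from the JSON, so default them to 0.
    return tuple(top["color"].get(ch, 0) for ch in ("red", "green", "blue"))


def closest_products(rgb, catalog, k=2):
    """Rank a hypothetical {product_name: (r, g, b)} catalog by RGB distance."""
    return sorted(catalog, key=lambda name: math.dist(rgb, catalog[name]))[:k]
```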

Just combine them!

As standalone tools, the APIs above are already useful, but their real power comes from combining them. A good example is the Google Translate app, whose functionality can be simulated by using Cloud Vision API to extract the text from an image and then translating it into the desired language via Translation API.

How Google’s Neural Machine Translation works (Source)
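The Translate-app combination can be sketched as a two-step pipeline: ask Cloud Vision for `TEXT_DETECTION`, take the full text block (the first entry of `textAnnotations`), and feed it to a Translation API v2 request without a `source` field so that the language is detected automatically. The request shapes are assumed from the public REST documentation; the sample response is made up.

```python
# Two-step pipeline: Cloud Vision OCR, then Translation API v2.


def build_ocr_request(image_uri):
    """Ask Cloud Vision to detect text in one image."""
    return {"requests": [{
        "image": {"source": {"imageUri": image_uri}},
        "features": [{"type": "TEXT_DETECTION"}],
    }]}


def extracted_text(annotate_response):
    """The first textAnnotations entry holds the whole detected text block."""
    annotations = annotate_response["responses"][0].get("textAnnotations", [])
    return annotations[0]["description"].strip() if annotations else ""


def translation_request_for(annotate_response, target):
    """Translation API v2 body for the OCR'd text, or None if no text was found.

    "source" is left out so that the API detects the language itself.
    """
    text = extracted_text(annotate_response)
    if not text:
        return None
    return {"q": [text], "target": target, "format": "text"}
```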

Colleen also suggested applying Cloud Speech and Natural Language APIs to customer voicemails to gauge overall sentiment: if callers seem happy, just record their kudos; if not, call them back to help out with their situation.
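The voicemail triage could chain the two APIs roughly as follows: join the top transcript of every Speech API result, wrap it in an `analyzeSentiment` request body, and decide from the document-level score whether to log the kudos or schedule a callback. The 0.25 threshold and the action names are hypothetical; sensible values would depend on real voicemails.

```python
def transcript_from_speech(speech_response):
    """Join the top alternative of every Speech API result into one transcript."""
    parts = [
        r["alternatives"][0]["transcript"].strip()
        for r in speech_response.get("results", [])
        if r.get("alternatives")
    ]
    return " ".join(parts)


def sentiment_request_for(speech_response):
    """Natural Language analyzeSentiment body for the voicemail transcript."""
    return {
        "document": {"type": "PLAIN_TEXT",
                     "content": transcript_from_speech(speech_response)},
        "encodingType": "UTF8",
    }


def triage(sentiment_response, happy_threshold=0.25):
    """Hypothetical rule: happy callers get their kudos logged, others a callback."""
    # A score of 0.0 may be omitted from the JSON, hence the default.
    score = sentiment_response.get("documentSentiment", {}).get("score", 0.0)
    return "record_kudos" if score >= happy_threshold else "call_back"
```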

She also highlighted the benefits of monitoring Twitter for tweets related to one’s product. Such tweets could be run through Natural Language API for sentiment analysis, and any attached images could then be checked for adult, fraudulent, or otherwise harmful content via Cloud Vision API. In a nutshell, this is an easy way to identify “marketable” content to use in the next campaign.
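A possible filter for the Twitter scenario: a tweet counts as marketable only if its Natural Language sentiment score clears a threshold and, when it carries an image, Cloud Vision's `safeSearchAnnotation` rates every checked category as `VERY_UNLIKELY` or `UNLIKELY`. The threshold, the choice of categories, and the treatment of missing ratings as unsafe are assumptions made for this sketch.

```python
# Likelihood values used by Cloud Vision safe search; only the two lowest pass here.
ACCEPTABLE = {"VERY_UNLIKELY", "UNLIKELY"}
CHECKED_CATEGORIES = ("adult", "spoof", "violence", "racy")


def image_is_safe(safe_search):
    """A missing rating is treated as "UNKNOWN" and therefore as unsafe."""
    return all(safe_search.get(cat, "UNKNOWN") in ACCEPTABLE
               for cat in CHECKED_CATEGORIES)


def is_marketable(sentiment_response, vision_response=None, min_score=0.6):
    """Positive tweet and, if it has an image, a clean safe-search result."""
    score = sentiment_response.get("documentSentiment", {}).get("score", 0.0)
    if score < min_score:
        return False
    if vision_response is None:  # text-only tweet
        return True
    safe = vision_response["responses"][0].get("safeSearchAnnotation", {})
    return image_is_safe(safe)
```

Erring on the side of rejecting unrated images keeps questionable content out of a campaign at the cost of occasionally discarding a usable tweet.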

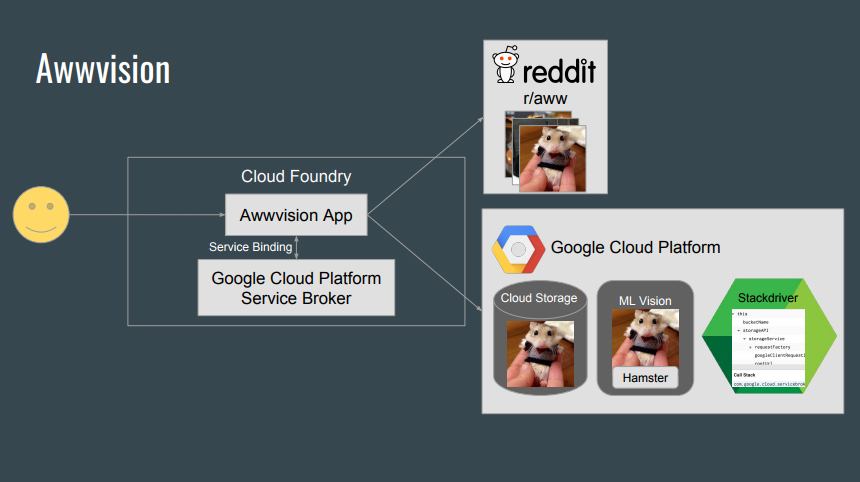

Debugging with Stackdriver

Colleen and Mikey Boldt of Pivotal demonstrated an app that scrapes the r/aww subreddit, which is basically a collection of all sorts of cuties and fluffies (puppies, kitties, hamsters, or whatever you’re into). The app saves images from Reddit to Google Cloud Storage and runs Cloud Vision API over them. The API was used for:

- Finding where else on the web the same images appear

- Generating the image’s color palette

- Making sure the image has no explicit content

- Storing all the image-related information returned by the API in JSON format

A reference architecture of a sample app (Source)

The top tag, or label, returned by the API is saved and presented to the user together with the image. You can check out the app in this GitHub repo.
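A rough idea of what a single Cloud Vision call for this kind of app might contain: one `images:annotate` request asking for labels, web detection, image properties, and safe search, followed by a helper that pulls out the top label and the pages where the image was also found, and keeps the raw JSON for storage. This is not the code from the demo repo; the feature choice and the summary format are assumptions.

```python
import json

# Features roughly matching the demo app's uses: labels, web matches,
# color palette, and an explicit-content check.
FEATURES = ["LABEL_DETECTION", "WEB_DETECTION", "IMAGE_PROPERTIES",
            "SAFE_SEARCH_DETECTION"]


def build_app_request(image_uri):
    """One images:annotate request asking for several features at once."""
    return {"requests": [{
        "image": {"source": {"imageUri": image_uri}},
        "features": [{"type": f} for f in FEATURES],
    }]}


def summarize(annotate_response):
    """Top label, pages showing the same image, and the raw JSON for storage."""
    result = annotate_response["responses"][0]
    labels = result.get("labelAnnotations", [])
    top = (max(labels, key=lambda a: a.get("score", 0.0))["description"]
           if labels else None)
    pages = [p["url"] for p in
             result.get("webDetection", {}).get("pagesWithMatchingImages", [])]
    return {"top_label": top, "also_seen_on": pages, "raw_json": json.dumps(result)}
```

Requesting all features in one call means each image costs a single round trip, although each feature is still billed separately.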

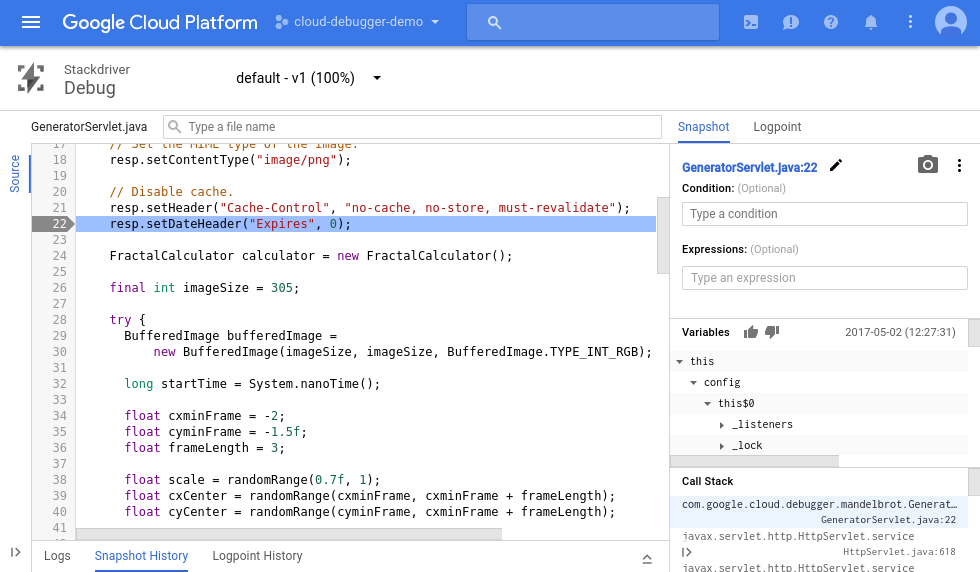

Then, Mikey and Colleen showcased live debugging of this app, already pushed to Cloud Foundry, with Stackdriver Debugger integrated through the Java buildpack. (According to Mikey, support for Python and Go is on the way.)

Stackdriver Debugger, part of Google Cloud Platform, lets you inspect an app’s state at any code location without adding logging statements and without stopping or slowing down the application. The debugger’s core features are:

- Debugging in production. As already mentioned, no logging statements need to be added. Instead, one takes a snapshot of the app’s state and links it back to the source code. The local variables and call stack at a specific line location are captured the first time any instance executes that code.

- Multiple source options, or no source code at all. Is it even legal? Well, if you happen not to have access to the source code, all you need is a file name and a line number to take a snapshot. Alternatively, one can upload the source to Debug, connect Debug to local source files, or use a hosted source repository of choice (e.g., Google Cloud Source Repositories, GitHub, or Bitbucket).

- Team collaboration through sharing a debug session.

- Integration into existing developer workflows. Once the debugger is launched, one can take snapshots directly from logging and error-reporting dashboards, IDEs, and the command-line interface.
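To illustrate what a snapshot means here (capturing locals and the call stack the first time a given file and line execute, without halting the program), below is a toy Python analogue built on `sys.settrace`. It is only a conceptual sketch, not how Stackdriver's Java agent works; in particular, unlike the real debugger, Python tracing does slow the traced thread while it is armed. The class name `LineSnapshot` is hypothetical.

```python
import sys
import traceback


class LineSnapshot:
    """Toy analogue of a debugger snapshot: record locals and the call stack
    the first time `filename:lineno` is about to execute, then stop tracing."""

    def __init__(self, filename, lineno):
        self.filename = filename
        self.lineno = lineno
        self.captured = None  # filled in on the first hit only

    def _global_trace(self, frame, event, arg):
        # Called for each new frame; trace lines only in the target file.
        if self.captured is None and frame.f_code.co_filename == self.filename:
            return self._local_trace
        return None

    def _local_trace(self, frame, event, arg):
        if self.captured is not None:
            return None
        if event == "line" and frame.f_lineno == self.lineno:
            self.captured = {
                "locals": dict(frame.f_locals),  # state before the line runs
                "stack": traceback.format_stack(frame),
            }
            sys.settrace(None)  # disarm: the program simply keeps running
            return None
        return self._local_trace

    def __enter__(self):
        sys.settrace(self._global_trace)
        return self

    def __exit__(self, *exc_info):
        sys.settrace(None)
        return False
```

Wrapping a call in `with LineSnapshot(path, line) as snap:` leaves the captured locals and stack in `snap.captured` once that line has run; the code itself is never paused.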

Stackdriver’s console view (Source)

Stackdriver is available to Cloud Foundry through a Nozzle and its analogue for Pivotal CF. The debugger, as well as the machine learning APIs, is integrated through the GCP Service Broker. (Check out this GitHub repo for the service broker’s integration with open-source Cloud Foundry, as well as the documentation for Pivotal CF’s integration.) For technical details, check out the Stackdriver Debugger documentation.
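On the application side, a service bound through a Cloud Foundry service broker shows up in the `VCAP_SERVICES` environment variable. The sketch below reads the first instance of a given service and decodes its service-account key. The service label `google-ml-apis` and the credential field names `ProjectId` and `PrivateKeyData` (a base64-encoded key JSON) are assumptions about the GCP Service Broker's output; the broker's documentation has the authoritative names.

```python
import base64
import json
import os


def gcp_credentials(service_label="google-ml-apis", env=None):
    """Return (project_id, service_account_info) for the first bound instance."""
    env = os.environ if env is None else env
    services = json.loads(env.get("VCAP_SERVICES", "{}"))
    instances = services.get(service_label, [])
    if not instances:
        raise LookupError(f"no service bound with label {service_label!r}")
    creds = instances[0]["credentials"]
    # Assumption: the broker ships the service-account key as base64-encoded
    # JSON under "PrivateKeyData", alongside "ProjectId".
    key_info = json.loads(base64.b64decode(creds["PrivateKeyData"]))
    return creds["ProjectId"], key_info
```

The returned key dictionary could then be passed to `google.oauth2.service_account.Credentials.from_service_account_info` from the `google-auth` library to authenticate API clients.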

Want details? Watch the video!


You can also watch a session by Google’s Jeff Johnson, covering how to monitor Cloud Foundry infrastructure and apps with Stackdriver and BigQuery.


About the experts