How to Avoid Typical Mistakes Using Amazon Web Services

Executive summary

According to a recent study by Microsoft, about 30% of companies plan to integrate cloud computing into their infrastructures within the next two years. The trend is not hard to explain. Another survey by Avanade, a global IT consultancy, revealed that four out of five IT managers and executives believe their existing internal IT systems are too expensive. In the current economic situation, those costs are rising even further.


What makes so many companies consider migrating to the cloud is that cloud computing, in addition to its evident flexibility and scalability, enables companies to cut costs dramatically. SmugMug, a photo hosting service, claims to have saved $1,000,000 in just seven months after moving to the cloud. Thousands of companies have followed SmugMug, hoping for the same or even greater success.

The increasing demand has also led to a growing number of cloud service providers, with Amazon Web Services becoming the most popular one thanks to its rich functionality and strong security.

When rushing to the cloud, many IT executives forget about the new challenges, which makes it harder for them to get all the benefits of cloud computing. Our experience in the cloud has helped us to figure out the most common challenges companies face and mistakes they frequently make. This handbook is here to assist you in solving these issues.

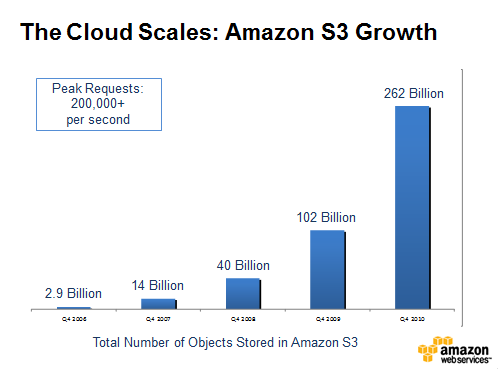

Amazon S3 growth during 2008–2010 (Image credit)


Cloud computing: a new name for a good old thing?

Cloud computing is an evolution of three popular IT business trends: infrastructure as a service (IaaS), platform as a service (PaaS), and software as a service (SaaS). The main features of cloud computing include:

- It is run on the web (“in the cloud”).

- It makes use of outsourced, shared infrastructure, which is called the cloud because its complexity is hidden from the user.

- It is commonly offered as an on-demand service, meaning that customers pay only for their usage of the service, not the service itself, and delegate all maintenance issues to the cloud provider.

- It offers scalable, dynamic resources that follow the application’s current requirements. There is no need to allocate much memory for a simple application, and, on the other hand, additional resources can be drawn in at any time for a complex dynamic app.

- Usage of cloud computing can be measured and billed exactly for the amount used. This is probably the most appropriate method, considering the scalability of resources and variable usage intensity. You do not need to overpay!

Representing a new approach to information services delivery, cloud computing is beneficial in a variety of ways:

- Cloud computing offers flexibility, making it possible to access information from anywhere. The information is not stored on a local server; it is stored on the web (“in the cloud”).

- Cloud computing is easy to implement: getting up and running takes much less time than ever before.

- Cloud computing is cost-effective, as you pay for the service incrementally—the price depends only on your usage!

- With cloud computing, there is no need to worry about keeping your software up to date or constantly upgrading your hardware. Everything is done by the cloud provider at no extra charge.

- Cloud computing is safe. Service providers back up their data, so even if one or two servers crash, no data is lost. Disaster recovery costs with cloud computing are also about half as high, thanks to cost-effective virtualization.


These and other considerations explain the 30 percent increase in enterprise use of cloud computing recently reported by RSA. A survey of American companies by RSA has also revealed that 16% of businesses plan to migrate to the cloud within the next 12 months.

However, like any other technology, cloud computing brings its own challenges, and companies need to be prepared for them in order to stay effective.

Four common challenges of cloud computing

Companies that go for cloud computing without proper preparation are likely to run into a number of obstacles where least expected. Here are some of the most typical challenges:

#1. Different standards

Major cloud computing providers, such as Amazon, IBM, Cisco, Salesforce.com, Microsoft, and Google, have come up with their own standards of cloud computing, followed by a considerable number of smaller providers.

While the range of standards to choose from may seem a good thing, you will no longer think so when you need to move data from one cloud to another or make two applications in different clouds interact. The cloud is easy and flexible as long as you stay within it, but as soon as you start working with another cloud, nothing is simple anymore.

Currently, a universally accessible cloud computing application is still more of a dream, so making data move seamlessly between clouds takes considerable effort.

#2. Caring about security

According to the IDC Enterprise Panel, about 75% of IT executives expressed concern about security issues with cloud computing. As with any other outsourcing relationship, cloud computing means entrusting your data to a third party. Hence, responsibility for securing that data is also handed over to the service provider. Can companies be sure that the cloud will take good care of their data?


The system of user authentication and authorization used by a cloud computing service provider should minimize the risk of data getting into the wrong hands. Furthermore, since a cloud is multi-tenant, it is pivotal that a service provider can guarantee faultless data segregation from other customers. The typical way to segregate data is to encrypt it, but, according to Gartner, “encryption accidents can make data totally unusable, and even normal encryption can complicate availability.”

Another question is whether it is secure to transfer data to and from the cloud. All transfers should rule out even the slightest chance of interception. Then, what happens when a cloud computing service user removes some of their data? Basic security requirements dictate that deleted data must be physically removed from the cloud’s servers immediately.

One more potential source of risk is today’s erratic economy. If the service provider should become bankrupt or get swallowed up by a bigger company, what is to become of the data on its servers?

A good cloud computing provider should be able to address these and other questions that may arise. Moreover, it should be open about all matters that closely concern its customers. A company that has its data in the cloud has every right to know where this data is stored (in practice, companies often do not even know in which country the servers are located) and to carry out an investigation of inappropriate or illegal activity in the cloud.

#3. Application performance

There are two aspects that may limit an application’s performance in the cloud.

First, it is necessary to realize that applications in the cloud may run slower than on a local machine. The very idea of virtualization implies certain performance penalties, so IT executives should not be surprised when they notice a 20% or even greater decrease in application performance in the cloud compared to the local environment. However, with some applications, the slowdown will be practically unnoticeable.

Many factors determine how an application runs in the cloud, such as application type, workload type, and hardware. It also matters that with cloud computing, you cannot rely on a homogeneous environment. The cloud is, in fact, quite heterogeneous: different CPUs, different amounts of memory, etc. Knowing how an application runs in the cloud is crucial for being realistic about your overall performance.

The second aspect, another virtualization issue, is that the maximum amount of memory available on any single virtual machine is 15.5 GB so far. This will probably suffice for most needs, and you can still combine the resources of several virtual instances, but applications that use more memory on local machines may not run smoothly on a single virtual machine in the cloud. Virtualization vendors such as VMware and Citrix are likely to raise this limit in the future, but keep it in mind for now.
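The memory ceiling above lends itself to a quick capacity check. The following is a minimal sketch, assuming the 15.5 GB figure quoted in the text and an application that can actually split its workload across instances; the function names are illustrative and not part of any AWS API.

```python
import math

# Per-instance memory ceiling quoted in the text (an assumption that may change).
MAX_INSTANCE_MEMORY_GB = 15.5


def fits_on_single_instance(required_gb: float,
                            per_instance_gb: float = MAX_INSTANCE_MEMORY_GB) -> bool:
    """Return True if the application's memory need fits on one virtual machine."""
    return required_gb <= per_instance_gb


def instances_needed(required_gb: float,
                     per_instance_gb: float = MAX_INSTANCE_MEMORY_GB) -> int:
    """Return how many instances are needed to cover the memory requirement.

    This only makes sense for applications that can distribute their
    working set across several machines.
    """
    if required_gb <= 0:
        return 0
    return math.ceil(required_gb / per_instance_gb)
```

An application that needs 40 GB would not fit on one instance and, if it can be partitioned, would need three.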

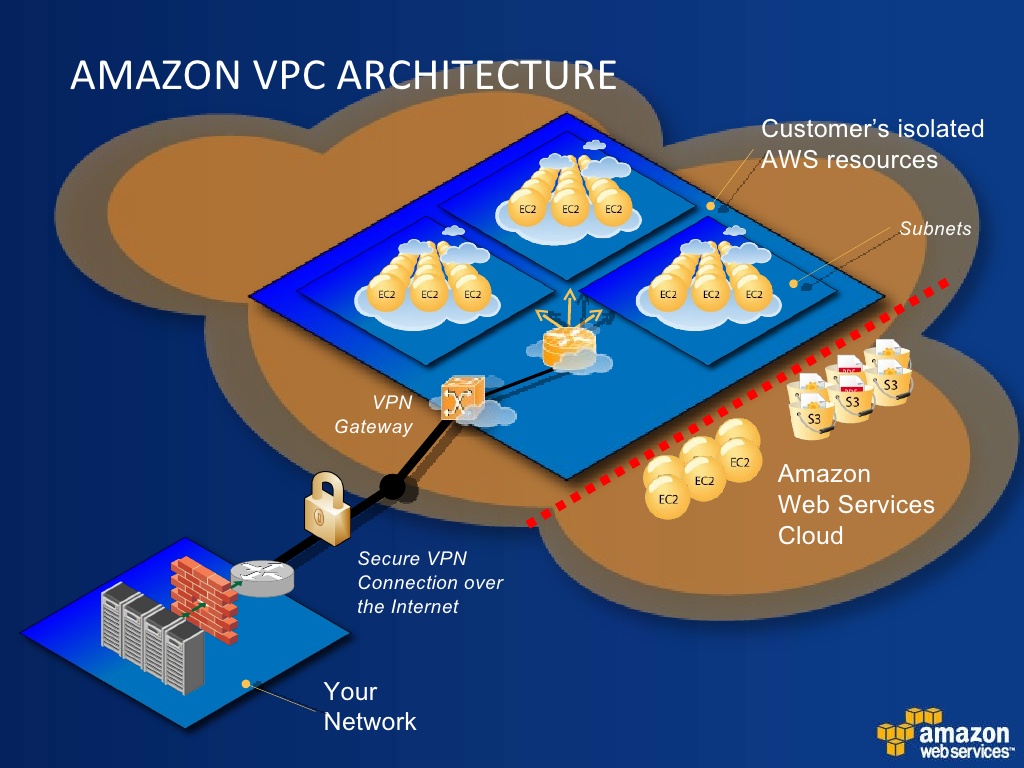

#4. Interaction between private and public clouds

When resorting to cloud computing, most companies do not externalize all of their data. Considerable amounts of information remain in the so-called “private” cloud, which means that it is still physically stored within a company’s private network.

Because data migrates between a private and a public cloud very often, this process should be as seamless as possible. On top of that, it should meet your security standards. These important details should be arranged before signing a cloud computing deal.

But there is more to it. Imagine that a company decides to back up its local data in the cloud or to save its public cloud data locally. This definitely makes the data safer, but the issue of synchronization steps in. You do not want to update your data twice and would prefer automatic synchronization with the cloud. A good cloud computing provider is one that offers this kind of service.
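To illustrate what automatic synchronization has to decide, here is a minimal sketch that compares content digests and reports which files need uploading or deleting. It assumes the remote side can report a SHA-256 digest per file; the function names and the in-memory dictionaries are hypothetical stand-ins, not a provider API.

```python
import hashlib
from typing import Dict, List, Tuple


def digest(data: bytes) -> str:
    """Return a SHA-256 hex digest used to detect changed content."""
    return hashlib.sha256(data).hexdigest()


def plan_sync(local_files: Dict[str, bytes],
              remote_digests: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Compare local content with remote digests.

    Returns (to_upload, to_delete): names that are new or changed locally,
    and names that exist remotely but no longer exist locally.
    """
    to_upload = sorted(
        name for name, data in local_files.items()
        if remote_digests.get(name) != digest(data)
    )
    to_delete = sorted(name for name in remote_digests if name not in local_files)
    return to_upload, to_delete
```

Only changed files are transferred, which avoids updating the same data twice.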

An enterprise-grade Amazon Virtual Private Cloud architecture (Image credit)


Why Amazon Web Services?

With the wide range of cloud computing services available in the market today, IT executives sometimes do not know where to look first. In this case, you’d better start with the services that enjoy the greatest popularity and best reputation today: Amazon Web Services (AWS).


Amazon Web Services allow companies to use an effective, time-proven computing infrastructure that Amazon has been using for its own global network of websites. The three most popular cloud computing services from Amazon are the following.

Amazon Simple Storage Service (S3)

Amazon S3 is a cloud storage service that allows you to store any amount of data and retrieve it from anywhere on the web. An unlimited number of objects, each ranging from 1 byte to 5 GB in size, can be stored. To sort objects, S3 allows grouping them into buckets, which are very similar to local folders.

Amazon S3 lets you set access restrictions for each of your objects and offers several ways to access them, from HTTP requests to the BitTorrent protocol. Amazon also ensures high availability of S3 users’ data, guaranteeing 99.9 percent uptime, measured monthly. The service is available at a very affordable cost: $0.12–$0.15 per 1 GB.
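The two figures above can be turned into quick estimates. This is a minimal sketch, assuming the quoted $0.12–$0.15 rate applies per gigabyte per month of storage and that a month has 30 days; request and transfer charges are ignored, and the function names are illustrative only.

```python
# Storage price range quoted in the text, in USD per GB (assumed to be monthly).
PRICE_PER_GB_LOW = 0.12
PRICE_PER_GB_HIGH = 0.15


def storage_cost_range(stored_gb: float) -> tuple:
    """Return the (lowest, highest) monthly storage cost for the given volume."""
    return stored_gb * PRICE_PER_GB_LOW, stored_gb * PRICE_PER_GB_HIGH


def allowed_downtime_minutes(uptime_percent: float = 99.9, days: int = 30) -> float:
    """Return the downtime budget, in minutes, implied by an uptime guarantee."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)
```

Under these assumptions, storing 500 GB costs between about $60 and $75 a month, and a 99.9 percent guarantee still leaves room for roughly 43 minutes of downtime in a 30-day month.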

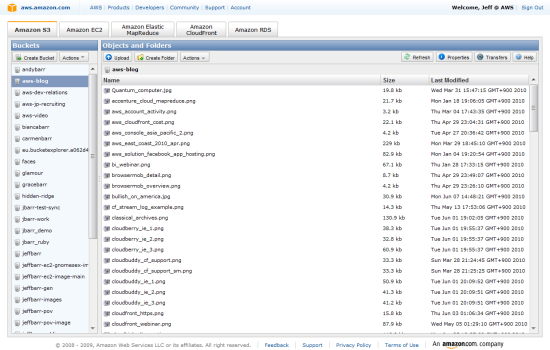

AWS management console: S3 (Image credit)

By freeing companies from worrying about storage space, uptime, or server maintenance costs while delivering substantial savings, Amazon S3 has become popular with a broad spectrum of clients. WordPress.com, Twitter, and Slideshare.net, along with thousands of others, are among its users. SmugMug, a photo hosting service, claims to have saved over $1,000,000 on storage costs after just 7 months of using Amazon S3.

Amazon Elastic Compute Cloud (EC2)

Amazon EC2 is a web service that enables its customers to run and host their applications in the cloud rather than on local machines. Customers use a web interface to administer a virtual machine provided by Amazon.


Companies of all sizes have appreciated the opportunity of using massive computer arrays by purchasing access to them, without having to acquire any hardware. EC2 makes it possible to create high-demand web applications with thousands of users more affordably than ever before. The New York Times relies on Amazon EC2, as well as the S3 storage service, to host its TimesMachine, which provides access to the newspaper’s public archives. IBM uses Amazon EC2 to provide pay-as-you-go access to its database and content management software, such as IBM DB2 and Lotus.

EC2 offers a wide range of virtual machines, so it is possible to choose the configuration best suited to any specific application. This, together with the fact that the number and types of virtual machines can be changed at any moment, helps Amazon EC2 achieve maximum scalability. Each client can buy just the resources they need, and when their needs change, they can change the number of instances in use accordingly. To ensure resistance to failure, instances can be placed in different geographic locations and time zones.

The EC2 environment is based on Xen, an open source virtual machine monitor. Amazon EC2 allows you to use and create your own Amazon Machine Images, or AMIs, which serve as templates for your instances. While most AMIs are based on Linux, other operating systems, such as OpenSolaris and Windows Server, are also supported.

The costs of using EC2 consist of an hourly charge per virtual machine and a data transfer charge. The “pay-as-you-go” system and great scalability opportunities have contributed to Amazon’s naming their cloud “elastic.”
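The two-part pricing described above can be expressed as a simple formula. The sketch below assumes a flat hourly rate per instance and a flat per-gigabyte transfer rate; the rates in the usage note are hypothetical placeholders, not actual Amazon prices.

```python
def ec2_cost(instances: int,
             hours: float,
             hourly_rate: float,
             transfer_gb: float,
             transfer_rate_per_gb: float) -> float:
    """Estimate an EC2 bill as instance-hours plus data transfer.

    All rates are caller-supplied assumptions; real pricing may vary by
    instance type, region, and usage tier.
    """
    compute = instances * hours * hourly_rate
    transfer = transfer_gb * transfer_rate_per_gb
    return compute + transfer
```

For example, two instances running for 720 hours at a hypothetical $0.10 per hour, plus 100 GB of transfer at a hypothetical $0.15 per GB, come to about $159. Halving the number of instances when demand drops cuts the compute part of the bill in half, which is the practical meaning of “pay-as-you-go.”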

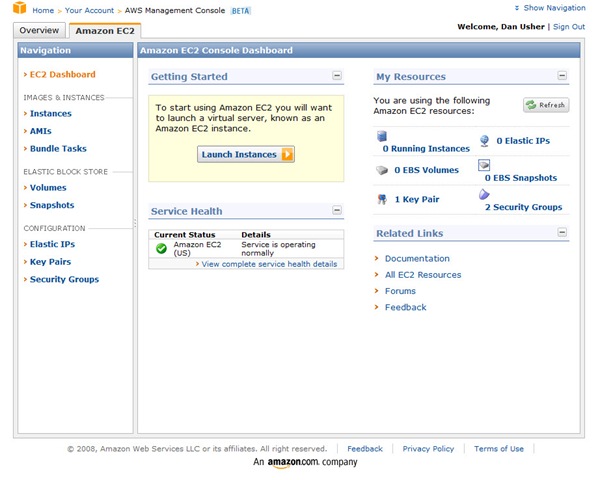

AWS management console: EC2 (Image credit)


Amazon SimpleDB

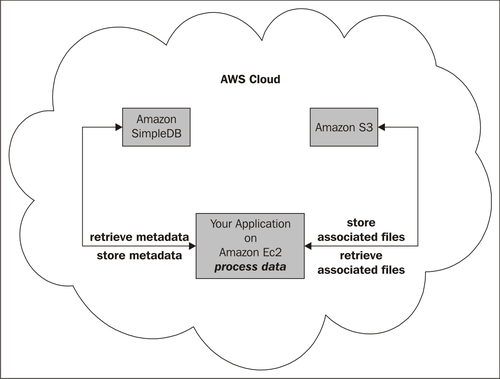

Amazon SimpleDB is a service for storing, processing, and querying structured datasets, designed to be used in concert with Amazon EC2 and S3.

Although not a relational database itself, SimpleDB provides core database functionality, such as real-time lookup and querying of structured data, without the operational complexity. With SimpleDB, there is no need for schemas; the service automatically indexes your data and provides an easy-to-use API for storage and access. The data model is as simple as the service’s name suggests: collections of “items” (small hash tables containing attribute-value pairs) are organized into “domains.”
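The data model described above can be pictured with a small in-memory sketch. This is a toy illustration only, not the SimpleDB API: the `Domain` class, its methods, and the choice to keep values as strings with several values per attribute are assumptions made for the example.

```python
from collections import defaultdict


class Domain:
    """A toy stand-in for a domain: a named collection of schemaless items."""

    def __init__(self, name: str):
        self.name = name
        # item name -> {attribute name -> set of string values}
        self.items = {}

    def put(self, item_name: str, **attributes) -> None:
        """Add attribute-value pairs to an item, creating the item if needed."""
        item = self.items.setdefault(item_name, defaultdict(set))
        for attribute, value in attributes.items():
            item[attribute].add(str(value))

    def select(self, attribute: str, value) -> list:
        """Return the names of items whose attribute contains the given value."""
        return sorted(
            name for name, attrs in self.items.items()
            if str(value) in attrs.get(attribute, ())
        )


photos = Domain("photos")
photos.put("img1", owner="ann", tag="beach")
photos.put("img2", owner="bob")
photos.put("img1", tag="sunset")  # no schema: attributes can be added at any time
```

Because there is no schema, `img1` and `img2` can carry different attributes, and a lookup such as `photos.select("tag", "sunset")` works without any table definition.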

SimpleDB is impressively fast, even when dealing with large amounts of data. As with S3 and EC2, it offers on-demand scaling: SimpleDB customers are charged only for actual data storage and transfer. Transferring data to other Amazon Web Services is free of charge.

Using SimpleDB together with EC2 and S3 (Image credit)


The challenges and mistakes to avoid with AWS

Thanks to their high reliability and cost-saving approach, Amazon Web Services are becoming increasingly popular with companies on both sides of the Atlantic. However, while Amazon Web Services make some things easier to achieve, other things are quite complicated for a newcomer.

Here are some typical challenges that new customers of Amazon’s cloud computing services face and typical mistakes they make.

Scalability does not mean autoscaling

Mistake: Some IT executives forget that scaling and adjusting the resources you use do not happen automatically by default. With cloud computing, there still has to be a person who monitors an application’s performance and the resources it uses. For example, as soon as an application running in EC2 needs more memory, this person should determine how much more is needed and add a virtual instance of the appropriate size.

Without human management or a custom automation application, there is still a risk of not providing enough resources for an application or overpaying for surplus resources.
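A custom automation application of the kind mentioned above usually boils down to a rule that maps observed load to a target number of instances. The following is a minimal sketch of such a rule; the thresholds, limits, and function name are hypothetical and would need tuning for a real workload.

```python
def desired_instances(current: int,
                      avg_utilization: float,
                      scale_up_at: float = 0.75,
                      scale_down_at: float = 0.30,
                      minimum: int = 1,
                      maximum: int = 10) -> int:
    """Return the target instance count for the observed average utilization.

    avg_utilization is a fraction between 0 and 1 (for example, memory or
    CPU use averaged over all running instances).
    """
    if avg_utilization > scale_up_at:
        target = current + 1   # under-provisioned: add an instance
    elif avg_utilization < scale_down_at:
        target = current - 1   # over-provisioned: stop paying for idle capacity
    else:
        target = current       # within the comfortable band: do nothing
    return max(minimum, min(maximum, target))
```

The two thresholds address both risks named above: the upper one guards against running short of resources, and the lower one against overpaying for capacity that sits idle.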

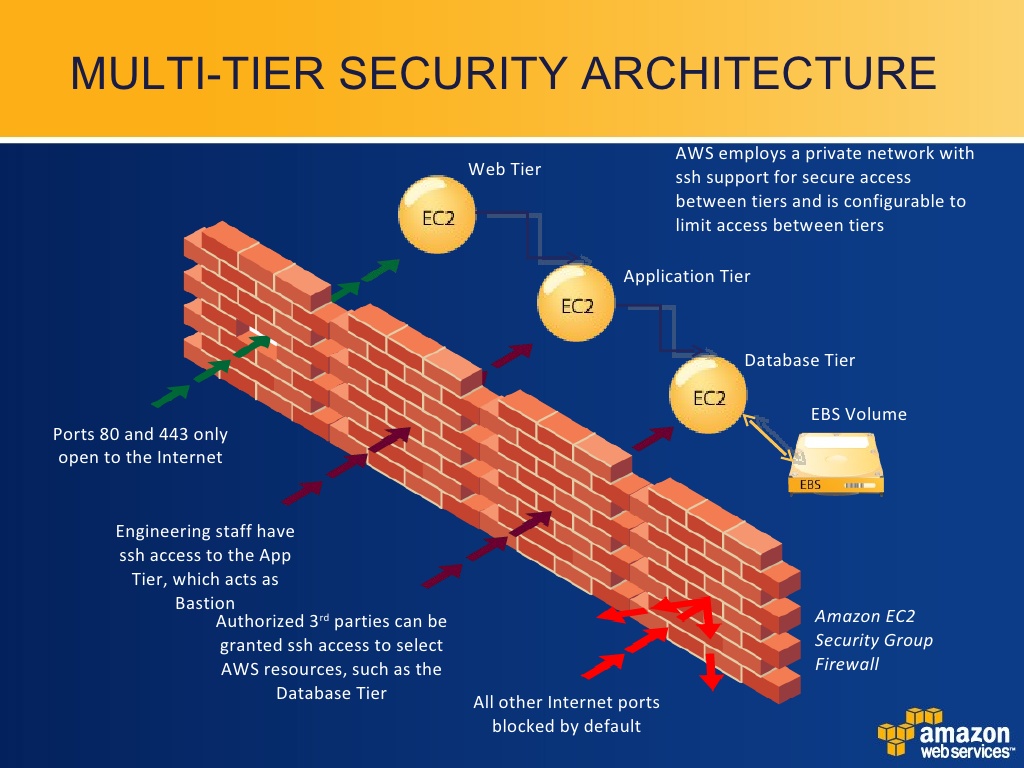

Disregarding safety of data

Mistake: Some people consider backing up as an unnecessary formality.


True, Amazon has a great reputation: so far, no complaints of data loss, corruption, or unavailability have been heard from its clients. The point is, however, that should anything happen to your data because of your application’s behavior, bugs, or unforeseen user actions, the data will be lost. Therefore, creating backups and taking security measures are as relevant as ever.

Implementing multi-tier security on AWS (Image credit)


Nonstandard URLs and indexing

Challenge: When handling large volumes of dynamic data, Amazon Web Services may produce nonstandard, dynamic URLs, which can hinder search engine optimization.

Solution: Whenever possible, rewriting URLs is a very helpful practice that is likely to solve the problem. URLs rewritten to look standard are more user-friendly and work better with search engines.
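As an illustration of URL rewriting, the sketch below turns a query-string URL into a clean, readable path. The parameter names (`id`, `category`, `title`) and the target path layout are assumptions made for the example; a real site would map its own parameters, usually in the web server or application routing layer.

```python
import re
from urllib.parse import parse_qs, urlsplit


def slugify(text: str) -> str:
    """Lowercase the text and replace runs of non-alphanumerics with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def rewrite(url: str) -> str:
    """Map a dynamic URL to a clean path; leave other URLs' paths unchanged."""
    parts = urlsplit(url)
    query = parse_qs(parts.query)
    category = query.get("category", [""])[0]
    title = query.get("title", [""])[0]
    item_id = query.get("id", [""])[0]
    if not (category and title and item_id):
        return parts.path
    return f"/{slugify(category)}/{slugify(title)}-{slugify(item_id)}"
```

With this mapping, `http://example.com/item.php?id=42&category=Cameras&title=Nikon%20D90` becomes `/cameras/nikon-d90-42`, which is easier for both visitors and search engines to read.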

Another useful thing to do is to create an RSS feed and a sitemap, which will help search engines index your pages faster. Amazon even provides some ready-to-use code for an RSS feed, but creating a custom feed is probably what most expert developers will do.
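A sitemap is a short XML file listing the pages you want indexed. The following minimal sketch builds one with Python’s standard library, using the namespace defined by the Sitemaps protocol; the `build_sitemap` name and the example URLs are illustrative.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps protocol (sitemaps.org).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls) -> str:
    """Return a sitemap XML document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    "http://example.com/",
    "http://example.com/cameras/nikon-d90-42",
])
```

The resulting string can be saved as `sitemap.xml` at the site root and submitted to search engines.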

Additionally, consider joining the Amazon Associates program. It is an easy way to boost your search engine ranking by getting connected to millions of Amazon’s own pages. Joining is free, but the price you pay is the need to place advertisements for Amazon’s products somewhere on your website. This is, in fact, how Amazon Associates works: you link to Amazon and get paid for the sales those links generate, with your growing search engine ranking as a side effect. For many companies, the program may not be an appropriate option, but for some it can prove to be a winning decision.

Conclusion

While cloud computing has the potential to provide a range of benefits to businesses of any size and in any industry, the cloud has its own hidden challenges. Those who want to benefit from this promising technology should be ready to deal with them.

Done right, cloud computing efforts may turn into a huge winner, saving considerable amounts of money, increasing your applications’ performance, and providing 24/7 availability and scalability.