Analyzing Satellite Imagery with TensorFlow to Automate Insurance Underwriting

Image classification models

Beauty is in the eye of the beholder. While people are blessed with the gift of vision, which comes almost effortlessly, computer vision is a hard science. Enabling a machine to recognize and distinguish between images, and between the objects within them, requires advanced tools for those working in machine learning.

Over the past decade, we have observed a great leap forward in deep learning and machine learning, which brought about a variety of tools that improve image recognition and classification and apply them to real-life problems across multiple industries.

At a recent TensorFlow meetup in London, Zbigniew Wojna of TensorFlight gave an overview of several working methods for achieving reasonable image recognition accuracy. For instance, Inception-v3 reaches a top-5 image classification error of only 3.46%. Zbigniew took part in a project at Google where the Inception-v3 model showed good results in recognizing street signs to update Google Maps.
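The "top-5" error counts a prediction as correct when the true class appears anywhere among the model's five highest-scoring classes. A minimal NumPy sketch of the metric follows; `top_k_error` is a hypothetical helper name, and the scores are made-up toy values.

```python
import numpy as np


def top_k_error(logits: np.ndarray, labels: np.ndarray, k: int = 5) -> float:
    """Fraction of samples whose true label is NOT among the k highest-scoring classes."""
    # argsort is ascending, so the last k columns hold the indices of the k largest scores
    top_k = np.argsort(logits, axis=1)[:, -k:]
    # a sample is a hit if its label appears anywhere in its top-k row
    hits = np.any(top_k == labels[:, None], axis=1)
    return float(1.0 - hits.mean())


# Two toy samples over 7 classes, both with true class 0
logits = np.array([
    [0.1, 0.5, 0.2, 0.9, 0.3, 0.05, 0.4],  # class 0 ranks 6th: a top-5 miss
    [0.9, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6],   # class 0 ranks 1st: a hit
])
labels = np.array([0, 0])
print(top_k_error(logits, labels, k=5))  # 0.5
```

Top-5 error is always less than or equal to top-1 error for the same model, which is why it is the more forgiving of the two ImageNet metrics.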

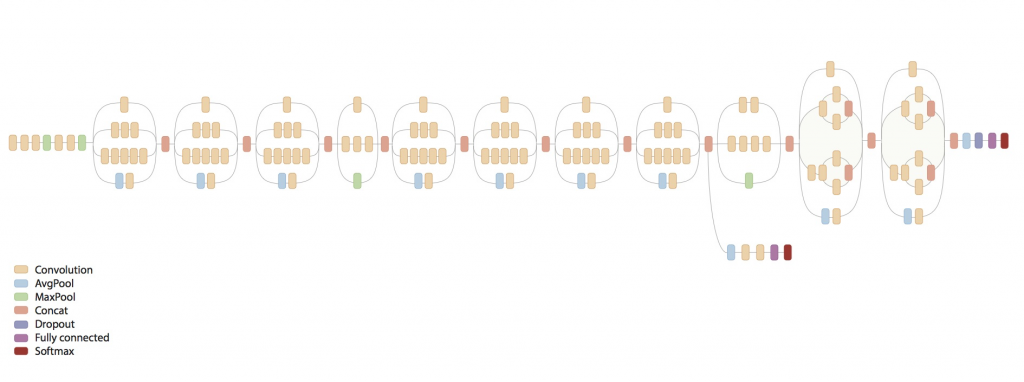

Inception-v3 high-level architecture (Image credit)

“So, we process our shots with part of Inception; we cut it after 14 layers. Inception is a very efficient network, it has the group convolution, which allows for much faster processing, up to three times faster. But we don’t want to predict cats or faces, so we don’t need so many layers, and we can cut it after just a few layers, and it works really well for text, as well.” – Zbigniew Wojna, TensorFlight
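The quote describes reusing only the early part of a pretrained network as a feature extractor. The sketch below illustrates that idea in plain NumPy under stated assumptions: the backbone is treated as an ordered list of layer functions, and the toy layers (`double_channels`, `avg_pool_2x2`) and the helper `truncate_backbone` are hypothetical stand-ins for Inception modules, not TensorFlight's code.

```python
from typing import Callable, Sequence

import numpy as np

Layer = Callable[[np.ndarray], np.ndarray]


def double_channels(x: np.ndarray) -> np.ndarray:
    # Toy "convolution": append a ReLU copy of the input, doubling the channel count
    return np.concatenate([x, np.maximum(x, 0.0)], axis=-1)


def avg_pool_2x2(x: np.ndarray) -> np.ndarray:
    # Toy pooling: average non-overlapping 2x2 windows of an (H, W, C) array (H, W even)
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))


def truncate_backbone(layers: Sequence[Layer], keep: int) -> Layer:
    """Return a feature extractor that runs only the first `keep` layers."""
    if not 0 < keep <= len(layers):
        raise ValueError(f"keep must be in 1..{len(layers)}, got {keep}")
    kept = list(layers[:keep])

    def extract(x: np.ndarray) -> np.ndarray:
        for layer in kept:
            x = layer(x)
        return x

    return extract


backbone = [double_channels, avg_pool_2x2] * 4  # 8 toy layers
extractor = truncate_backbone(backbone, keep=3)
features = extractor(np.ones((64, 64, 3)))      # keeps higher resolution, skips 5 layers
print(features.shape)  # (32, 32, 12)
```

In Keras, the same idea is usually expressed by wrapping a pretrained `tf.keras.applications.InceptionV3` in a new `tf.keras.Model` whose `outputs` is the output tensor of an intermediate layer. The source does not say which layer corresponds to the "14 layers" mentioned in the talk.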

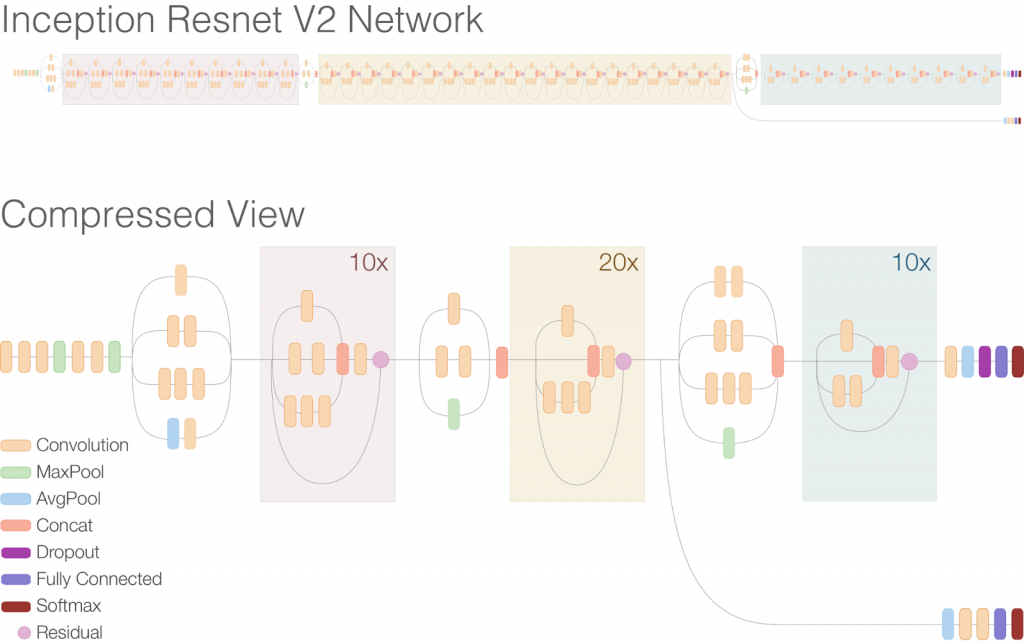

To further improve an image classification model, one may employ batch normalization, which is reported not to compromise on accuracy, while reducing training steps by 14x. Another outstanding model is Inception ResNet-v2—combining the Inception architecture and residual connections—which achieves 3.8% error rate across top 5 metrics.

A high-level architecture of Inception ResNet-v2 (Image credit)
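Batch normalization and residual connections are the two building blocks named above. The NumPy sketch below shows their core arithmetic only: training-mode normalization over the batch axis, and a residual addition with an optional scale factor (the Inception-ResNet authors scale residuals down before adding them to stabilize training). Function names are hypothetical, and running statistics, convolutions, and backpropagation are deliberately omitted.

```python
from typing import Callable

import numpy as np


def batch_norm(x: np.ndarray, gamma: np.ndarray, beta: np.ndarray,
               eps: float = 1e-5) -> np.ndarray:
    """Training-mode batch normalization of an (N, features) array over axis 0."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)  # zero mean, unit variance per feature
    return gamma * x_hat + beta              # learnable scale and shift


def residual_block(x: np.ndarray, transform: Callable[[np.ndarray], np.ndarray],
                   scale: float = 1.0) -> np.ndarray:
    """y = ReLU(x + scale * F(x)): the input skips past F and is added back."""
    return np.maximum(x + scale * transform(x), 0.0)


rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(256, 8))
y = batch_norm(x, gamma=np.ones(8), beta=np.zeros(8))
print(y.mean(axis=0).round(6), y.std(axis=0).round(3))  # per-feature mean ~0, std ~1
```

The skip path means a block only has to learn a correction to its input, which is what makes very deep networks such as Inception ResNet-v2 trainable.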

So, how does all this work to solve real-world problems?

Insurance bogged down by manual property inspection

When evaluating policies, insurance and reinsurance companies may know little or nothing about the buildings they cover, which exposes them to high risk. Such information includes the building footprint, number of storeys, construction type, or square footage. Manual inspection of just one building may take a day; multiply that across all the properties an insurance or reinsurance company covers. Thus, manual inspection is inefficient in terms of both human resources and time.

As part of his talk, Zbigniew shared some insights into TensorFlight, the solution his company is building to automate property inspection and shorten the underwriting life cycle.

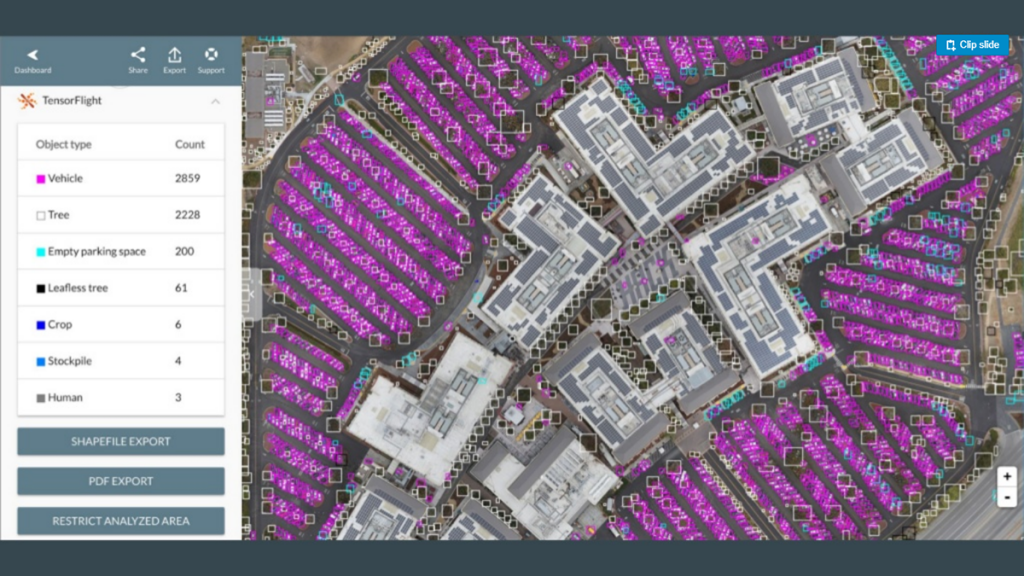

TensorFlight’s dashboard (Image credit)

TensorFlight’s dashboard (Image credit)This project is designed to analyze satellite, aerial, drone, and street view imagery of buildings all over the world to provide insurance with risk-related details of property. With image recognition and classification powered by TensorFlow, the solution is able to analyze such risk-critical data as:

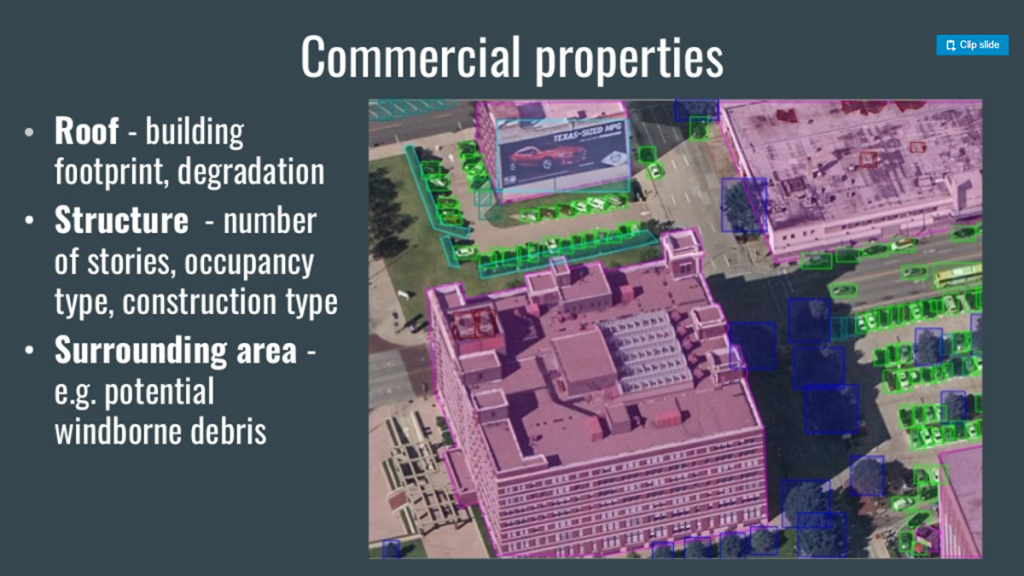

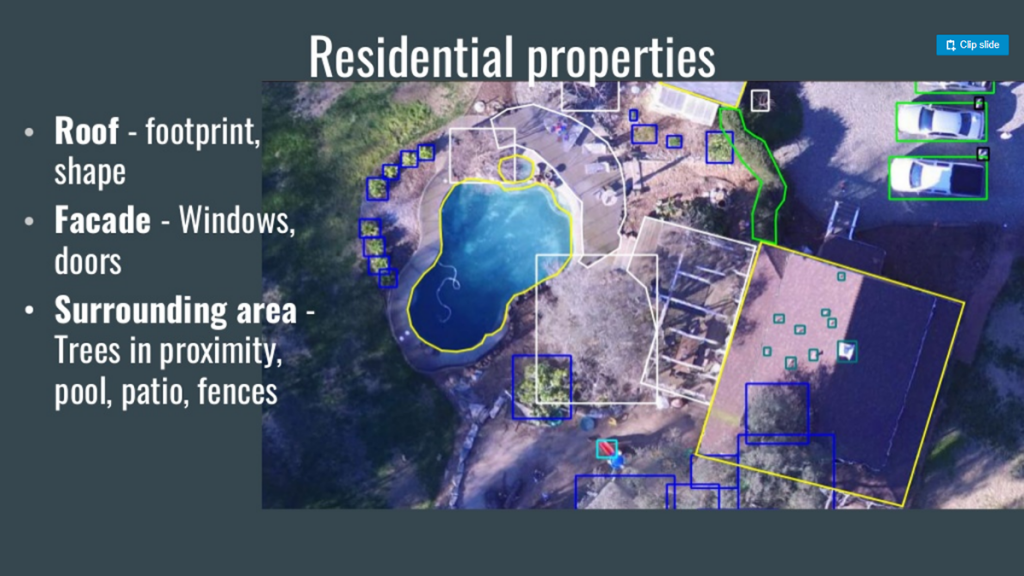

- building’s roof (e.g., age, shape, material, degradation, etc.)

- building’s structure (e.g., height, occupancy type, construction type, etc.)

- surrounding area (e.g., parking space or wind-borne debris)
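To make the three categories above concrete, here is one possible way to hold the extracted attributes per property. This is a hypothetical schema written with Python dataclasses; the class names, field names, and units are illustrative assumptions, not TensorFlight's data model. The `missing_fields` helper lists attributes the imagery models have not filled in yet, which could flag a property for manual review.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class RoofFeatures:
    shape: Optional[str] = None          # e.g. "flat", "gable" (hypothetical labels)
    material: Optional[str] = None
    age_years: Optional[float] = None
    degradation: Optional[float] = None  # hypothetical 0.0 (none) to 1.0 (severe) scale


@dataclass
class StructureFeatures:
    height_m: Optional[float] = None
    storeys: Optional[int] = None
    occupancy_type: Optional[str] = None
    construction_type: Optional[str] = None


@dataclass
class SurroundingsFeatures:
    parking_spaces: Optional[int] = None
    windborne_debris_sources: List[str] = field(default_factory=list)


@dataclass
class PropertyRiskProfile:
    property_id: str
    roof: RoofFeatures = field(default_factory=RoofFeatures)
    structure: StructureFeatures = field(default_factory=StructureFeatures)
    surroundings: SurroundingsFeatures = field(default_factory=SurroundingsFeatures)

    def missing_fields(self) -> List[str]:
        """Dotted names of attributes that are still None."""
        missing = []
        for section in ("roof", "structure", "surroundings"):
            for key, value in asdict(getattr(self, section)).items():
                if value is None:
                    missing.append(f"{section}.{key}")
        return missing


profile = PropertyRiskProfile("bldg-001", roof=RoofFeatures(shape="flat", material="metal"))
print(profile.missing_fields())
```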

Analyzing commercial buildings (Image credit)

Apart from the obvious criteria, TensorFlight can also classify objects that pose hidden risks for insurers. Take greenery on the territory adjacent to the building. Residents may view trees as nice scenery, while insurers remember that trees may catch fire or fall down in a storm. The solution can even distinguish between live and dead trees, since dead trees are more likely to catch fire.
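The source does not say how TensorFlight separates live trees from dead ones. As an illustration only, the sketch below swaps in a classic remote-sensing proxy, the Normalized Difference Vegetation Index, NDVI = (NIR - Red) / (NIR + Red): healthy canopy reflects strongly in near-infrared, so it scores high, while dead or dry vegetation scores lower. It assumes imagery with a near-infrared band (not all aerial or street view imagery has one), a vegetation mask from an upstream model, and a hypothetical threshold of 0.3.

```python
import numpy as np


def ndvi(nir: np.ndarray, red: np.ndarray, eps: float = 1e-9) -> np.ndarray:
    """Normalized Difference Vegetation Index per pixel, in [-1, 1]."""
    nir = nir.astype(np.float64)
    red = red.astype(np.float64)
    return (nir - red) / (nir + red + eps)  # eps avoids division by zero on dark pixels


def flag_stressed_vegetation(nir: np.ndarray, red: np.ndarray,
                             vegetation_mask: np.ndarray,
                             threshold: float = 0.3) -> np.ndarray:
    """Mark vegetation pixels whose NDVI falls below a hypothetical threshold."""
    return vegetation_mask & (ndvi(nir, red) < threshold)


# 2x2 toy reflectance tile; the bottom-left pixel is not vegetation (e.g. a roof)
nir = np.array([[0.50, 0.20], [0.05, 0.45]])
red = np.array([[0.08, 0.15], [0.04, 0.30]])
mask = np.array([[True, True], [False, True]])
print(flag_stressed_vegetation(nir, red, mask))
```

In practice, a learned classifier over image patches would replace the fixed threshold; the index is shown here because it makes the physical intuition explicit.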

Analyzing residential buildings (Image credit)

Another example is a parking lot. Why would an insurer bother? To estimate activity around the building, counting empty parking spots may help. For a multi-storey building, the number of doors or gates indicates the number of exits available in case of a fire, an earthquake, a collapse, or any other emergency.
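Counting empty spots reduces to a simple aggregation once a detector has scored each parking spot. The sketch below assumes a hypothetical upstream model that outputs one "occupied" probability per detected spot; the function name, the 0.5 threshold, and the scores are illustrative.

```python
from typing import Dict, Sequence, Union


def parking_occupancy(spot_scores: Sequence[float],
                      threshold: float = 0.5) -> Dict[str, Union[int, float]]:
    """Summarize per-spot 'occupied' probabilities into counts and an occupancy rate."""
    if not spot_scores:
        raise ValueError("no parking spots detected")
    occupied = sum(1 for score in spot_scores if score >= threshold)
    total = len(spot_scores)
    return {
        "total": total,
        "occupied": occupied,
        "empty": total - occupied,
        "occupancy_rate": occupied / total,
    }


summary = parking_occupancy([0.9, 0.1, 0.7, 0.2, 0.05, 0.6, 0.95, 0.3])
print(summary)
```

A single snapshot says little about activity; comparing occupancy across several captures of the same lot would be a more robust signal.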

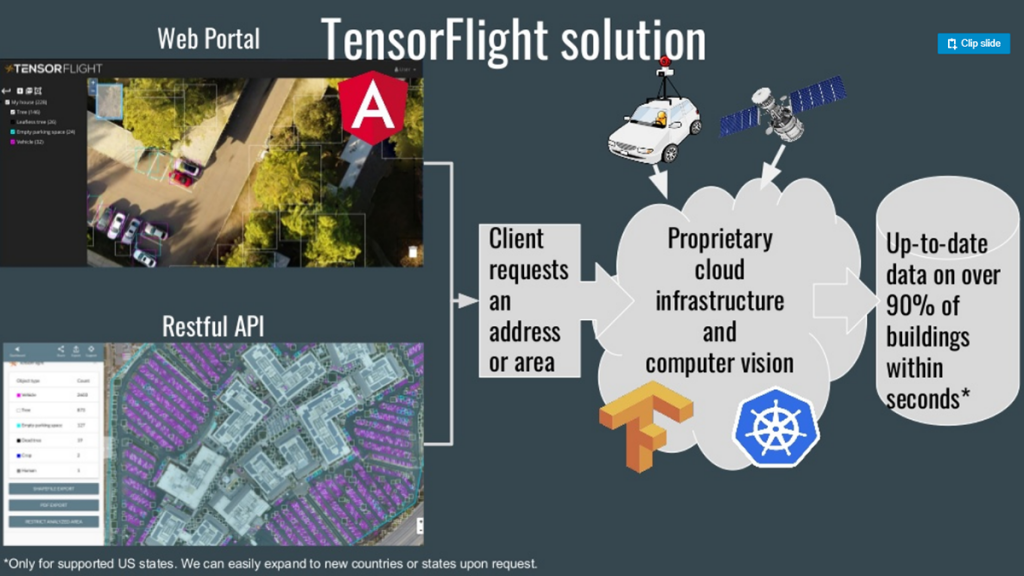

The working mechanisms behind TensorFlight (Image credit)

“We look at the aerial, satellite, street view, and oblique imagery to predict different features. Some features that may be relevant are a roof type, a roof slope, or a swimming pool. Because if you have a swimming pool, it’s a more expensive policy, and there is a bigger chance you will have an accident. And these things are kind of very important for insurance.” – Zbigniew Wojna, TensorFlight
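The quote mentions predicting one feature, such as a swimming pool, from several kinds of imagery. How TensorFlight combines them is not described; one common approach is late fusion, where each view gets its own prediction and the per-view probabilities are averaged. The sketch below assumes hypothetical view names, optional per-view weights, and that some views may be unavailable (`None`) for a given property.

```python
from typing import Mapping, Optional


def fuse_views(view_probs: Mapping[str, Optional[float]],
               weights: Optional[Mapping[str, float]] = None) -> float:
    """Late fusion: weighted average of per-view probabilities for one binary feature."""
    available = {view: p for view, p in view_probs.items() if p is not None}
    if not available:
        raise ValueError("no imagery available for this property")
    # Views without an explicit weight count with weight 1.0
    w = {view: (weights or {}).get(view, 1.0) for view in available}
    total = sum(w.values())
    if total <= 0:
        raise ValueError("weights of available views must sum to a positive value")
    return sum(w[view] * p for view, p in available.items()) / total


# Hypothetical per-view "has swimming pool" probabilities; street view is missing
pool_probs = {"satellite": 0.8, "aerial": 0.9, "street_view": None, "oblique": 0.6}
print(fuse_views(pool_probs))                   # plain average of the three views
print(fuse_views(pool_probs, {"aerial": 2.0}))  # trust aerial imagery twice as much
```

Averaging probabilities is the simplest choice; averaging logits or training a small model on the per-view outputs are common alternatives.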

Buildings are naturally prone to wear and tear, and their surroundings change over time, so it is important to analyze up-to-date data. TensorFlight claims to have a database of high-quality imagery that is 2 to 12 months old, depending on the location.
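A pipeline that depends on recent imagery will typically filter captures by acquisition date before running any model. The sketch below is a hypothetical illustration: the `Capture` record, the source names, the dates, and the 365-day default cutoff are assumptions, not details from the talk.

```python
from datetime import date, timedelta
from typing import List, NamedTuple


class Capture(NamedTuple):
    source: str
    captured_on: date


def fresh_captures(captures: List[Capture], today: date,
                   max_age_days: int = 365) -> List[Capture]:
    """Keep captures no older than max_age_days, newest first."""
    cutoff = today - timedelta(days=max_age_days)
    fresh = [c for c in captures if c.captured_on >= cutoff]
    return sorted(fresh, key=lambda c: c.captured_on, reverse=True)


captures = [
    Capture("satellite", date(2018, 3, 15)),
    Capture("aerial", date(2016, 9, 1)),       # older than the cutoff, dropped
    Capture("street_view", date(2017, 7, 20)),
]
print([c.source for c in fresh_captures(captures, today=date(2018, 6, 1))])
```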

According to the project’s documentation, the system achieves 90% accuracy in image recognition and classification. As already mentioned, the solution is driven by TensorFlow, while other technologies under the hood include Kubernetes, PostGIS, AngularJS, etc. (We have previously written about automating the deployment of TensorFlow models on Kubernetes.) For technical details, you may also check out TensorFlight’s GitHub repo.

TensorFlight already has 1,000+ users worldwide. Numerous research studies suggest that, in the insurance sector alone, the market for similar drone-based solutions will exceed $1 billion by 2020.



About the expert

Zbigniew Wojna is a deep learning researcher and a co-founder of TensorFlight, a company that provides remote commercial property inspection for reinsurance enterprises based on satellite and street view imagery. His primary interest lies in finding and solving research problems around 2D machine vision applications. Zbigniew is currently in the final stage of his Ph.D. at University College London. His Ph.D. research, which already has 1,000+ citations, was conducted in close collaboration with Google Research. During his Ph.D., Zbigniew worked with the DeepMind Health Team, the Deep Learning Team for Google Maps in collaboration with Google Brain, and the Facebook AI Research Lab in Paris.