Top Data Integration Challenges: Meet DQ, CDI, EAI, DW, and BI

This list of challenges is by no means exhaustive, so feel free to add your own.

Data quality (DQ)

1. Data quality criteria and standards are subjective

To create rules for ensuring data quality, an organization needs a set of criteria and data standards. These metrics should estimate the value of aggregated data and eliminate data contention. The first challenge is that information is commonly spread across multiple heterogeneous locations, and data quality requirements differ from source to source. The second challenge is that the concept of quality is subjective: the value of information can vary among users, for example between business and technical departments. Therefore, getting all stakeholders to collaborate when defining data quality metrics is an issue in itself.

2. Unexpected data anomalies

Improving an organization’s data is impossible without adequate data cleansing, because incorrect data entries are so common. The causes of data inaccuracy vary from systematic errors to false statements, from data misinterpretation to incorrect analysis results, and so on. We need to check the content, its currency and accuracy, and make all the necessary corrections. Data cleansing involves validating and correcting data values against established criteria, and the main goal is to automate this process. However, many unexpected data anomalies still require additional attention to ensure proper data quality.
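Validating values against established criteria can be sketched in a few lines of Python. This is only an illustration: the field names (`email`, `age`, `signup_date`) and the rules themselves are assumptions, not criteria taken from any particular tool. Records that break a rule are set aside for review rather than silently corrected.

```python
import re
from datetime import date

# Hypothetical validation rules: field name -> predicate returning True for a valid value.
RULES = {
    "email": lambda v: isinstance(v, str)
    and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    "age": lambda v: isinstance(v, int) and 0 <= v <= 130,
    "signup_date": lambda v: isinstance(v, date) and v <= date.today(),
}


def validate(record):
    """Return the names of fields that are missing or break their rule."""
    return [
        field
        for field, is_valid in RULES.items()
        if field not in record or not is_valid(record[field])
    ]


def split_clean_and_anomalous(records):
    """Separate records that pass every rule from those needing attention."""
    clean, anomalies = [], []
    for record in records:
        failed = validate(record)
        if failed:
            anomalies.append((record, failed))
        else:
            clean.append(record)
    return clean, anomalies
```

The anomalies list keeps the names of the failed fields next to each record, so the unexpected cases can be routed to a person instead of being lost.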

3. Duplication and contention

There is plenty of duplicate information across multiple sources, and it has to be eliminated before it reaches the data warehouse. Otherwise, an explosion of data volumes is inevitable. Another problem is that information from different locations may conflict. That is where implementing data quality metrics becomes critical. To provide accurate data integration, Extract, Transform, and Load (ETL) or Enterprise Application Integration (EAI) tools are needed.
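As a rough sketch of what eliminating duplicates and surfacing conflicts might look like, the Python below merges records that share a matching key and reports fields on which the sources disagree. Using a normalized e-mail address as the key is an assumption made for illustration; real ETL tools typically offer far more elaborate matching.

```python
def normalize_key(record):
    """Build a matching key; assumes e-mail identifies a record (illustrative choice)."""
    return record["email"].strip().lower()


def deduplicate(records):
    """Merge records that share a key and report fields where sources disagree."""
    merged, conflicts = {}, []
    for record in records:
        key = normalize_key(record)
        if key not in merged:
            merged[key] = dict(record, email=key)
            continue
        kept = merged[key]
        for field, value in record.items():
            if field == "email":
                continue
            if field not in kept or kept[field] in (None, ""):
                # Fill a gap in the record we already hold.
                kept[field] = value
            elif value not in (None, "") and value != kept[field]:
                # Both sources have a value and they differ: flag, do not overwrite.
                conflicts.append((key, field, kept[field], value))
    return list(merged.values()), conflicts
```

Conflicting values are reported rather than resolved here, because choosing the winner is a matter of the data quality metrics the organization has agreed on.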

4. Having comprehensive data

The completeness of data is one of the main requirements for efficient business decision-making and planning. To improve existing data and avoid errors, losses, and delays, missing or incomplete information needs to be enriched. Default data should be loaded in place of blank values, and outdated information must be replaced. Other enrichment initiatives may include data categorization or managing data relevance.
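Loading default data in place of blank values is simple to illustrate. In the Python sketch below, the field names and the default values are hypothetical, and only `None` and empty strings are treated as blank.

```python
# Hypothetical defaults to load in place of blank values.
DEFAULTS = {"country": "UNKNOWN", "segment": "unclassified"}


def enrich(record, defaults=DEFAULTS):
    """Return a copy of the record with blank or missing fields filled in."""
    enriched = dict(record)
    for field, default in defaults.items():
        if enriched.get(field) in (None, ""):
            enriched[field] = default
    return enriched
```

Returning a copy leaves the source record untouched, which makes it easier to audit what the enrichment step changed.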

5. Keeping data up-to-date is an ongoing process

Because the data environment is fluid, maintaining data quality is an ongoing process. You need to keep your data up-to-date and constantly monitor information streams. The situation inside your data warehouse may be ever-changing, too. The number and the names of data fields may vary, legacy data may become outdated, and the infrastructure of every enterprise evolves day by day. So, be prepared.

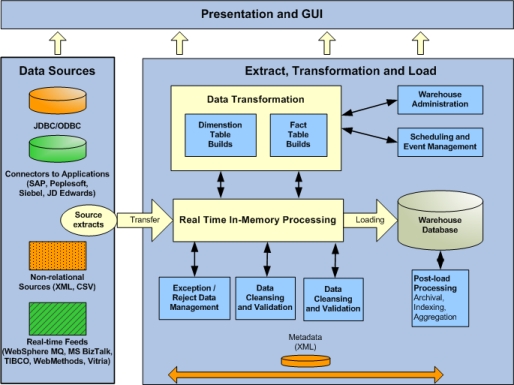

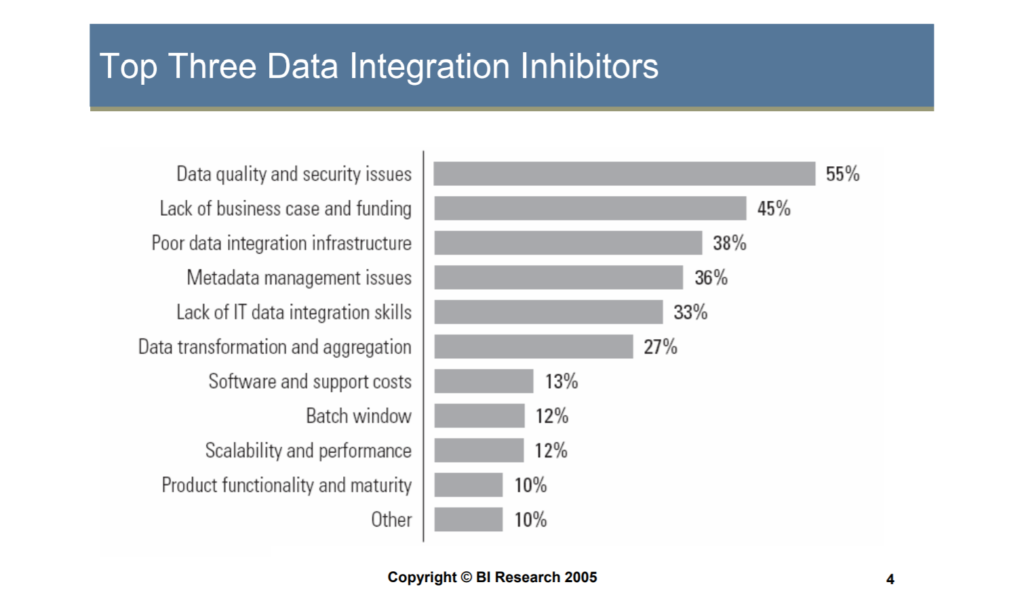

The factors slowing down the adoption and effectiveness of data integration (image credit)


Customer data integration (CDI)

1. Customer data is spread throughout an organization

Customer intelligence is often stored in numerous data sources across the enterprise. This data can be located either inside or outside the company, and either way it requires proper integration. According to Aberdeen, more than half of enterprises struggle with extracting and normalizing customer data captured from multiple data sources. It is the most critical challenge in customer data integration.

2. Compatibility of data structures

The need to standardize the types of customer information is among the top market drivers for Customer Data Management improvement, Aberdeen reports. With data spread across informational silos, each source maintains its own data structure and formats. And there are critical pieces of customer intelligence in these silos that need to be captured. That’s where customer data integration runs into another challenge, because efficient data profiling requires standardized and compatible data structures.
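One common way to reconcile differing structures is to map each source’s fields onto a shared canonical schema. The Python sketch below assumes two made-up sources, `crm` and `billing`, with invented field names. It also returns any fields that have no mapping, so that nothing from a silo is dropped unnoticed.

```python
# Hypothetical per-source field mappings: source field -> canonical field.
FIELD_MAPS = {
    "crm": {"FullName": "name", "EMail": "email", "Tel": "phone"},
    "billing": {"customer_name": "name", "contact_email": "email", "phone_no": "phone"},
}


def to_canonical(source, record):
    """Rename a source record's fields to the canonical schema.

    Returns the canonical record and a sorted list of unmapped source fields.
    """
    mapping = FIELD_MAPS[source]
    canonical = {target: record[src] for src, target in mapping.items() if src in record}
    unmapped = sorted(set(record) - set(mapping))
    return canonical, unmapped
```

For example, `to_canonical("crm", {"FullName": "Ann Lee", "Fax": "n/a"})` yields the canonical record with a `name` field and reports `Fax` as unmapped.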

3. Data accuracy

With customer data integration, companies seek an accurate representation of customer information across multiple business channels and enterprises. To meet this need, CDI tools must provide thorough data cleansing and eliminate duplicates. However, many problems occur if customer data values are contradictory. You need to define accuracy metrics and correction procedures, and data validation inevitably becomes an issue.
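A correction procedure for contradictory values has to state which value wins. One possible rule, shown here purely as an assumption, is "the most recently updated non-empty value survives". The `updated` field and the record layout in this Python sketch are hypothetical.

```python
from datetime import date


def resolve(versions):
    """Combine contradictory versions of one customer into a single record.

    Each version is a dict with an 'updated' date plus attribute fields.
    Versions are applied oldest first, so newer non-empty values overwrite older ones.
    """
    golden = {}
    for version in sorted(versions, key=lambda v: v["updated"]):
        for field, value in version.items():
            if field != "updated" and value not in (None, ""):
                golden[field] = value
    return golden
```

Other rules are just as plausible, such as trusting one source over another per field; the point is that the rule is explicit and can be validated.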

4. Data completeness

Very often, customer information turns out to be useless because it lacks some critical data. A potential client’s name is of little value without an address, e-mail, or other contact information, just as a customer’s phone number is worthless with several characters missing. So, the success of a CDI implementation depends heavily on prior data profiling. To provide efficient and comprehensive customer data integration, proper data profiling standards and correction procedures are needed.
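A basic profiling step is to measure how complete each required field is before integration starts. The following Python sketch computes the share of records with a non-blank value per field; the field names passed in are up to the caller, and the blank test (`None` or empty string) is a simplifying assumption.

```python
def completeness(records, required):
    """Return, per required field, the fraction of records with a non-blank value."""
    total = len(records)
    if total == 0:
        return {field: 0.0 for field in required}
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / total
        for field in required
    }
```

A low score for a contact field such as `email` or `phone` is an early signal that the source needs fixing before its records are worth integrating.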

5. Data timeliness

To create and maintain an accurate and timely representation of a customer, we need to reconcile, standardize, and cleanse customer data in near real time. Data timeliness has a direct impact on the decision-making process, because the business environment is ever-changing. But it is extremely challenging when data is spread across disparate sources, each introducing its own delays. That’s why real-time customer data integration and synchronization are anything but simple when they have to support daily business operations.
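Since every source introduces its own delay, it helps to track when each one was last synchronized. The Python sketch below flags sources whose last sync is older than an allowed age. The source names and the five-minute threshold are arbitrary assumptions.

```python
from datetime import datetime, timedelta


def stale_sources(last_synced, now, max_age=timedelta(minutes=5)):
    """Return the names of sources whose last sync is older than max_age.

    last_synced maps a source name to the datetime of its latest sync.
    """
    return sorted(name for name, ts in last_synced.items() if now - ts > max_age)
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the check easy to test.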

Enterprise application integration (EAI)

1. Different app requirements, objectives, and APIs

Different processes and departments have different objectives and requirements for applications and interfaces. Very often, it is impossible to reach a consensus, because requirements may conflict or departments may be reluctant to share confidential data with other participants. Furthermore, applications may have multiple integration points at several levels, such as data exchange, warehousing, and interfaces. Complex relationships and dependencies in enterprise applications require appropriate business process modeling and mapping. Application integration must cover the integration of all relevant business processes, data, and communication, provided that key relationships within data structures and applications are retained.

2. Data validation and transformation

Incompatible data formats create a technological obstacle for orchestrated business processes, so moving data from one source to another requires data validation and sometimes transformation. Application integration achieves its goals when combined with Extract, Transform, and Load (ETL) processes or an Enterprise Service Bus (ESB). Before transferring data, applications verify that the data meets all the criteria and is therefore useful. In many cases, data cleansing, filtering, correction, or enrichment is needed. Another related issue, which is even more complicated, is the modification of data formats, or semantic mapping. It must be done carefully so that no semantic value is lost during such reorganization.
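To make the point about semantic mapping concrete, here is a small Python sketch that translates one application’s status codes into another’s vocabulary. The codes, the target terms, and the record layout are all hypothetical. The key design choice is that an unmapped code raises an error instead of being passed through or dropped, so no meaning is lost silently.

```python
# Hypothetical mapping from a source application's status codes to the target's terms.
STATUS_MAP = {"A": "active", "I": "inactive", "P": "pending"}


def transform(record):
    """Validate a source record and convert it to the target format.

    Raises ValueError for a status code that has no agreed target meaning.
    """
    code = record["status"]
    if code not in STATUS_MAP:
        raise ValueError(f"unmapped status code: {code!r}")
    return {"customer_id": int(record["id"]), "status": STATUS_MAP[code]}
```

For instance, `transform({"id": "42", "status": "A"})` produces a record with an integer `customer_id` and the status `active`, while a status of `"X"` is rejected.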

3. Establishing interoperability at run time

It is important to have a mechanism that manages transactions and launches appropriate processes if events in one application lead to actions in other applications. At the same time, if several transactions fail or unexpected events arise, your integration environment should remain stable and issue additional compensating transactions. The challenge is to provide such event handling and coordinate all the processes within the integration platform. Appropriate sequencing rules and schedules need to be developed to provide efficient application interactions.
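The idea of compensating transactions can be sketched as follows. This minimal Python example runs a sequence of steps in order and, if one fails, undoes the steps that already completed, in reverse order. The step and compensation callables are supplied by the caller; a real integration platform would add persistence, retries, and logging, which are omitted here.

```python
def run_with_compensation(steps):
    """Run (action, compensation) pairs in order.

    If an action raises, the compensations of all previously completed
    steps are called in reverse order and False is returned.
    Returns True when every action succeeds.
    """
    completed = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            for undo in reversed(completed):
                undo()
            return False
        completed.append(compensate)
    return True
```

Only steps that finished successfully are compensated; the step that failed is assumed to have left nothing behind, which is itself an assumption each real action has to satisfy.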

4. Constant change and customization

The process of application integration is extremely dynamic and requires frequent changes in a variety of integration components. Data values are constantly changing, additional tables and fields may be embedded into applications, business processes may be reorganized, and so on. That’s why integration technology must be scalable and flexible, able to manage large numbers of data sources spread across businesses, and able to keep them synchronized. Providing such automated configuration and adaptation capabilities in an integration engine requires a lot of developer effort and skill.

5. Embedding heterogeneous systems and platforms

One of the biggest IT challenges is the coexistence of Windows/Linux, as well as Java/Oracle/etc. platforms and legacy environments. Effective application integration eliminates the problems of integrating heterogeneous systems without standardizing applications on a single platform. However, this is rather difficult, especially if the applications are owned and operated by different departments or by different companies. In this case, the integration logic within the integration framework must be extremely flexible.

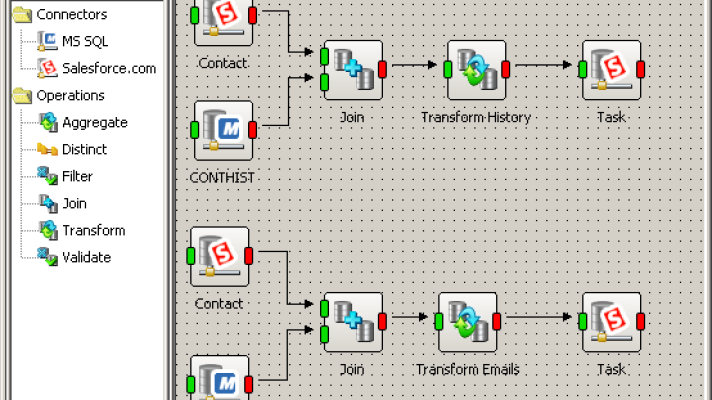

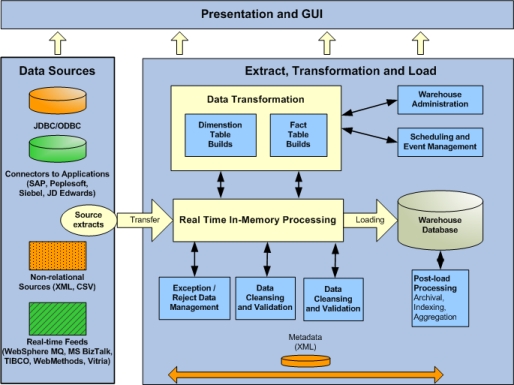

Image credit


Data warehousing (DW)

1. Data Extraction, Transformation and Loading (ETL)

The processes of extracting, transforming, and loading the data are among the most challenging aspects of data warehousing. You often need to pull data out of a variety of sources, and then transform and organize it so that all data items fit together in the data storage. That’s where data warehousing has to deal with multiple data formats, heterogeneous sources, legacy issues, compatibility, and so on. Moreover, the number of enterprise data sources and formats, both external and internal, is growing rapidly, so the problem keeps escalating.

2. Data quality

The data entered into the warehouse must be complete and precise. This is critical for every business process, because decision-making based on inaccurate information results in errors and losses. Therefore, the data needs to be cleansed before warehousing, and appropriate rules and criteria should be developed for these procedures. The major challenge for data warehousing is to provide data intelligence while some of the key data might be missing, incomplete, or inaccurate. For instance, many problems occur when customer information comes with abbreviations, nicknames, or unknown address formats, and such data needs to be transformed.
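Transforming nicknames and abbreviations usually comes down to lookup tables maintained alongside the cleansing rules. The Python sketch below uses two tiny, made-up tables. Real data is messier: "St" can also mean "Saint", for example, so a production rule set would need context that this illustration ignores.

```python
# Hypothetical lookup tables; real ones would be far larger and locale-specific.
NICKNAMES = {"bob": "Robert", "bill": "William", "liz": "Elizabeth"}
ABBREVIATIONS = {"st": "Street", "ave": "Avenue", "rd": "Road"}


def normalize_first_name(name):
    """Replace a known nickname with its formal name; otherwise just tidy the case."""
    cleaned = name.strip()
    return NICKNAMES.get(cleaned.lower(), cleaned.title())


def normalize_address(address):
    """Expand known street-type abbreviations, keeping a trailing comma if present."""
    words = []
    for word in address.split():
        core = word.rstrip(".,")
        trailing = word[len(core):].replace(".", "")
        expanded = ABBREVIATIONS.get(core.lower())
        words.append(expanded + trailing if expanded else word)
    return " ".join(words)
```

Values that match nothing in the tables pass through unchanged, so unknown formats stay visible for later review instead of being mangled.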

3. Correlating warehouse data to operational data

The volatility of business information and the need for a real-time view require continuous data support and updating. Chief officers crave the most current information about the market and enterprises, and real-time data accessibility is becoming a trend. With the fast pace of business today, the data warehouse must evolve to correlate warehouse data to operational data. To meet this need, data warehousing must be tied closely to operational business processes, while enterprises tend to transform all business relationships into digital form. The challenge is to keep up with all operational changes and to hold information that is both very detailed and current. Today, if data sources are out of sync with the real-time situation, data warehousing becomes valueless.

4. Scalability and the limits of a data warehousing system

One of the biggest technology issues today is an unprecedented explosion of data, which creates extreme scalability challenges for data warehousing. As data volumes and warehouses grow, the hardware and software tools must become highly scalable. However, the physical size of a warehouse is not the only limit. The number of end users is growing rapidly, and therefore query contention requires extra attention, too. When a data warehouse is hosted on a single server, multiple reporting operations can easily overburden the server and its storage.

5. Integrating with business environment

Business intelligence (BI) is one of the most popular IT initiatives in enterprises today. Data warehouses are the foundation for BI tools, providing information about the enterprise and its customers across all business channels and lines. The challenge lies in data integration and rebuilding data infrastructures. It is crucial to ensure that all of the enterprise’s business tools and applications support all the necessary databases. Very often, companies run several legacy systems that were adopted at different times and, as a result, the data in these systems may be incompatible.

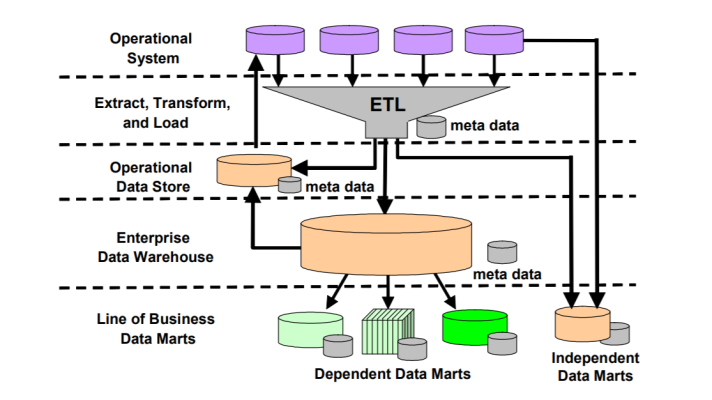

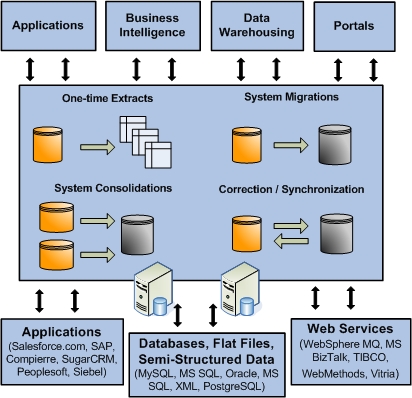

Image credit


Business intelligence (BI)

1. Many data formats and structures are incompatible

Commonly, most of an enterprise’s information is stored in multiple, incompatible formats. Unstructured data such as e-mails, memos, documents, telephone records, terms and conditions of contracts, or customer feedback is spread across the enterprise and can hardly be integrated into a single day-to-day application. For better business decision-making, companies are thinking about integrating unstructured data with structured data so that it can be analyzed together. However, even structured data formats are evolving rapidly, due to the data explosion and dynamic changes in data sources.

2. Consolidating multiple applications

Linking business processes may be challenging because of multiple heterogeneous APIs. Disparate databases, technologies, and applications are deployed across all departments of the organization and require unification. To provide efficient and seamless consolidation, enterprises today need scalable integration technology instead of inflexible custom coding. A lack of infrastructure for data exchange or incompatibilities between systems can make running business applications within enterprises complicated. Or, I’d rather say, exhausting.

3. Information quality and usability are critical

Poor information quality misleads and ruins decision-making, and promotes inefficiency and losses. Multiple versions of the truth or duplicated data are not what you expect from your business intelligence initiatives. That’s why data quality needs to be controlled and monitored constantly to meet the organization’s objectives. The challenge is to provide accurate rather than inconsistent or incomplete information. From comprehensive data mining to making data available anywhere in a timely manner, well-defined data quality processes are needed.

4. Risks and security

Though the growth of business intelligence implementations seems inevitable, it makes an enterprise’s sensitive data more vulnerable. Multiple external and internal data sources may lack inherent security mechanisms, which makes data security one of the top priorities for organizations today. Business intelligence tools are expected to provide powerful security features and support for security standards such as secure encryption of information. Data access is another issue, because the user population within a BI environment is growing rapidly. A well-defined and scalable security model is required to keep data safe as efficiently as possible.

5. BI requirements are constantly changing

As the business environment is changing rapidly and enterprises are tightening their belts to stay competitive, business intelligence requirements are constantly evolving as well. Permanent visibility and BI process scalability should be provided despite any time-critical market changes, which is not so easy. However, many enterprises take on this challenge and tend to replace their passive reports and charts with an active business platform based on adaptive IT architectures. For better or worse, business intelligence tools enhance business communication across enterprises, coordinate resources, and enable companies to interact more quickly in our ever-changing world.

Have you faced other challenges when integrating data within your organization? Share them in the comments.